Facebook claims that it’s among the best in the business at policing dangerous content on the site. That may be true, but new revelations in the Facebook Papers leaked by whistleblower Frances Haugen cast doubt on the company’s commitment to cracking down on hate groups, conspiracy theories, and other harms it knows are baked into its platforms.

And even when Facebook is aware of a problem, it often fails to take the action needed to address it.

The Daily Dot found that a QAnon conspiracy theorist whom Facebook itself held up as a prime example of the most dangerous people on the platform is still on Instagram. There, he boasts about his myriad bans and calls the FDA a cabal dead set on bringing about communism. He also co-owns a “health” business that still has an account on Facebook.

Internal documents in the Facebook Papers refer to him as a superspreader of QAnon and a perfect example of how people get radicalized on its platform. He and a group he ran were banned last November. The documents note that the individual also used Instagram. Yet he’s still there today.

Although the document is redacted, there’s enough information to definitively establish that the company is aware that the individual is involved with the business’ Facebook page and to strongly imply it’s aware of his Instagram account. (The Daily Dot is not identifying the individual, business, or the pages.)

The document that details this individual’s activities, “Harmful Conspiracies Theories: definitions, features, persons,” demonstrates the time and effort Facebook spent studying and understanding how conspiracy theories spread on its platform and the breadth of its knowledge about the people spreading them.

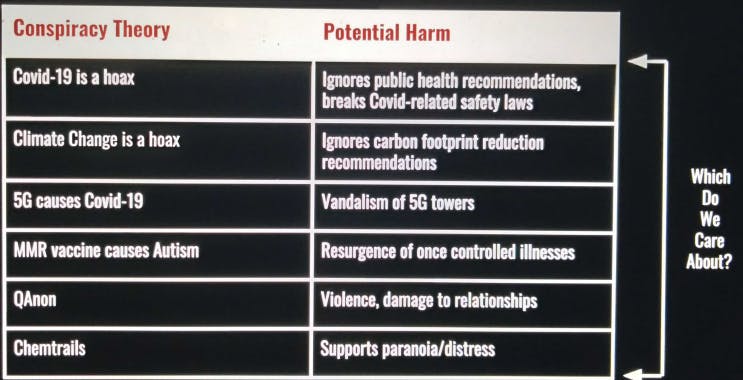

The paper details the numerous ways that believing conspiracy theories harms people’s behavior, health, and safety. It states that such theories cause “paranoia/distress,” “legitimize violence,” and lead to “riskier health and law-related behavior.” It also notes that the theories circulating on its platform “result in damage to Facebook’s brand reputation.”

The paper names several types of conspiracies, such as QAnon and chemtrails, and their potential harms. Facebook implies that QAnon is the worst of these. It alone is said to have the potential to cause “violence [and] damage to relationships.”

It goes on to note that conspiracy theories often overlap with violent or hateful groups and include “apocalyptic call[s] to action.” The sum total of Facebook’s analysis of conspiracy theories generally, and QAnon particularly, is that both are dangerous and divisive and will spread rapidly on its platform if left unchecked.

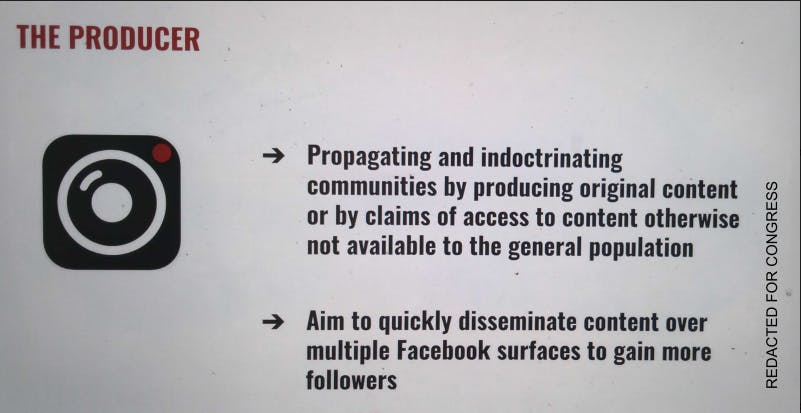

In this paper, Facebook defines four archetypes of conspiracists: producer, recruiter, naïve networker, and seeker. It considers producers the most dangerous.

According to Facebook, a producer will often be a “superspreader” of harmful conspiracy theories, creating and disseminating hundreds of posts containing disinformation every month across multiple Facebook surfaces—Messenger, groups, Instagram, Newsfeed, and comments—to “spread their ideology and become leaders and/or crusaders of their group.”

“Top [community operations] include spam, hate, [dangerous organizations and individuals],” Facebook writes. Producers “constantly look for more avenues of influence,” it adds. It says they can be motivated by ideology, religion, or money.

It also provides an example of a real account it classifies as a producer to show just how problematic such users are to have on the platform. This is the individual the Daily Dot found still using Facebook’s products nearly a year after he was banned.

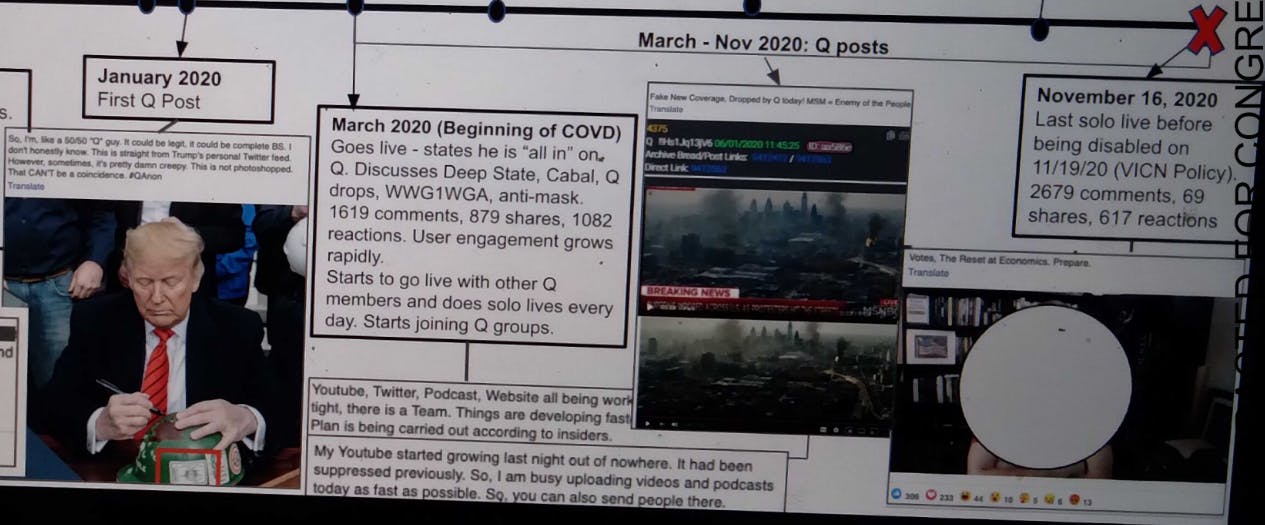

The paper provides a “user journey [map]” of the producer’s account. It says he created an account in 2006. In the early days, it says, he shared “generic benign posts” about daily life and politics. Three years later, in 2009, he launched a wellness business. In 2012, he began sprinkling conspiracy theories into posts about his business and ruminations on life.

In Jan. 2020, this individual went full QAnon, according to Facebook. In a Facebook Live broadcast at the beginning of the pandemic, he discussed a wide range of QAnon theories and catchphrases, the company says. That June, he launched a Facebook group that spread QAnon theories. Within a month, it had nearly 9,000 members.

QAnon is the conspiracy theory that former President Donald Trump is secretly fighting a cabal of pedophiles within the nation’s elite.

He was banned during Facebook’s widespread purge of QAnon accounts. By then, the paper says, he had amassed 40,000 followers.

The paper goes on to provide a detailed biographical history of the individual, as well as hypothesize about his motivations, frustrations, and signals that indicated he was a producer. This section further states that the person has an “active IG” on which he also shared harmful conspiracy theories.

Facebook notes that the individual bought a lot of ads and includes screenshots of several of his ads for various health supplements. A search for the products named led the Daily Dot to multiple websites, all run by the same individual. One of the products includes his title and surname. His name led to a podcast, a business website, the business’ Facebook page, and his personal Instagram account. On a live broadcast of the podcast on Friday, he theorized that the Queen of England was actually dead, and possibly a reptilian humanoid.

“I actually can get fully on board with the queen being gone because I have ultimate disdain for the lizard,” he said.

Each of the sites and pages includes photos and video of the same person. He’s also listed as one of the company’s directors on public documents and as one of the owners on its website.

His personal Facebook page and the QAnon group he launched appear to have been deleted. The business’ Facebook page, launched a decade ago, remains active. One reviewer on the page touted the same type of product, an anti-parasitic supplement, that Facebook included in its analysis of the producer’s activity.

Although Facebook’s own document acknowledges that this individual has an Instagram account where he shares content similar to what he posted in the banned group, his Instagram page remains active as of this writing. (It’s not known whether he had a secondary account that may have been banned.) On Instagram, in recent years, he’s almost exclusively posted conspiracy theories, falsely claiming that vaccines cause COVID-19 and that Trump won the 2020 election. The oldest post on the page is from 2014.

No one has argued that Facebook or any other social media platform faces an easy task in eradicating conspiracy theories like QAnon. But many can now see that Facebook’s public stances often don’t match its behind-the-scenes actions.

This story is based on Frances Haugen’s disclosures to the Securities and Exchange Commission, which were also provided to Congress in redacted form by her legal team. The redacted versions received by Congress were obtained by a consortium of news organizations, including the Daily Dot, the New York Times, Politico, the Atlantic, Wired, the Verge, CNN, Gizmodo, and dozens of other outlets.