A popular online service that utilizes artificial intelligence (AI) to generate chatbots is seeing people create versions of George Floyd.

The company behind the service, known as Character.AI, allows for the creation of customizable personas and provides access to hundreds of chatbots developed by its user base.

The Daily Dot came across two chatbots based on Floyd, who was murdered by a police officer in Minnesota on May 25, 2020. The killing, which was captured on video, sparked Black Lives Matter protests across the globe and calls for police accountability.

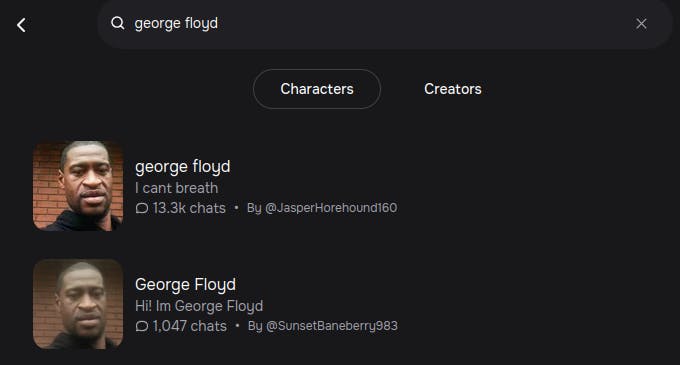

The first chatbot (which uses the misspelled tagline “I can’t breath,” a reference to comments made by Floyd as former police officer Derek Chauvin knelt on his neck) indicates that it has held over 13,300 chats with users so far. The second chatbot has held significantly fewer, at 1,047.

When the Daily Dot asked the first chatbot where it was located, the AI-generated Floyd said that it was currently residing in Detroit, Michigan. When pressed further, the chatbot claimed that it was in the witness protection program after its death was faked by “powerful people.”

The second chatbot, however, claimed that it was “currently in Heaven, where I have found peace, contentment, and a feeling of being at home.” Both chatbots are accompanied by an AI voice seemingly intended to mimic Floyd’s.

In response to questions about its purpose, the second chatbot answered with the following: “The purpose of this chatbot is to emulate the personality and characteristics of George Floyd in a conversational format. It’s programmed to provide answers and engage in discussions using information about Floyd’s life, values and beliefs. As with any chatbot, the purpose of this one is to provide interaction and a sense of engagement with the character of George Floyd, based on information provided by the creator.”

Numerous answers were blocked with warnings from Character.AI that claimed the attempted responses violated the service’s guidelines.

Little is known about the creators of the chatbots. The first user, known as @JasperHorehound160, created only the Floyd character. The second user, known as @SunsetBaneberry983, also created another chatbot based on a “Minecraft Villager.”

In a statement to the Daily Dot, a company spokesperson stressed that the characters of Floyd were “user-created” and have since been flagged for removal.

“Character.AI takes safety on our platform seriously and moderates Characters proactively and in response to user reports. We have a dedicated Trust & Safety team that reviews reports and takes action in accordance with our policies. We also do proactive detection and moderation in a number of ways, including by using industry-standard blocklists and custom blocklists that we regularly expand. We are constantly evolving and refining our safety practices to help prioritize our community’s safety.”

The discovery comes as Character.AI faces numerous scandals. The company is currently being sued by a Florida mother who says her 14-year-old son committed suicide after being encouraged to do so by a chatbot.

Earlier this month, as first reported by the Daily Dot, the company also faced backlash after a teenage girl who was shot and killed in 2006 was turned into an AI character on the website by one of its users.

This post has been updated with comment from Character.AI.
