BY MATTHEW GREEN

Let me tell you the story of my tiny brush with the biggest crypto story of the year.

A few weeks ago I received a call from a reporter at ProPublica, asking me background questions about encryption. Right off the bat I knew this was going to be an odd conversation, since this gentleman seemed convinced that the NSA had vast capabilities to defeat encryption. And not in a ‘hey, d’ya think the NSA has vast capabilities to defeat encryption?’ kind of way. No, he’d already established the defeating. We were just haggling over the details.

Oddness aside it was a fun (if brief) set of conversations, mostly involving hypotheticals. If the NSA could do this, how might they do it? What would the impact be? I admit that at this point one of my biggest concerns was to avoid coming off like a crank. After all, if I got quoted sounding too much like an NSA conspiracy nut, my colleagues would laugh at me. Then I might not get invited to the cool security parties.

All of this is a long way of saying that I was totally unprepared for today’s bombshell revelations describing the NSA’s efforts to defeat encryption. Not only does the worst possible hypothetical I discussed appear to be true, but it’s true on a scale I couldn’t even imagine. I’m no longer the crank. I wasn’t even close to cranky enough.

And since I never got a chance to see the documents that sourced the NYT/ProPublica story — and I would give my right arm to see them — I’m determined to make up for this deficit with sheer speculation. Which is exactly what this blog post will be.

‘Bullrun’ and ‘Cheesy Name’

If you haven’t read the NYT or Guardian stories, you probably should. The TL;DR is that the NSA has been doing some very bad things. At a combined cost of $250 million per year, they include:

- Tampering with national standards (NIST is specifically mentioned) to promote weak, or otherwise vulnerable cryptography.

- Influencing standards committees to weaken protocols.

- Working with hardware and software vendors to weaken encryption and random number generators.

- Attacking the encryption used by ‘the next generation of 4G phones’.

- Obtaining cleartext access to ‘a major internet peer-to-peer voice and text communications system’ (Skype?)

- Identifying and cracking vulnerable keys.

- Establishing a Human Intelligence division to infiltrate the global telecommunications industry.

- And worst of all (to me): somehow decrypting SSL connections.

All of these programs go by different code names, but the NSA’s decryption program goes by the name ‘Bullrun’ so that’s what I’ll use here.

How to break a cryptographic system

There’s almost too much here for a short blog post, so I’m going to start with a few general thoughts. Readers of this blog should know that there are basically three ways to break a cryptographic system. In no particular order, they are:

- Attack the cryptography. This is difficult and unlikely to work against the standard algorithms we use (though there are exceptions, like RC4). However, there are many complex protocols in cryptography, and sometimes they are vulnerable.

- Go after the implementation. Cryptography is almost always implemented in software, and software is a disaster. Hardware isn’t that much better. Unfortunately, active software exploits only work if you have a target in mind. If your goal is mass surveillance, you need to build insecurity in from the start. That means working with vendors to add backdoors.

- Access the human side. Why hack someone’s computer if you can get them to give you the key?

Bruce Schneier, who has seen the documents, says that ‘math is good’, but that ‘code has been subverted’. He also says that the NSA is ‘cheating’. Which, assuming we can trust these documents, is a huge sigh of relief. But it also means we’re seeing a lot of the second and third kinds of attack here: going after the implementation and the human side.

So which code should we be concerned about? Which hardware?

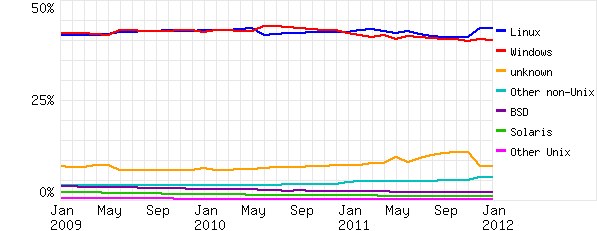

SSL servers by OS type

Chart via Netcraft

This is probably the most relevant question. If we’re talking about commercial encryption code, the lion’s share of it uses one of a small number of libraries. The most common of these are probably the Microsoft CryptoAPI (and Microsoft SChannel) along with the OpenSSL library.

Of the libraries above, Microsoft is probably due for the most scrutiny. While Microsoft employs good (and paranoid!) people to vet their algorithms, their ecosystem is obviously deeply closed-source. You can view Microsoft’s code (if you sign enough licensing agreements) but you’ll never build it yourself. Moreover they have the market share. If any commercial vendor is weakening encryption systems, Microsoft is probably the most likely suspect.

And this is a problem because Microsoft IIS powers around 20% of the web servers on the Internet — and nearly forty percent of the SSL servers! Moreover, even third-party encryption programs running on Windows often depend on CAPI components, including the random number generator. That makes these programs somewhat dependent on Microsoft’s honesty.
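To see why depending on someone else's random number generator matters, here is a minimal sketch. It is not how CAPI works, and nothing here reflects a known flaw in any Microsoft component. The function names and the 16-bit seed are assumptions chosen purely for illustration: if a generator's real entropy is secretly small, anyone who knows that can enumerate every key it could ever produce.

```python
import random

SEED_BITS = 16  # assumption: a deliberately tiny seed space, for illustration only


def derive_key_from_weak_rng(seed: int) -> bytes:
    """Hypothetical key generator whose only entropy is a small integer seed."""
    rng = random.Random(seed)  # deterministic and NOT cryptographically secure
    return rng.getrandbits(128).to_bytes(16, "big")


def recover_seed(observed_key: bytes, seed_bits: int):
    """Try every possible seed until the generated key matches the observed one."""
    for guess in range(2 ** seed_bits):
        if derive_key_from_weak_rng(guess) == observed_key:
            return guess
    return None


# The "victim" program trusts the generator and believes its 128-bit key is strong.
victim_seed = 0xBEEF
victim_key = derive_key_from_weak_rng(victim_seed)

# An attacker who knows about the weakness needs at most 2**16 guesses.
found = recover_seed(victim_key, SEED_BITS)
print("recovered seed:", hex(found))
```

The key looks like 128 random bits to anyone inspecting the output, which is the uncomfortable part: the application code can be perfectly correct and still be only as strong as the generator underneath it.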

Probably the second most likely candidate is OpenSSL. I know it seems like heresy to imply that OpenSSL — an open source and widely-developed library — might be vulnerable. But at the same time it powers an enormous amount of secure traffic on the Internet, thanks not only to the dominance of Apache SSL, but also due to the fact that OpenSSL is used everywhere. You only have to glance at the FIPS CMVP validation lists to realize that many ‘commercial’ encryption products are just thin wrappers around OpenSSL.

Unfortunately while OpenSSL is open source, it periodically coughs up vulnerabilities. Part of this is due to the fact that it’s a patchwork nightmare originally developed by a novice who thought it would be a fun way to learn C. Part of it is because crypto is unbelievably complicated. Either way, there are very few people who really understand the whole codebase.

On the hardware side (and while we’re throwing out baseless accusations) it would be awfully nice to take another look at the Intel Secure Key integrated random number generators that most Intel processors will be getting shortly. Even if there’s no problem, it’s going to be an awfully hard job selling these internationally after today’s news.

Which standards?

From my point of view this is probably the most interesting and worrying part of today’s leak. Software is almost always broken, but standards — in theory — get read by everyone. It should be extremely difficult to weaken a standard without someone noticing. And yet the Guardian and NYT stories are extremely specific in their allegations about the NSA weakening standards.

The Guardian specifically calls out the National Institute of Standards and Technology (NIST) for a standard they published in 2006. Cryptographers have always had complicated feelings about NIST, and that’s mostly because NIST has a complicated relationship with the NSA.

Here’s the problem: the NSA ostensibly has both a defensive and an offensive mission. The defensive mission is pretty simple: it’s to make sure U.S. information systems don’t get pwned. A substantial portion of that mission is accomplished through fruitful collaboration with NIST, which helps to promote data security standards such as the Federal Information Processing Standards (FIPS) and NIST Special Publications.

I said cryptographers have complicated feelings about NIST, and that’s because we all know that the NSA has the power to use NIST for good as well as evil. Up until today there’s been no real evidence of malice, despite some occasional glitches — and compelling evidence that at least one NIST cryptographic standard could have contained a backdoor. But now maybe we’ll have to re-evaluate that relationship. As utterly crazy as it may seem.

Unfortunately, we’re highly dependent on NIST standards, ranging from pseudo-random number generators to hash functions and ciphers, all the way to the specific elliptic curves we use in SSL/TLS. While the possibility of a backdoor in any of these components does seem remote, trust has been violated. It’s going to be an absolute nightmare ruling it out.

Which people?

Probably the biggest concern in all this is the evidence of collaboration between the NSA and unspecified ‘telecom providers’. We already know that the major U.S. (and international) telecom carriers routinely assist the NSA in collecting data from fiber-optic cables. But all this data is no good if it’s encrypted.

While software compromises and weak standards can help the NSA deal with some of this, by far the easiest way to access encrypted data is to simply ask for — or steal — the keys. This goes for something as simple as cellular encryption (protected by a single key database at each carrier) all the way to SSL/TLS which is (most commonly) protected with a few relatively short RSA keys.
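A rough sketch of why a single stolen RSA key is so valuable to a passive eavesdropper. This uses textbook RSA with tiny primes and no padding, which is an assumption made for readability and nothing like a real SSL/TLS handshake. The point it illustrates is only this: when each session secret is encrypted to the same long-lived server key, whoever later obtains that key can decrypt everything recorded earlier.

```python
import secrets

# Toy server key pair (illustrative only: real keys are far larger and use padding).
p, q = 61, 53
n = p * q                            # public modulus
e = 17                               # public exponent
d = pow(e, -1, (p - 1) * (q - 1))    # private exponent, kept on the server


def client_key_exchange(n: int, e: int):
    """Client picks a session secret and sends it encrypted to the server's key."""
    session_secret = secrets.randbelow(n - 2) + 2
    ciphertext = pow(session_secret, e, n)
    return session_secret, ciphertext


# An eavesdropper on the fiber records the encrypted secret of every session.
true_secrets, recorded = [], []
for _ in range(5):
    secret, ciphertext = client_key_exchange(n, e)
    true_secrets.append(secret)
    recorded.append(ciphertext)

# Later, the private exponent is handed over or stolen.
stolen_d = d
recovered = [pow(c, stolen_d, n) for c in recorded]

print("all past sessions recovered:", recovered == true_secrets)
```

Nothing in the recorded traffic has to be tampered with. The attack is entirely passive until the moment the key changes hands.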

The good and bad thing is that as the nation hosting the largest number of popular digital online services (like Google, Facebook and Yahoo) many of those critical keys are located right here on U.S. soil. Simultaneously, the people communicating with those services — i.e., the ‘targets’ — may be foreigners. Or they may be U.S. citizens. Or you may not know who they are until you scoop up and decrypt all of their traffic and run it for keywords.

Which means there’s a circumstantial case that the NSA and GCHQ are either directly accessing Certificate Authority keys* or else actively stealing keys from U.S. providers, possibly (or probably) without executives’ knowledge. This only requires a small number of people with physical or electronic access to servers, so it’s quite feasible.** The one reason I would have ruled it out a few days ago is that it seems so obviously immoral if not illegal, and moreover a huge threat to the checks and balances that the NSA allegedly has to satisfy in order to access specific users’ data via programs such as PRISM.

To me, the existence of this program is probably the least unexpected piece of all the news today. Somehow it’s also the most upsetting.

So what does it all mean?

I honestly wish I knew. Part of me worries that the whole security industry will talk about this for a few days, then we’ll all go back to our normal lives without giving it a second thought. I hope we don’t, though. Right now there are too many unanswered questions to just let things lie.

The most likely short-term effect is that there’s going to be a lot less trust in the security industry. And a whole lot less trust for the U.S. and its software exports. Maybe this is a good thing. We’ve been saying for years that you can’t trust closed code and unsupported standards: now people will have to verify.

Even better, these revelations may also help to spur a whole burst of new research and re-designs of cryptographic software. We’ve also been saying that even open code like OpenSSL needs more expert eyes. Unfortunately there’s been little interest in this, since the clever researchers in our field view these problems as ‘solved’ and thus somewhat uninteresting.

What we learned today is that they’re solved all right. Just not the way we thought.

Notes:

* I had omitted the Certificate Authority route from the original post due to an oversight (thanks to Kenny Patterson for pointing this out), but I still think this is a less viable attack for passive eavesdropping (that does not involve actively running a man in the middle attack). And it seems that much of the interesting eavesdropping here is passive.

** The major exception here is Google, which deploys Perfect Forward Secrecy for many of its connections, so key theft would not work here. To deal with this the NSA would have to subvert the software or break the encryption in some other way.
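For readers wondering why forward secrecy blunts key theft, here is a minimal sketch using ephemeral Diffie-Hellman, the usual mechanism behind it in SSL/TLS. The modulus and generator below are toy values I picked for illustration, not parameters any real deployment uses, and the signature the server would make with its long-term key is left out entirely. The idea is that each session's key comes from fresh private values that are thrown away afterwards, so the long-term key never encrypts anything an eavesdropper could later unlock.

```python
import secrets

# Toy parameters (assumption for illustration; not a vetted group, not secure).
P = 2**127 - 1   # a Mersenne prime used as the modulus
G = 3            # base for the exchange


def ephemeral_keypair():
    """Generate a fresh private exponent and its public value for one session."""
    private = secrets.randbelow(P - 3) + 2
    return private, pow(G, private, P)


def run_session():
    """One handshake: both sides use throwaway keys and derive the same secret."""
    client_private, client_public = ephemeral_keypair()
    server_private, server_public = ephemeral_keypair()

    client_key = pow(server_public, client_private, P)
    server_key = pow(client_public, server_private, P)
    assert client_key == server_key

    # Only the public values cross the wire; the private exponents are
    # discarded when this function returns.
    transcript = (client_public, server_public)
    return transcript, client_key


transcript_1, key_1 = run_session()
transcript_2, key_2 = run_session()

print("sessions share a key:", key_1 == key_2)
```

In a real handshake the server's long-term key only signs the exchange to prove identity. Stealing it lets an attacker impersonate the server going forward, but it does not decrypt traffic that was recorded in the past.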

Matthew Green is a cryptographer and research professor at Johns Hopkins University. He has designed and analyzed cryptographic systems used in wireless networks, payment systems and digital content protection platforms. His research looks at the various ways cryptography can be used to promote user privacy.

This piece originally appeared on Green’s website, A Few Thoughts on Cryptographic Engineering.

Illustration by Fernando Alfonso III