One of the cornerstones of scientific advancement is reproducibility. If you claim certain results, other scientists must be able to get the same results when they perform the same experiment. If they don’t, it casts doubt on the original results.

A group of 270 psychology researchers, called the Open Science Collaboration, attempted to do just that with 100 psychology studies. They were only able to replicate the results of 36 of them. Moreover, the studies they chose were highly regarded and informed what psychologists understood about personality, learning, memory and relationships, according to The New York Times. The results of the study are published open-access in the journal Science.

This news floats on a long stream of bad press for science. As science journalist Ed Yong wrote in The Atlantic,

Psychology has been recently rocked by several high-profile controversies, including: the publication of studies that documented impossible effects like precognition, failures to replicate the results of classic textbook experiments, and some prominent cases of outright fraud.

But that doesn’t mean we should just dismiss an entire field of study out of hand.

The problem

The study’s main outcome measure (the main result the team was trying to replicate) was the p-value. A p-value is the probability of seeing data at least as extreme as yours if there were no real effect and only random noise at work. The lower the value, the harder the result is to explain by chance alone. P-values at or below 0.05 are generally considered “statistically significant” and worthy of publication in a scientific journal. Ninety-seven percent of the original studies on the chopping block reported statistically significant p-values, but only 36 percent of the replication attempts did.
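One way to make the p-value concrete is to count how often pure chance would produce a result at least as lopsided as the one observed. The sketch below is only an illustration and is not taken from the study: it assumes a hypothetical coin-flip experiment, and the function name `two_sided_p_value` is made up for this example.

```python
from math import comb


def two_sided_p_value(flips: int, heads: int) -> float:
    """Chance that a fair coin lands at least as far from 50/50 as observed."""
    # Distance from an even split, doubled so everything stays in integers.
    observed_gap = abs(2 * heads - flips)
    # Count every sequence of flips whose heads total is at least that lopsided.
    extreme = sum(
        comb(flips, k)
        for k in range(flips + 1)
        if abs(2 * k - flips) >= observed_gap
    )
    return extreme / 2 ** flips


if __name__ == "__main__":
    for heads in (60, 61):
        print(heads, "heads out of 100:", round(two_sided_p_value(100, heads), 4))
```

In this toy setup, 60 heads out of 100 gives a p-value of roughly 0.057, just missing the 0.05 cutoff, while 61 heads slips under it. A single extra coin flip going the other way separates a “publishable” result from a “failed” one.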

One of the problems, as we’ve written about previously, is publication bias. There’s a sense in scientific research that only positive results are worth reporting, so researchers tend to only send in their “successful” experiments for publication. Journals themselves also tend to accept exciting positive results and reject boring, ambiguous data.

This incentivizes p-hacking, or attempting to manipulate the data such that it yields a positive result. And it’s ridiculously easy to do—so easy that researchers aren’t always aware they’re doing it. Psychology may be especially vulnerable to p-hacking because it’s an inherently messy endeavor. Experiments involve probing the brain indirectly through tests with humans and animals, and then interpreting those results with imperfect statistical measures.

A psychologist advancing a particular theory based on a large framework of previous studies will have a reasonable idea of what results to expect from an experiment. So if the results don’t line up with expectations, it seems reasonable to question the methods. “Did I use the right test? This one person is an outlier, maybe I should exclude that data point.”
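A rough simulation shows how much that kind of second-guessing can matter. Everything in the sketch below is an assumption made for illustration, not the procedure of any real study: the data are pure noise with a known spread, the test is a simple z-test, and the hypothetical researcher drops up to three “outliers” that cut against the trend, retesting after each one.

```python
import math
import random


def z_test_p(sample):
    """Two-sided p-value for 'the true mean is 0', assuming N(0, 1) noise."""
    z = sum(sample) / math.sqrt(len(sample))
    return math.erfc(abs(z) / math.sqrt(2))


def hacked_p(sample, max_drops=3):
    """Retest after removing points that pull against the observed trend."""
    data = sorted(sample)
    p = z_test_p(data)
    for _ in range(max_drops):
        if p <= 0.05:
            break  # "significant" now, so the researcher stops looking
        if sum(data) > 0:
            data.pop(0)  # trend is positive: drop the most negative point
        else:
            data.pop()  # trend is negative: drop the most positive point
        p = z_test_p(data)
    return p


def false_positive_rates(trials=2000, n=30, seed=0):
    """Share of no-effect experiments declared significant, honest vs. hacked."""
    rng = random.Random(seed)
    honest = hacked = 0
    for _ in range(trials):
        sample = [rng.gauss(0, 1) for _ in range(n)]  # there is no real effect
        honest += z_test_p(sample) <= 0.05
        hacked += hacked_p(sample) <= 0.05
    return honest / trials, hacked / trials


if __name__ == "__main__":
    honest_rate, hacked_rate = false_positive_rates()
    print("honest analysis:", honest_rate)
    print("with outlier dropping:", hacked_rate)
```

The honest analysis should flag about one experiment in 20, as the 0.05 threshold promises. The flexible analysis can only do worse, because every dataset that passes honestly also passes after the extra attempts.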

That the field of psychology is making a concerted effort to replicate studies demonstrates science at its best. Science is supposed to be self-questioning and self-correcting. The results of this study are disheartening, but they highlight the need for more rigorous methods in the social sciences.

The (proposed) solutions

Researcher and blogger Neuroskeptic notes that psychology isn’t the only field with a problem—the reproducibility of cancer research has also been questioned.

“The problem is formal and systemic. In a nutshell, false-positive results will be a problem in any field where results are either ‘positive’ or ‘negative’, and scientists are rewarded more for publishing positive ones,” he writes. Eliminating publication bias would go a long way toward reducing the number of false positives.
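Some back-of-the-envelope arithmetic shows why rewarding only positive results is a problem. The numbers below are invented for illustration and do not come from Neuroskeptic or the study: suppose one in 10 tested hypotheses is actually true, and a typical experiment has a 50 percent chance of detecting a real effect.

```python
def false_share_of_published_positives(prior_true, power, alpha=0.05):
    """Fraction of positive results that are false alarms.

    prior_true: assumed share of tested hypotheses that are really true
    power:      assumed chance an experiment detects a real effect
    alpha:      significance threshold, i.e. the false-alarm rate per test
    """
    true_positives = prior_true * power
    false_positives = (1 - prior_true) * alpha
    return false_positives / (true_positives + false_positives)


if __name__ == "__main__":
    share = false_share_of_published_positives(prior_true=0.10, power=0.50)
    print(f"{share:.0%} of positive results would be false")
```

Under those assumed numbers, false alarms make up nearly half of all positive results. If journals print only the positives, that is the mix readers see, with none of the negative results that would put it in context.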

WIRED thinks the Internet could be the solution. As part of the project, the Open Science Collaboration built free, open-source software called the Open Science Framework, where researchers could enter their materials, designs, and data. That framework, journalist Katie Palmer writes, would make future endeavors to replicate results much easier. If other researchers could scrutinize the experimental design of a study before it’s even carried out, they could curtail some of the p-hacking prevalent in the field.

Another solution may be to move away from scientific journals’ “impact factors.” The impact factor isn’t a term that makes its way into the mainstream a lot, but it is something that many scientists consider important. Impact factors are statistical measures of a journal’s clout, essentially. Prestigious journals like Science and Nature have very high impact factors, leading researchers and laypeople alike to automatically revere any studies they publish. But a staggering number of studies have been retracted from those journals as well, casting doubt on the usefulness of impact factors.

Perhaps an alternative “replication-index” where the strength of a study is measured not by the journal it’s published in, but by the number of studies that replicated its results, is more appropriate.

But whatever the solution, the field of psychology has taken an important first step in admitting and proving that it has a problem. In the meantime, try brushing up on your science skills with our guide to understanding science on the Internet.

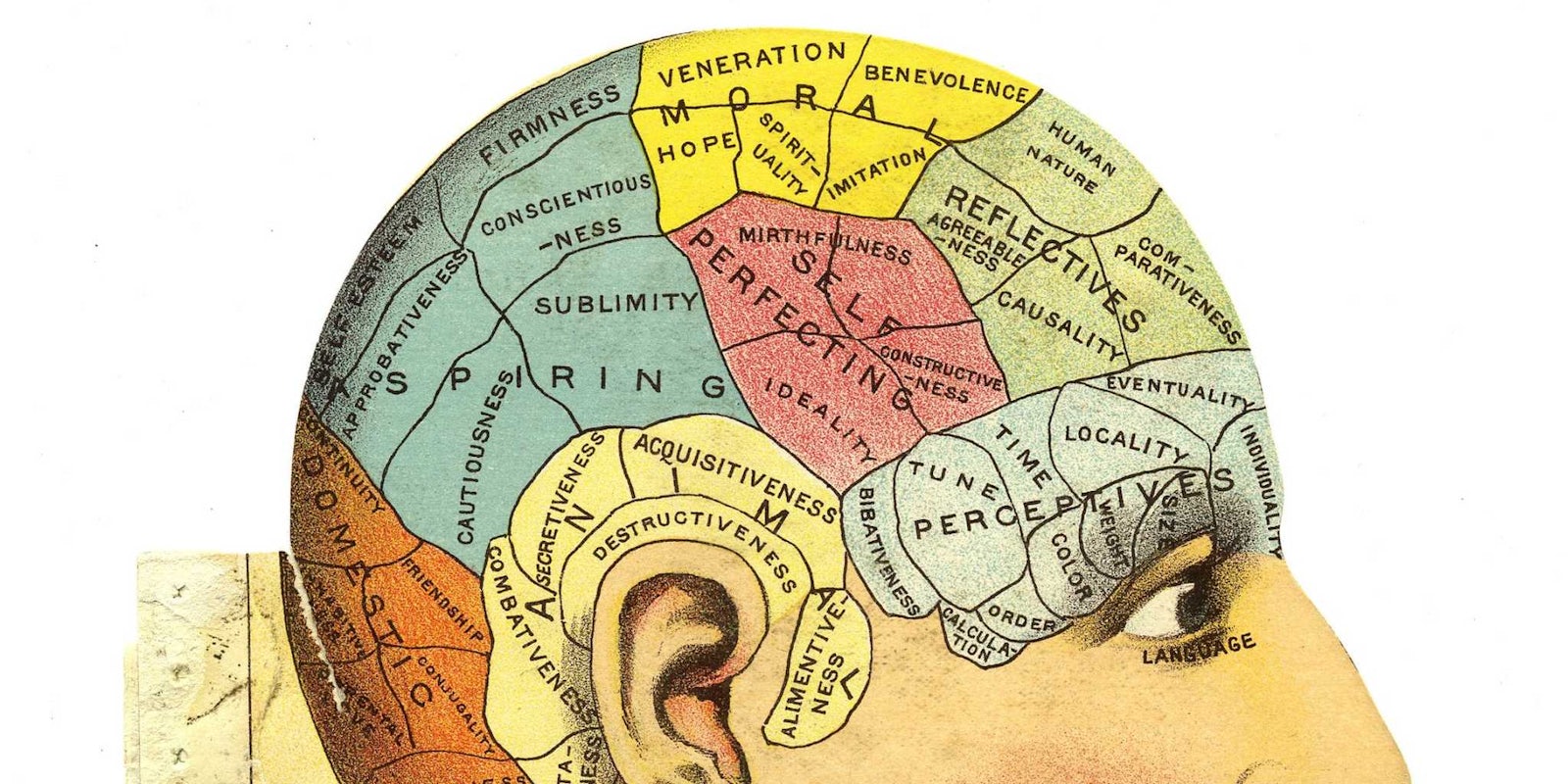

Image via William Creswell/Flickr (CC BY 2.0)