Human beings learn through play. Artificial intelligences seem to learn in the same way. So we really ought to stop feeding them video games, lest they beat all of our high scores.

DeepMind, a company acquired by Google in 2014, has built an artificial intelligence that taught itself to play 49 different games for the Atari 2600 console. The games are primitive next to modern fare (the Atari 2600 was released in 1977), but the fact remains that the computer was given only the pixels on the screen and the goal of getting a high score. From there, it taught itself to dominate these games.

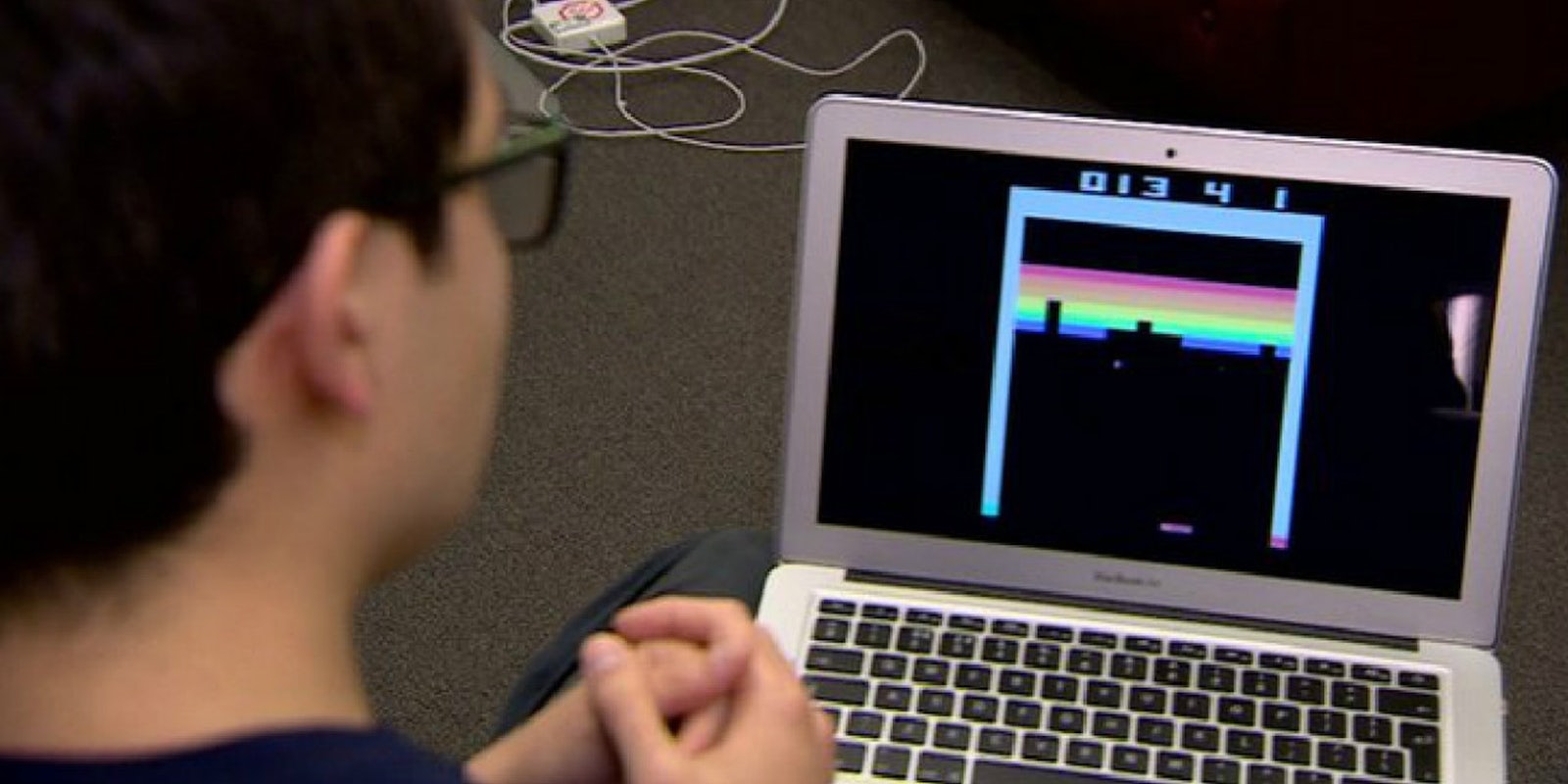

BBC News posted a video that offers a layman's explanation of the study, which was published in the academic journal Nature. It also serves as proof that yet another stage in the evolution of the AI machines that will someday kill us all is now complete.

The essence of play is learning from experimentation. The serious games industry is an expression of the widespread recognition that video games are a valid educational tool. With the growing number of game-building tools and people who know how to use them, it’s easier than ever for educators to design their own educational games or commission them through third parties.

To build an AI that outperforms human players in the majority of the 49 games it taught itself to play, the researchers behind the project used "reinforcement learning," a technique that mimics the way humans and some animals learn: by trying actions, seeing which ones earn a reward, and doing more of what works.
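For the curious, here is a rough idea of what reinforcement learning looks like in code. DeepMind's system, which the Nature paper calls a deep Q-network, paired a method called Q-learning with a neural network that reads raw screen pixels. The sketch below is a far simpler, hypothetical illustration of the same core idea: tabular Q-learning on a made-up five-cell "corridor game" (the game, the function names, and the parameter values are all assumptions for illustration, not DeepMind's code). The agent is never told which way to go; it learns purely from which moves eventually earn a point.

```python
import random

# Hypothetical toy "game": a corridor of 5 cells. The agent starts in cell 0
# and scores 1 point for reaching cell 4; every other move scores 0.
N_STATES = 5
ACTIONS = (-1, +1)  # step left or step right
GOAL = N_STATES - 1


def step(state, action):
    """Apply an action; return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)  # walls at both ends
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning; alpha, gamma, epsilon are illustrative choices."""
    rng = random.Random(seed)
    # q[s][a] estimates the future reward of taking action a in state s.
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore occasionally; otherwise exploit the best-known action
            # (ties broken at random so the agent doesn't get stuck early on).
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                best = max(q[state])
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: nudge the estimate toward the reward plus
            # the discounted value of the best move from the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Return the move the trained agent prefers in each non-goal cell."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[s][i])]
            for s in range(GOAL)]


if __name__ == "__main__":
    q_table = train()
    print(greedy_policy(q_table))
```

After training, the agent's preferred move in every cell should be a step to the right, toward the point. DeepMind's version swaps the lookup table for a neural network because an Atari screen has far too many possible pixel combinations to list in a table.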

Now imagine what happens when we have an AI that can not only learn complex tasks efficiently, but also process language well enough to soak up knowledge on the Web.

We're going to need Matthew Broderick to save us someday, just as he did in WarGames. Failing that, a studio needs to reboot the film and produce a backup savior for humankind.

H/T VentureBeat | Screengrab via BBC