Trying to tell how a person feels by simply looking at their face can be hit-or-miss, but now an artificial intelligence project claims to be able to do just that.

The team behind Microsoft’s Project Oxford, an initiative that aims to arm developers with easy-to-use machine learning tools that let their apps identify words, sounds, and images, recently announced plans to make beta versions of its entire portfolio available to the public, including a tool that can recognize emotions from photographs.

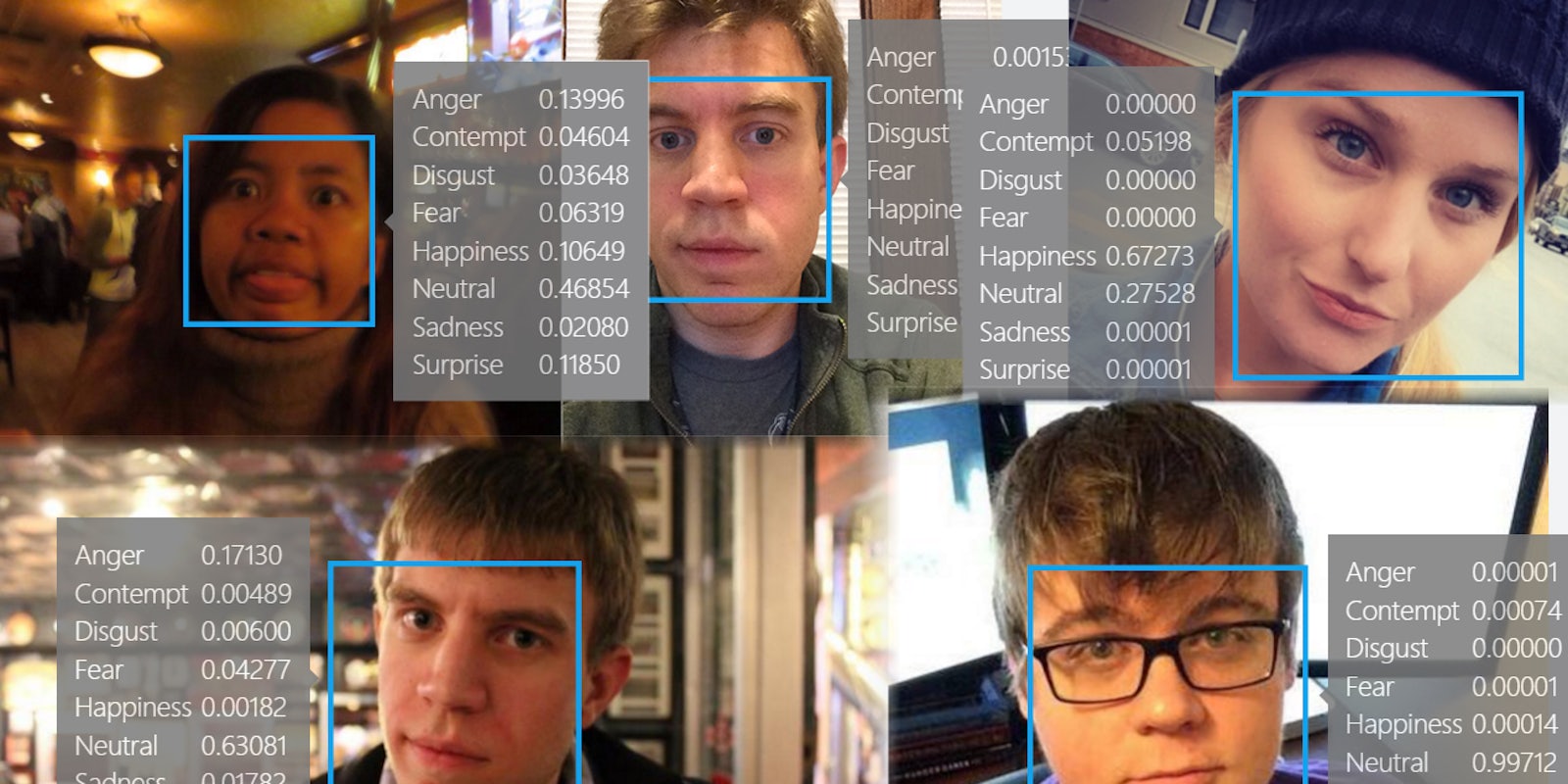

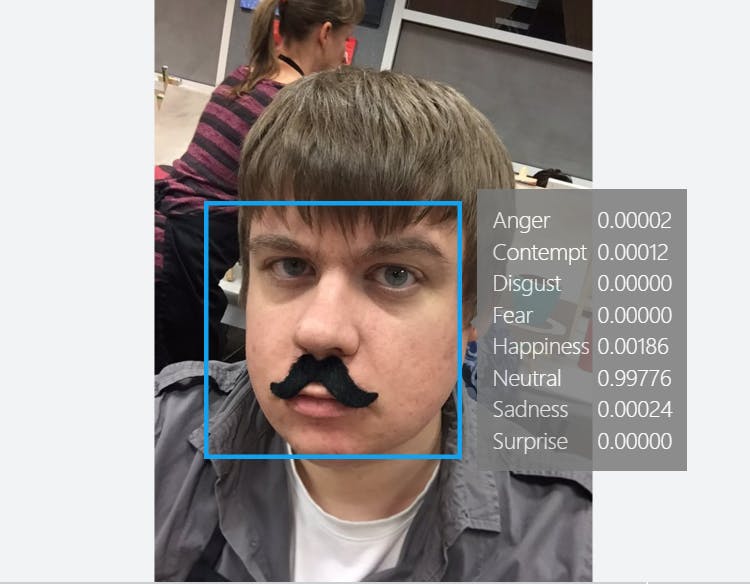

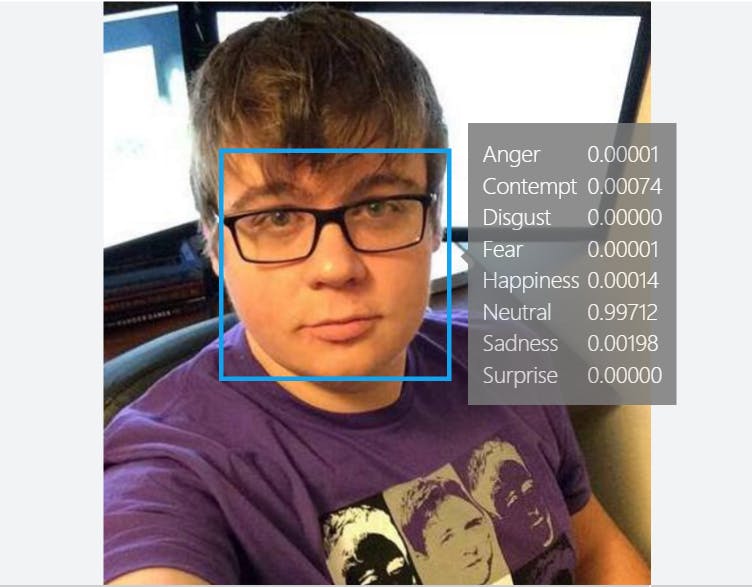

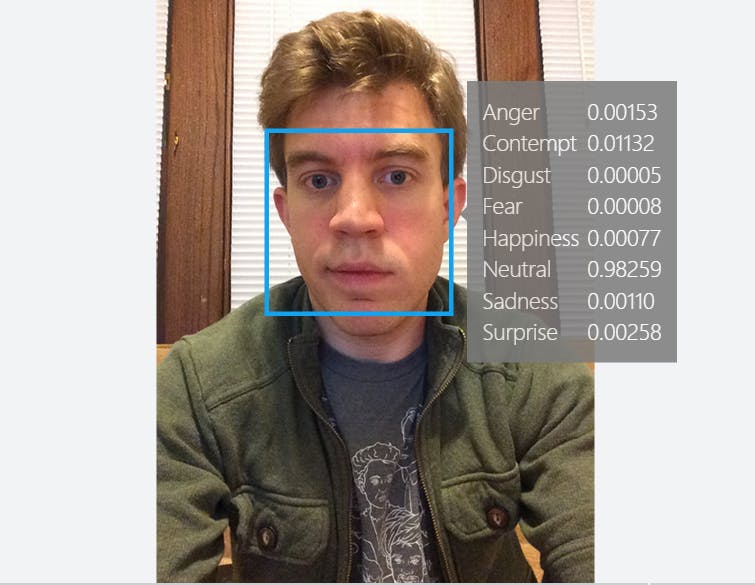

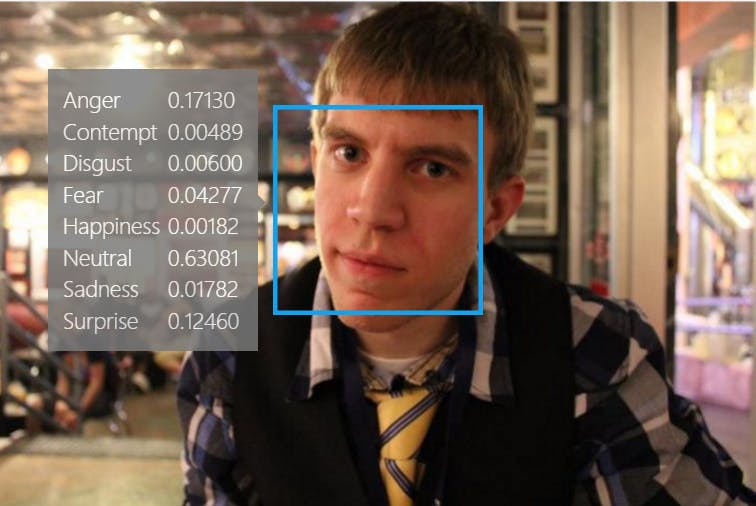

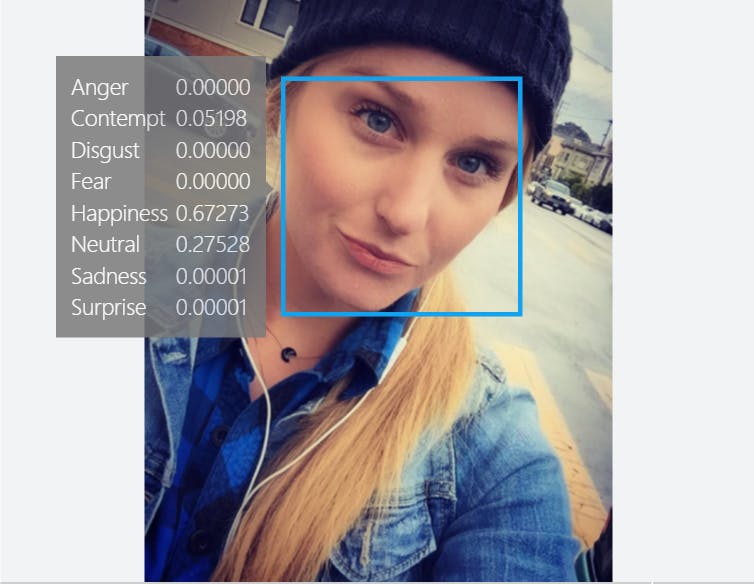

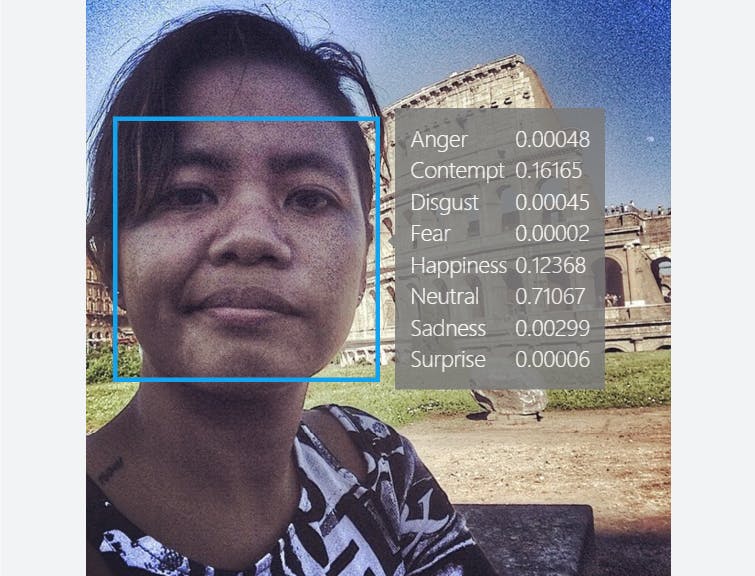

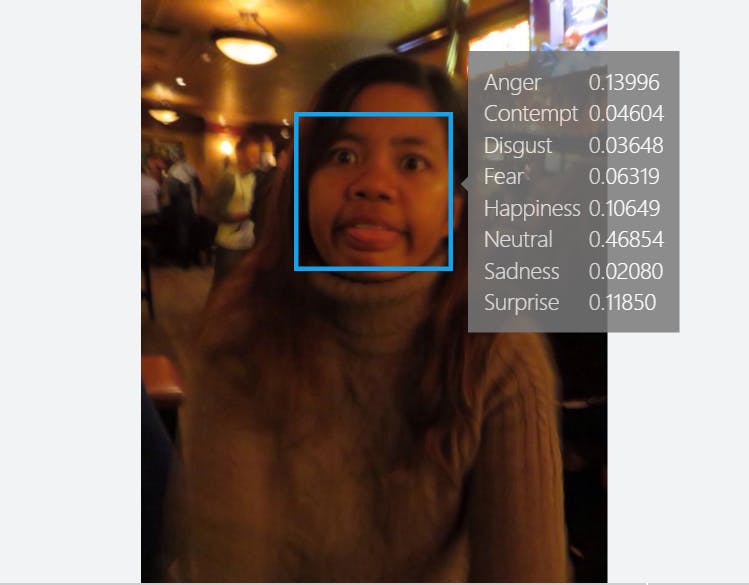

The emotion tool can be used to create an app or a project that has the ability to “recognize eight core emotional states—anger, contempt, fear, disgust, happiness, neutral, sadness or surprise—based on universal facial expressions that reflect those feelings.”

For us everyday, non-developer folk, it can be used to evaluate the selfies we and our friends have shared online. While Project Oxford made sure to clarify that “recognition is experimental, and not always accurate,” we find the tool’s results rather enlightening and useful for much-needed self-reflection.

Here’s what happened when the Daily Dot’s tech team ran their photos through the tool’s demo portal:

You can run your own photos through the emotion tool demo beginning today. Make sure your images are in JPEG, PNG, GIF (first frame), or BMP format, larger than 36 x 36 pixels, and smaller than 4MB. Beta versions of the other tools will be made available by the end of the year for a limited free trial.
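
For the developers in the room, the upload limits above can be sketched as a quick pre-flight check before a photo is sent anywhere. This is only a rough sketch, not Microsoft's code: the names `ImageInfo` and `check_image` are made up for illustration, "4MB" is assumed to mean 4 x 1024 x 1024 bytes, and the size rule is assumed to mean that both sides must be longer than 36 pixels.

```python
from dataclasses import dataclass

# Limits taken from the demo's stated requirements.
ALLOWED_FORMATS = {"JPEG", "PNG", "GIF", "BMP"}
MIN_SIDE_PX = 36                 # assumption: each side must exceed 36 pixels
MAX_BYTES = 4 * 1024 * 1024      # assumption: "4MB" read as 4 * 1024 * 1024 bytes


@dataclass
class ImageInfo:
    """Hypothetical container for the facts we need about a photo."""
    fmt: str          # e.g. "PNG"
    width: int        # pixels
    height: int       # pixels
    size_bytes: int   # file size on disk


def check_image(info: ImageInfo) -> list[str]:
    """Return a list of problems; an empty list means the photo looks acceptable."""
    problems = []
    if info.fmt.upper() not in ALLOWED_FORMATS:
        problems.append(f"unsupported format: {info.fmt}")
    if info.width <= MIN_SIDE_PX or info.height <= MIN_SIDE_PX:
        problems.append(f"too small: {info.width} x {info.height} pixels")
    if info.size_bytes >= MAX_BYTES:
        problems.append(f"too large: {info.size_bytes} bytes")
    return problems


if __name__ == "__main__":
    selfie = ImageInfo(fmt="png", width=640, height=480, size_bytes=200_000)
    print(check_image(selfie) or "looks fine")
```

In practice the format and dimensions would come from an image library or the file's header; they are passed in directly here to keep the sketch free of dependencies.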

H/T Microsoft Blog / Photos via The Daily Dot