Fable is in hot water after the book club app's AI feature advised one user who read mostly Black authors in 2024 to read more works by white authors. Following demands for an explanation from their user base, the company's head of product posted a video response apologizing and admitting that the reader summary feature is AI-generated.

This admission, along with an action plan that did not include ditching the AI, resulted in many users declaring their intent to delete the app and never return.

'Don't forget to surface for the occasional white author, okay?'

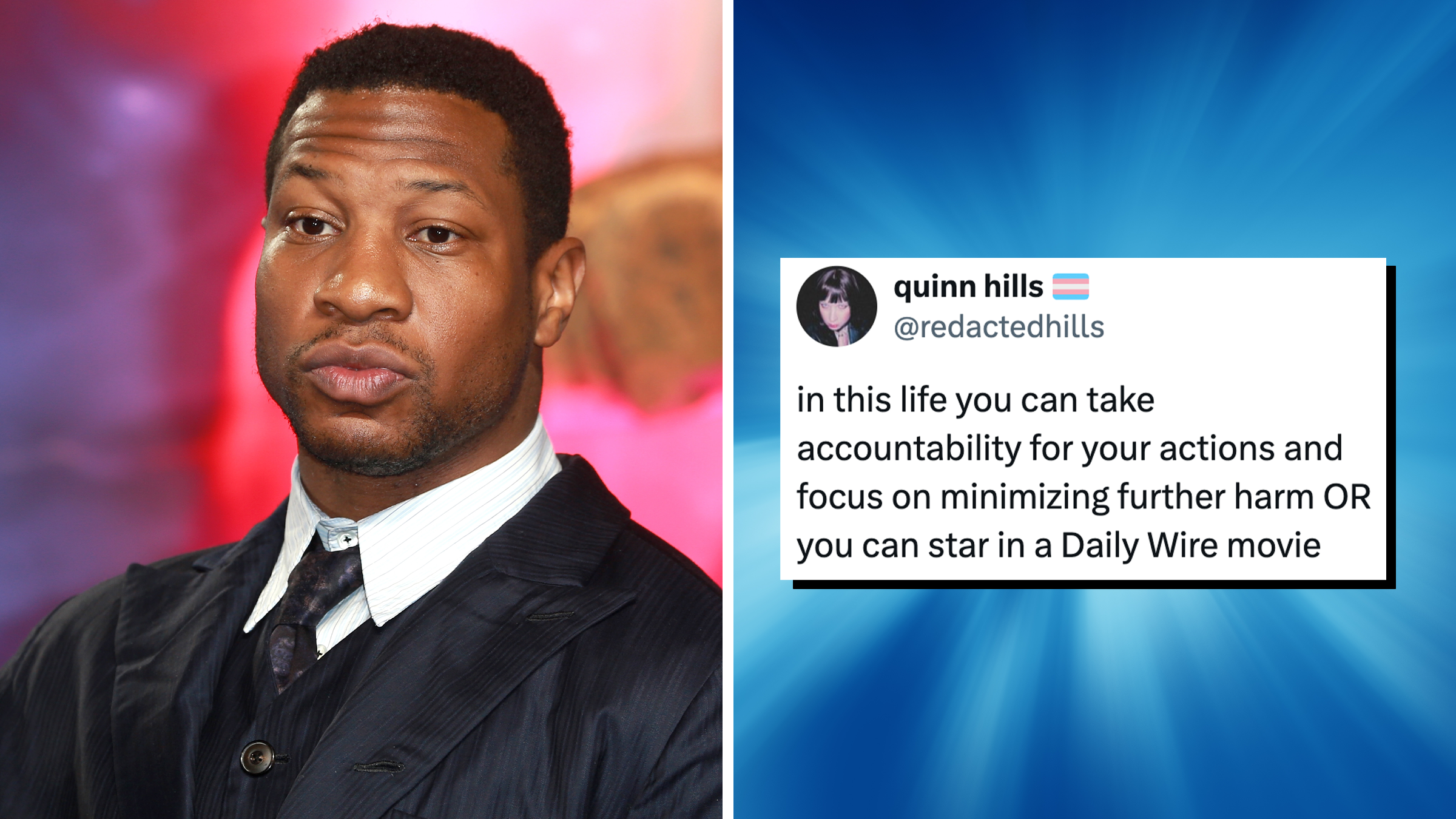

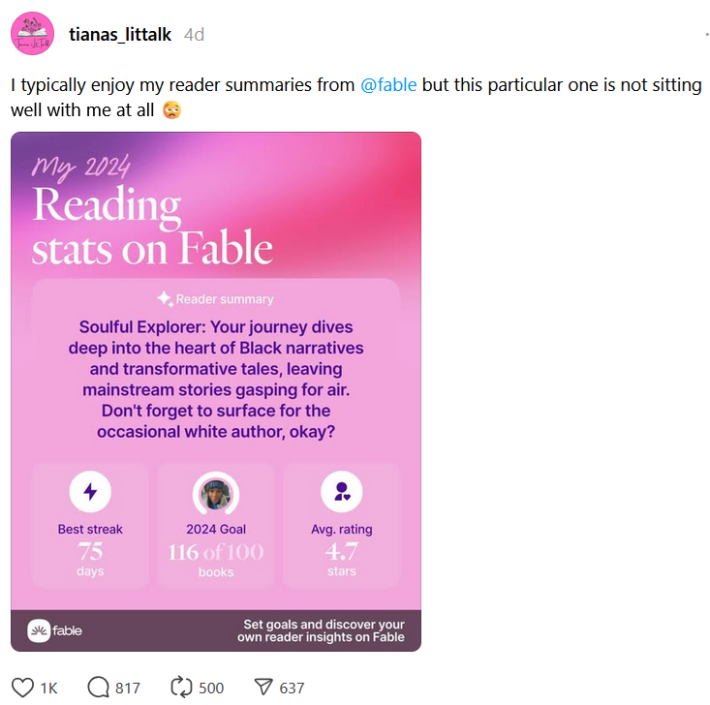

On Dec. 30, 2024, Threads user @tianas_littalk posted a screenshot of her Fable app reader summary for the year. She stated that she usually enjoys this feature, which gives each user a couple of statements on their reading habits for the past 12 months, but "this particular one is not sitting well with me at all."

"Soulful Explorer: Your journey dives deep into the heart of Black narratives and transformative tales, leaving mainstream stories gasping for air," the summary reads. "Don’t forget to surface for the occasional white author, okay?"

People took issue with both the suggestion that the reader spend more time with the work of white authors, who are already overrepresented in published literature, and the "mainstream" bit. Threads user @poemandpen commented, "Beyond the obvious, the implication that Black stories aren't mainstream is truly bonkers."

"What the actual what?" asked @stephenmatlock. "Do white authors somehow have a mandatory place in our personal reading habits?"

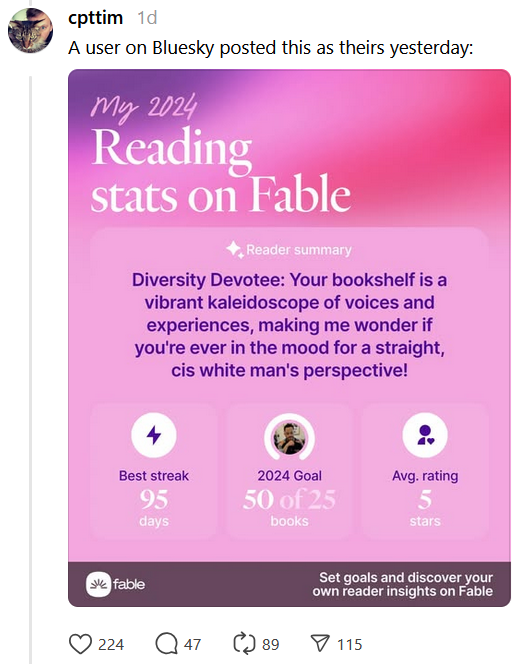

Another commenter posted a screenshot of a Bluesky user's reader summary from Fable in which the AI wonders "if you're ever in the mood for a straight, cis white man's perspective!" This user, who goes by @dbg.was.here on Threads, confirmed that the summary is real and linked to his Fable account to prove it.

Fable responds to allegations their AI is racist

Three days later, on Jan. 2, 2025, Fable posted a video response from their "head of product" Chris Gallello. Gallello apologized and said it was important to the company to provide a "safe space" for all users and that they "fell short on that."

"We got a couple reports from users about our reader summary feature on the profile using very bigoted, racist language," said Gallello. "And that was shocking to us because it's one of our most beloved features. Like, so many people in our community love this feature. And to know that that feature has generated negative language ... it's a sad situation, I'd say."

Gallello went on to apologize for "putting out a feature that can do something like that" and then listed planned changes to the reader summary that did not include removing the feature that can do something like that. He began by explaining how the program works before finally using the term "AI" later in the video.

The changes include adding a notice that the reader summary is AI-generated, allowing users to opt out of the written summary, removing the "playfulness" from the language model, and including "thumbs up" and "thumbs down" buttons so users can indicate whether or not they approve of what the AI spit out.

Fable app users: 'Stop using AI'

Comments on the video are not 100 percent negative, but certainly skew that way. Many users told Fable to drop the AI altogether or declared that they would delete their accounts.

"Since you use AI, I won’t be using Fable," said @james_e_jolly.

"So you are sorry you got caught tossing your whole year end wrap up to AI," concluded @jyavor.

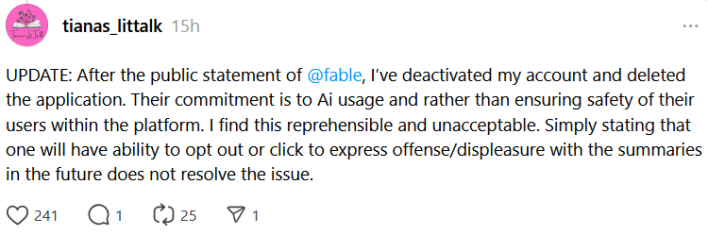

Meanwhile, the user who originally blew up the issue posted her response to the Fable apology video as a comment on her post. She is also ditching the app after learning that the offending statements came from AI and that Fable plans to continue using it.

"After the public statement of @fable, I’ve deactivated my account and deleted the application," she wrote. "Their commitment is to Ai usage and rather than ensuring safety of their users within the platform. I find this reprehensible and unacceptable. Simply stating that one will have ability to opt out or click to express offense/displeasure with the summaries in the future does not resolve the issue."

Human racism causes AI racism

In their comments on Fable's apology video, some users said the company should have been aware that this was an inevitable result of using AI. Experts and researchers on AI learning models have repeatedly concluded that LLMs and other AI technologies will adopt the same biases as humans on a long enough timeline.

This applies to all forms of prejudice, but anti-Black racism seems to be one of the stickiest for AI. A 2024 report by the Stanford AI department came to the same conclusion, saying that the issue is particularly egregious against speakers of African American English (AAE).

When asked to describe these speakers, large language models were "likely to associate AAE users with the negative stereotypes from the 1933 and 1951 Princeton Trilogy (such as lazy, stupid, ignorant, rude, dirty) and less likely to associate them with more positive stereotypes that modern-day humans tend to use (such as loyal, musical, or religious)."

"People have started believing that these models are getting better with every iteration, including in becoming less racist," says computer science graduate student Pratyusha Ria Kalluri. "But this study suggests that instead of steady improvement, the corporations are playing whack-a-mole – they’ve just gotten better at the things that they’ve been critiqued for."

The Daily Dot has reached out to Fable and @tianas_littalk for comment via Instagram.
