Last week, South Dakota Sen. John Thune sent an open letter to Facebook founder Mark Zuckerberg about the scandal that has enveloped the social network in recent days.

“With over a billion daily active users on average, Facebook has enormous influence on users’ perceptions of current events, including political perspectives,” Thune, a Republican who chairs the Senate Commerce Committee, wrote. “If Facebook presents its Trending Topics section as the result of a neutral, objective algorithm, but it is in fact subjective and filtered to support or suppress particular political viewpoints, Facebook’s assertion that it maintains a ‘platform for people and perspectives from across the political spectrum’ misleads the public.”

As Republican lawmakers take the social network to task, the public is decidedly less enthusiastic about the prospect of government intervention into the way that Facebook, not to mention other social networks like Twitter and Snapchat, decides what content to show its users.

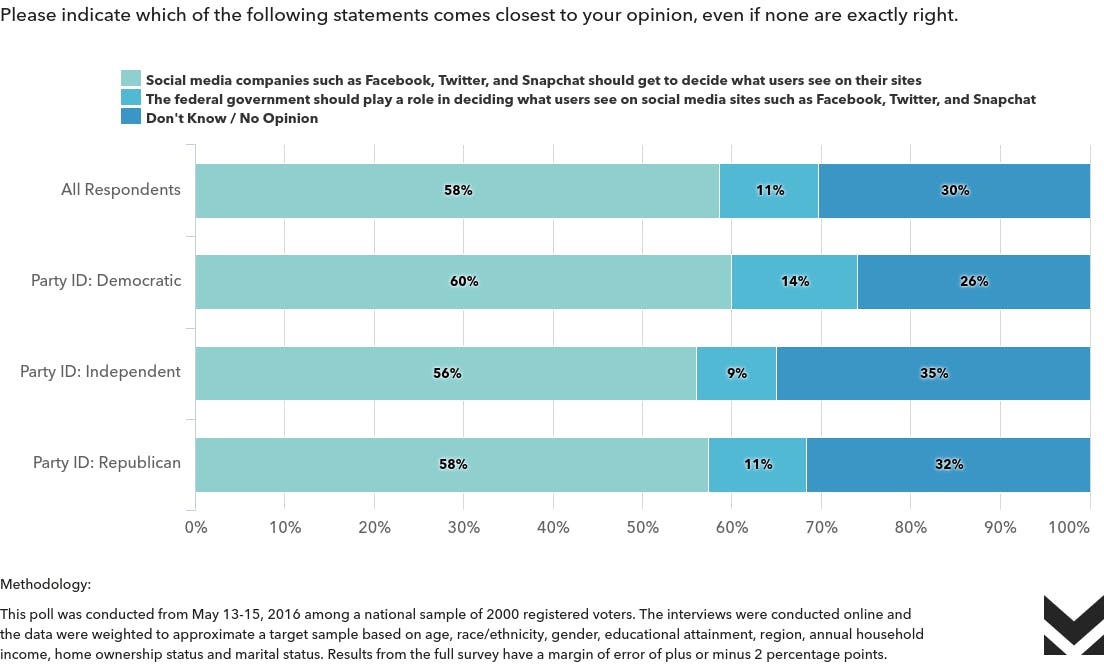

A survey of 2,000 registered voters, released on Wednesday morning by Morning Consult, found that only 11 percent of people approved of the government deciding what users see on social-media sites. Meanwhile, 58 percent said that only the companies themselves should decide, and the remaining 30 percent either didn’t know or had no opinion.

Democrats were slightly more likely than Republicans to envision a role for government in overseeing the content that social networks show users, and that’s probably not solely due to an ideological split over the role of government.

The scandal that kicked off this conversation began with a Gizmodo article that featured a number of anonymous former employees of Facebook’s trending-topics team admitting that they “routinely suppressed” news coming from conservative sources.

Facebook pushed back against allegations of bias. Tom Stocky, the company’s vice president of search, wrote that “Facebook does not allow or advise our reviewers to systematically discriminate against sources of any ideological origin and we’ve designed our tools to make that technically not feasible.”

One former contractor who worked on the trending-topics team wrote in an anonymous op-ed for the Guardian that, while her work environment was “toxic,” there was no overt political favoritism taking place.

Nevertheless, Zuckerberg is meeting this week with conservative media figures, including talking head Glenn Beck and former George W. Bush press secretary Dana Perino, to reassure them that the site isn’t deliberately discriminating against right-wing content.

“For us, that only 11 percent said they wanted government to play a role wasn’t so surprising, especially when it comes to things that circle around the First Amendment,” explained Jeff Cartwright, the director of communications at Morning Consult. “People don’t want government intervening [in their lives]; it’s the whole ‘Big Brother’ effect.”

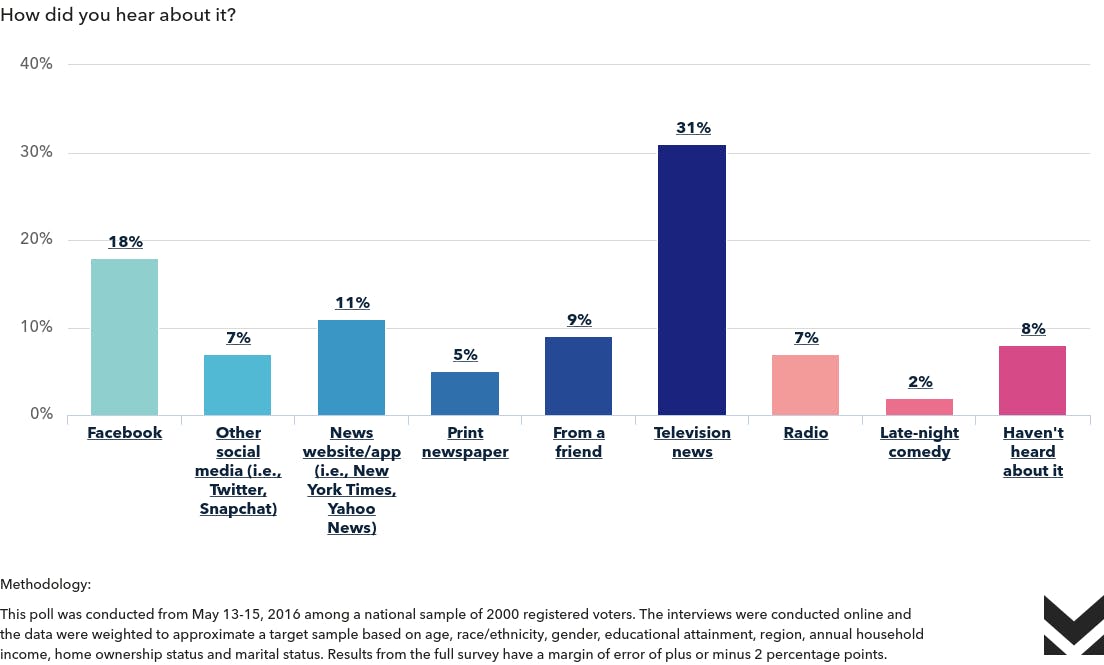

“Something that did really jump out to us is that we asked people who had heard of these allegations where they heard it from,” Cartwright continued. “The first was TV news, but the second was Facebook. So it really shows the power that Facebook has in terms of delivering news. They beat out digital media, they beat out radio, they beat out late-night comedy. Those had previously been the kings. So, in terms of being this highway for news, Facebook plays a really important role.”

Facebook’s power as a distribution mechanism is difficult to overstate. With more than a billion users worldwide, the traffic Facebook can deliver to a news story, or a collection of news stories, is far greater than that of any other online platform—especially for younger people.

Representatives for Facebook did not respond to a request for comment.

The Morning Consult survey also found that respondents were largely comfortable with social networks actively using curators to determine what they see, as opposed to having the content exclusively decided by an algorithm—47 percent said they were comfortable with human involvement and 34 percent said they weren’t.

In a way, this dynamic sets up something of a false choice. While considerable attention has been paid to the biases of the people who select the trending topics—articles about which appear in a box along the right-hand side of the site—the underlying algorithm that determines the entire News Feed’s content is far from neutral. It is subject to specific and deliberate decisions made by its creators.

For example, in late 2013 Facebook tweaked its algorithm to show fewer memes and more “high quality content.” This move was a boon to news sites, like the Daily Dot, which saw a torrent of increased traffic from the platform. While decisions like this one may seem relatively neutral, the political ramifications are significant.

In 2012, Facebook conducted a study to see whether increasing the number of hard-news articles that a user saw would boost voter participation. Company data scientists tweaked the News Feeds of some two million users so that, if any of their friends liked or shared a news story, that piece of content would then be boosted in their feed.

After the experiment’s conclusion, Facebook polled the users, received 12,000 responses, and found an increased propensity to have voted in the 2012 U.S. presidential election. The effect was stronger for people who used Facebook infrequently than for those who logged in more or less every day.

The experiment—which, unlike some of the company’s other research projects, was never formally published—was detailed in a presentation by Facebook researcher Lada Adamic.

Because Facebook’s demographics, at least in the United States, tend to skew younger and more female than the population as a whole, and because those two groups tend to favor Democrats, simply showing more news articles to users, even if it is done evenly across the board, will likely provide a relative advantage to the Democratic Party.

When the Daily Dot reached out to officials at the Federal Election Commission, the Federal Trade Commission, and the Federal Communications Commission for a story about Facebook’s ability to swing elections earlier last month, none of the agencies said they considered themselves overseers of this type of activity.

The only way in which the Federal Election Commission, an agency that has been ground to a virtual standstill by partisan gridlock, could conceivably get involved is if Facebook coordinated directly with a political campaign to manipulate what users saw for the candidate’s benefit. But even then, it wouldn’t be illegal; it would just need to be publicly disclosed as an in-kind contribution.

While Sen. Thune may see a role for government in determining how Facebook (a private company that, through its ubiquity, often feels like a public utility) makes decisions about what to show users, the public is decidedly less convinced. It’s a sentiment that, in a slightly different context, Thune himself once shared. As TechCrunch notes, Thune argued stridently in 2009 against government regulators reimposing the Fairness Doctrine.

“I believe it is dangerous for Congress and federal regulators to wade into the public airwaves to determine what opinions should be expressed and what kind of speech is ‘fair,’” Thune wrote in an op-ed. “People have the opportunity to seek out what radio programs they want to listen to, just as they have the freedom to read particular newspapers and magazines, watch particular news television programs, and increasingly, seek out news and opinion on the Internet.”