In 2023, when a job seeker with autism attempted to complete an automated machine-based assessment as part of the hiring process, he came to the conclusion that the test was designed to filter out people with certain disabilities.

He recalled to the American Civil Liberties Union (ACLU) that the personality assessment was full of scenario-based questions such as “I tend to avoid large groups of people,” “I don’t know why I get angry sometimes,” and “I have difficulty determining how someone feels by looking at their face.” These are common symptoms of autism spectrum disorder.

The personality test, a popular product developed by Aon Consulting, a recruiting technology vendor that reportedly has the second-largest share of the pre-hire assessment market globally, claimed to be “objective,” “fair,” and “culturally irrelevant.” Aon advertised that the test would enable employers to look at candidates’ behaviors and personality traits, and select “the best candidates regardless of race, class, gender or other irrelevant factors,” the ACLU wrote in a complaint against Aon that it filed with the Federal Trade Commission on behalf of the job seeker.

Aon's job screening products use "algorithmic or AI-related features," per the ACLU. The ACLU alleges that, by screening for personality traits and behaviors that are irrelevant to job performance, Aon's tests create a "high risk" that qualified people with certain disabilities and mental health issues could be "unfairly and illegally screened out."

“Autistic people or people with other similar disabilities are likely to have a different pattern of response than neurotypical people, including being more likely to answer psychological test items in a literal or ‘suggestible’ way,” the complaint states.

The company insists that its tools are designed to be fair to all.

“The design and implementation of our assessment solutions—which clients use in addition to other screenings and reviews—follow industry best practices as well as the Equal Employment Opportunity Commission, legal and professional guidelines,” Aon told Legal Dive in an emailed statement.

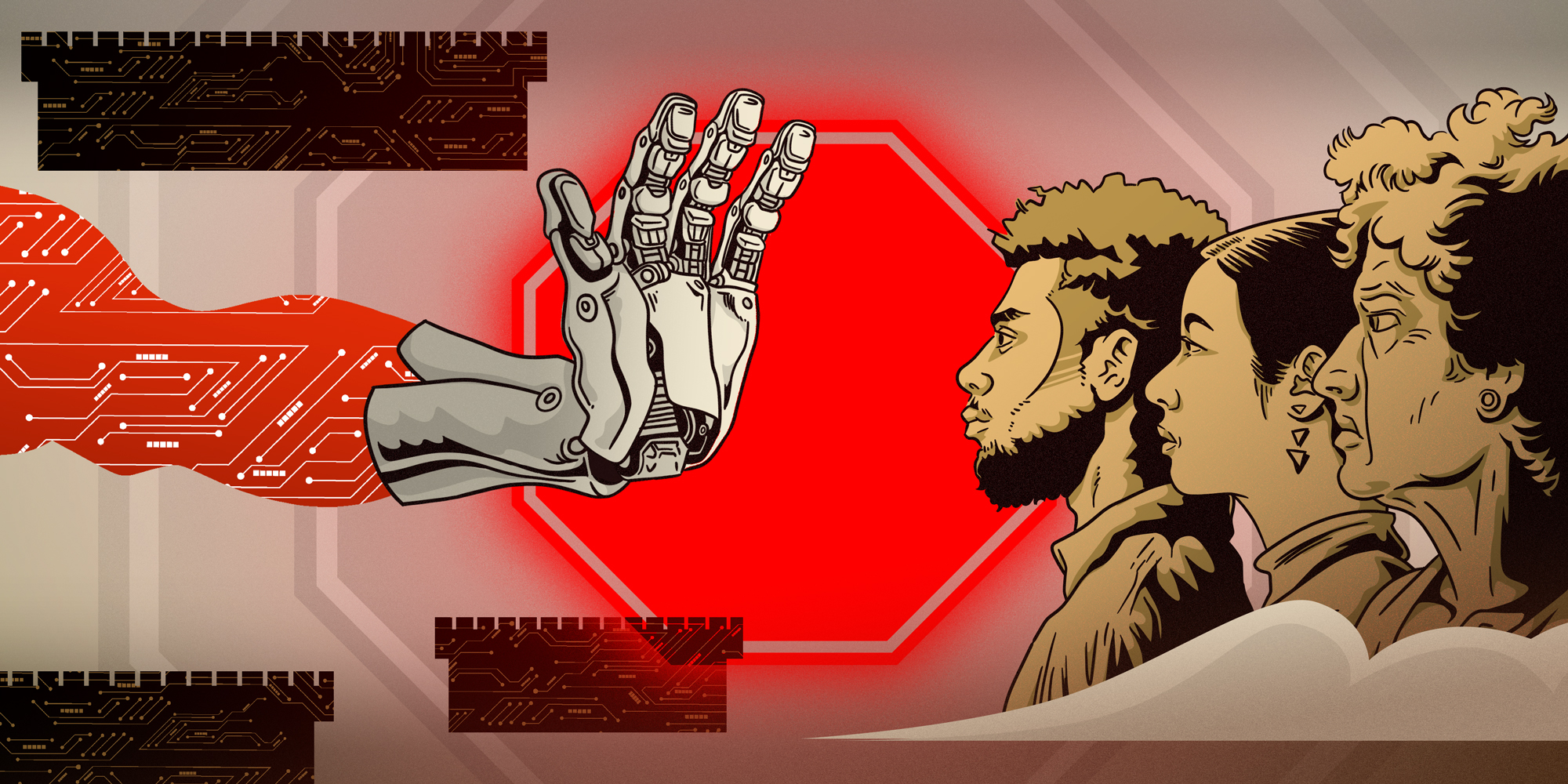

Rejected by a robot

The use of these tools in hiring has exploded in recent years.

Amid the boom of artificial intelligence (AI), a growing number of companies are counting on this technology to streamline the hiring process to find what many call “model candidates” to fill positions. A January 2023 report showed that at least 70% of employers use automated tools to screen applications, score candidates, and conduct background checks, despite equal rights groups' and tech professionals' concerns that biases are embedded in the datasets that train these tools.

“Most of our AI was built within racist, sexist, ableist, capitalist systems, so all of these practices and realities have been built into AI systems, and are just being executed at a higher level within hiring,” said Caitlin Seeley George, campaigns and managing director of Fight for the Future, a non-partisan digital rights advocacy group.

"We have been saying that we desperately need policies to address these harms. Without those protections, the disparity and discrimination from AI can just be augmented when it comes to employment, housing, and education."

It's not just people with disabilities who are suspicious of these tests. Many job applicants feel that it may be unfair to use AI in hiring. Some have expressed concerns that the tests can be too broad, such as Aon’s assessment reportedly seeking candidates who have what critics view as non-job-related traits, including positivity, liveliness, and ambition. Others say their applications seem to be rejected automatically, and wonder if the algorithms work against them.

"I've been getting rejected instantly, the same day," one person posted on the Recruiting subreddit. "I've applied to several jobs that I think are low-hanging fruit, no experience required. Retail jobs, front desk jobs, dishwasher jobs, etc."

The job seeker wrote that they are an experienced lab technician who studied biomedical engineering.

"I must be getting auto-rejected by AI because there's no way they genuinely think I can't do these jobs. I don't believe a human actually looked at any of my applications," they continued. "How much of a match does it need to be to get past the computer?"

The job market may be getting tougher. Treasury Secretary Scott Bessent recently said that “there are no guarantees” the country won't slip into a recession.

A black box

Many proponents of AI claim that machines are immune to human emotional swings, and thus are fairer and more objective.

That’s not how AI works, experts say. They point out that AI models are trained on massive volumes of past data, and many industries have been historically geared toward straight white men. Civil rights advocacy groups are concerned that the blind pursuit of AI in hiring, lending, and other areas may further spread real-world harms across American life, exacerbating discrimination on the basis of race, gender, disability and other protected characteristics.

“AI is not neutral, it has biases from training data, the historical data which have tons of biases baked into it,” said Calli Schroeder, senior counsel and lead of the AI and human rights projects at the Electronic Privacy Information Center.

Schroeder told the Daily Dot that machines don’t understand context.

“The reason why incarceration rates are higher for Black people historically is not because Black people are more criminal. It is because of the way our prison system is built, the way our criminal justice system is built,” she said. “Just like with hiring, it’s not going to look at historical data and realize, for a hundred years, women were not allowed to work in this profession, that’s why there’s a lot of historical data about men doing this job.”

Regulation is vital because machines don’t correct themselves, Schroeder added. Humans need to be able to interrogate the system and fix errors, otherwise the machines will keep producing flawed results.

Can AI discriminate?

What sets AI apart from the production machines roaring in factories is the complex algorithms that let it “think” and accomplish tasks “creatively,” processing massive amounts of data faster than humans can. As a result, ordinary users are often unable to detect or correct built-in mistakes, critics say, which allows harms to be amplified.

For example, AI resume screening excels at pulling together different pieces of information and drawing inferences from them.

“When it reads through resumes, there are certain organizations or clubs that may indicate your political leaning, whether you’re gay or straight, whether you’re a woman or a man,” said Schroeder. “If they read enough information in their training data sets, they’ll make those connections.”

Even when the requester asks the tool to remove all terms about race, gender, and sexuality, it will still draw conclusions about these characteristics, said Schroeder, because there is no perfect way to define and avoid every keyword associated with them. Membership in certain sororities, for example, may strongly indicate that a candidate is a Black woman.

“When someone mentions they can speak Spanish, or they’re part of a Latino group, it’s not absolutely clear that they’re not white, but it’s very likely that they are Latino or tied to that community, so even if you’re not looking for keywords, you’re able to put these things together,” she said.
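The proxy effect Schroeder describes can be illustrated with a toy calculation. The sketch below is only an illustration under stated assumptions: the records are made up, and `proxy_strength` and `baseline` are hypothetical helper names, not part of any vendor's tool. It compares how well a deleted attribute can be guessed with and without a field that stays on the resume.

```python
from collections import Counter, defaultdict

def baseline(records, protected_field):
    """Accuracy of always guessing the most common value of the protected field."""
    counts = Counter(r[protected_field] for r in records)
    return counts.most_common(1)[0][1] / len(records)

def proxy_strength(records, proxy_field, protected_field):
    """Accuracy of guessing the protected field from the proxy field alone,
    using a majority vote within each proxy value."""
    by_proxy = defaultdict(Counter)
    for r in records:
        by_proxy[r[proxy_field]][r[protected_field]] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_proxy.values())
    return correct / len(records)

# Made-up records: the "group" column stands for an attribute that was
# removed from the resume; "club" is a field that was left in.
records = (
    [{"club": "X", "group": "g1"}] * 4 + [{"club": "X", "group": "g2"}] * 1 +
    [{"club": "Y", "group": "g2"}] * 4 + [{"club": "Y", "group": "g1"}] * 1
)

base = baseline(records, "group")
with_proxy = proxy_strength(records, "club", "group")
print(f"guessing blind: {base:.0%}, guessing from club: {with_proxy:.0%}")
```

In this invented data, the club field alone lifts the guess well above chance, which is the pattern that makes keyword removal an incomplete fix.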

Human recruiters make mistakes as well, but at least they need to “articulate an answer on how they made those decisions in an uncomfortable conversation,” said Schroeder. “For AI, it doesn’t even make an effort to explain it.”

This adds to the difficulty of litigating against AI recruitment tools. For example, the machine may not have a definitive answer as to why it disproportionately selected white male candidates for a position that, after centuries of systemic racism and sexism, has traditionally been held by white men.

“We can only argue with the results, we can’t talk about the process, because even the people using the systems don’t always know the process,” she said.

Critics also say that it is impractical to expect job seekers to understand AI tools in hiring and how they could work against them.

Now some legislators are starting to take note. Local Law 144 is a New York City ordinance that is one of the first attempts to regulate the use of such tools. It requires employers to conduct bias audits of automated recruitment tools and inform the public that they use such tools.
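One figure that bias audits of this kind commonly report is an impact ratio: each group's selection rate divided by the selection rate of the most-selected group. The Python sketch below is a minimal illustration, not an audit procedure. The counts are made up, the function names are hypothetical, and the 0.8 cutoff is the "four-fifths" rule of thumb from federal employee selection guidelines, used here only as an assumed flagging threshold.

```python
def selection_rates(outcomes):
    """outcomes maps group name -> (number selected, number of applicants)."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}

def impact_ratios(outcomes):
    """Each group's selection rate relative to the most-selected group."""
    rates = selection_rates(outcomes)
    top_rate = max(rates.values())
    if top_rate == 0:
        raise ValueError("no group had any selections; ratios are undefined")
    return {group: rate / top_rate for group, rate in rates.items()}

# Hypothetical screening results, for illustration only.
outcomes = {
    "group_a": (50, 100),
    "group_b": (30, 100),
    "group_c": (45, 100),
}

ratios = impact_ratios(outcomes)
# Assumed threshold: flag any group selected at under 80% of the top rate.
flagged = sorted(group for group, ratio in ratios.items() if ratio < 0.8)

for group, ratio in ratios.items():
    print(f"{group}: impact ratio {ratio:.2f}")
print("flagged:", flagged)
```

A low ratio does not explain why a tool favors one group; it only shows the disparity in outcomes, which is the part of the system that outsiders can measure.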

Some say this doesn't go far enough because job seekers are unlikely to object to being screened by AI—if they read the fine print on an application or job posting in the first place.

“In a lot of cases, if you don’t consent, then your resume just doesn’t get considered, so it’s a kind of punishment for the applicants,” said Schroeder. “It’s a really frustrating use of the law and definitely not what the city intended when they passed it, and there are so many people not following it that I don’t know if they even have the resources to actually fine everybody who’s violating it.”

Dismantling AI regulation

The AI boom is affecting industries worldwide. Many experts agree that the law hasn't caught up with the rapidly developing technology. They say that a lack of regulation encourages irresponsible development and use of AI that may undermine the civil rights and safety of the most vulnerable and underrepresented groups.

Some say the U.S. has recently taken steps backwards.

In the first few days of President Donald Trump's second term, he declared his desire to accelerate AI development and swept aside regulatory and transparency requirements for AI at the federal level.

Olga Akselrod leads the ACLU's racial justice program, which tracks algorithmic discrimination in employment and advocates for centering civil rights in policy-making regarding AI and other automated decision-making technologies.

AI regulation was just getting started during the Biden administration, said Akselrod. In October 2023, the former president issued an executive order on Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, which created some transparency and reporting requirements for AI development. It mandated that developers share testing results with the federal government and explicitly stated that the federal government would punish violations of privacy and civil rights laws.

Some experts felt it was a good, but tepid, first step toward regulating AI. The order largely created task forces, advisory committees, and reporting requirements, Scientific American reported. It also directed agencies to issue AI guidance within a year.

Trump repealed the executive order on the first day of his second term. Three days later, he issued his own executive order on AI, which goes in the opposite direction. It revokes what it describes as "barriers" to development and orders federal agencies to overhaul measures that impede the development and deployment of the technology.

Some observers were alarmed.

“Now we are seeing the administration begin to unravel those basic safeguards at the same time as the government is dangerously touting and deploying AI to slash government programs and grants, make personnel decisions, and replace workers, even though AI can't actually do any of those things accurately, humanely, or without bias,” said Akselrod.

Akselrod’s team also noticed that some of what they view as common sense guidance on AI was removed from public agencies’ websites. The sites included information on workers' basic rights and steps for employers to ensure their AI use complies with the law, she said.

However, she noted that Trump's executive order doesn't change what's required of employers under federal civil rights laws. Companies must ensure hiring practices do not run afoul of existing laws that bar discrimination or create work barriers for workers.

"So, employers are still on the hook for discriminatory AI tools, and they would be unwise to ignore those risks just because somebody else is president," said Akselrod.

The ultimate harm

Some fear that the federal government's ambition for the AI industry may lead to irresponsible development and deployment of the technology, including in hiring.

According to the ACLU’s press release about the complaint filed against Aon on behalf of the autistic job seeker, the tool poses immediate harm to job applicants, and the deceptive claims about the AI products’ fairness and objectivity are also likely to mislead employers, exposing public agencies and private companies to increased legal liability for violating civil rights and anti-discrimination statutes.

“I think that employers and vendors have really been sticking their heads in the sand around their risk of liability for these tools,” Akselrod told the Daily Dot.

Both Akselrod and Schroeder said they are not optimistic about the current federal administration strictly enforcing civil rights laws regarding discrimination, but there is some faint hope that local legislative and regulatory bodies are catching up.

Schroeder worries that once the norm of laissez-faire AI regulation is established, it will be difficult to lock the industry back into the cage.

“When you let technology run rampant with no restrictions, like how the technology is made and how it develops, how you’re allowed to use it and sell it, it’s very, very hard to push it back and say ‘You have to completely redo this,’” said Schroeder. “Making the options broader is always easier than trying to narrow them after the fact.”
