Facebook was launched in 2004. Fifteen years later, the social network announced it was banning white nationalism from its site.

But was that nothing more than lip service to the outcry over hate on the platform?

In the wake of the decision, Media Matters published a list of 43 pages that “push white supremacy or belong to known white supremacists.”

As of June 13, three months later, 38 of those pages remain live.

So while Facebook says it’s banning white nationalism, pages like “White People Origins, not out of Africa” and White Lives Matter Movement remain. The former discourages white people from having interracial relationships and denies the scientifically accepted fact that modern humans originated in Africa; the latter has encouraged whites to celebrate Martin Luther King Jr.’s killer on MLK Day and claimed that “Diversity is just [a] code word for white genocide.”

There’s plenty of hate elsewhere, too. In February, the Daily Dot reported that, of the 66 groups then in the Southern Poverty Law Center’s extremist files, 33 had Facebook pages; collectively, they had more than six million members.

Four months later, 32 still have Facebook pages.

Not only are these groups alive and well on the site, they continue posting content that violates Facebook’s community standards, whipping their followers into hateful lathers about Muslims, LGBTQ people, Black people, and others. Some of the hateful content is in the posts themselves; some of it is in the comments below them.

In addition to the pages for groups that Media Matters or the SPLC have identified as trafficking in white supremacy or hate (all of which, generally speaking, deny being racist or hate groups), there is an unknown number of other pages, groups, and public figures on the site doing the same.

Among these, you will often find the worst of the worst of hate speech.

At the House Judiciary Committee hearing on hate crimes and the rise of white nationalism on April 9, a witness specifically referenced “It’s Okay to be White” as an example of a white supremacist group on Facebook. As of this writing, there are several Facebook pages bearing variations of “It’s Okay to be White” or “It’s Alright to be White” still up on the platform.

Last June, the largest “It’s Okay to Be White” page, which has 20,300 followers, posted a meme that read, “Persons of Jewish origin don’t see themselves as white. Please respect their wish to not be lumped in with us.”

The page did not respond to the Daily Dot’s question about how it responds to allegations of racism.

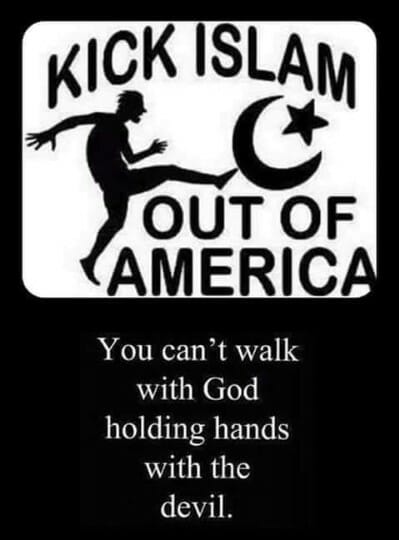

Posts like the one below remain just a few clicks away. Not only is the meme below ignorant of the fact that Christians and Muslims (and Jews) worship the same God, it also clearly violates Facebook’s standards prohibiting attacks based on religious affiliation.

Another such page recently posted approvingly of Waterville, Maine, Mayor Nick Isgro, who has been criticized for his anti-immigrant and Islamophobic comments, calling him one of the state’s “best bets against anti-white racism.”

Beneath the post, one person commented, “I’m a Mainer, on Monday, it was announced that there is a bill that was introduced to make the holahoax a mandatory class for all schools in Maine.” (‘Holahoax’ is a term used by Holocaust deniers to refer to the genocide of the Jewish people during WWII, which they don’t believe happened.)

Bigoted comments are commonplace on pages like these.

Public pages like this persist. On Facebook, one can find posts such as a meme of a Black man wielding a sledgehammer and looming menacingly over a frightened-looking Black toddler, captioned “Never give up on your dream of having it aborted,” suggesting that the man should kill the child, referred to in dehumanized terms as “it.”

Another page has a racist meme of an empty prison cell captioned, “Why don’t black people get sunburn? Because prisons are indoors.”

With violence and hate crimes on the rise in countries around the world, society has increasingly started to ask social media companies what, if any, responsibility they bear for either allowing such content or failing to effectively deal with it. Many believe Facebook is the worst of the lot, partly due to its role as the largest social media company, partly because of how it groups users together based on shared interests, but mostly because it has long appeared unwilling to effect meaningful change.

For its part, Facebook has responded to the intensifying backlash by at least attempting to address the problem of hate on the site.

However, those efforts fall far short.

In April, Neil Potts, the company’s public policy director, confidently assured the House Judiciary Committee that Facebook is serious about eradicating racist and other hate speech, so serious that it has more than “30,000 people focused on safety and security,” including subject matter experts, former law enforcement and intelligence officers, content moderators, and engineers who write code for AI.

The company, he said, also partners with nonprofit organizations that are experts on various forms of hate speech, as well as with other tech and social media companies, to identify and understand hate speech, and it empowers users to report such content themselves. He described the cumulative effect as a “holistic method” of policing hate on the platform.

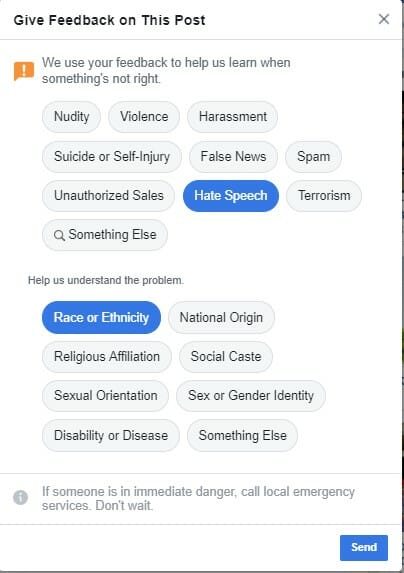

To test the reporting process, on May 15 a Daily Dot reporter gave feedback and reported two racist memes on public Facebook pages. Within hours, the first of the two, which approvingly referred to segregation, had disappeared.

On May 18, the company notified the reporter that it had taken down the post for violating its community standards, and notified the user who posted it that it had done so, without revealing the identity of who reported it.

The company did not take down the second, a post on the It’s Okay to Be White page that used a racial slur, referred to “terrorist Israel,” and urged votes for the openly anti-Semitic Patrick Little, then a Republican candidate for a U.S. Senate seat in California.

On May 21, the company sent an apparently automated message via the support inbox in which it said the post did not violate its community standards and suggested a few options for avoiding bumping into such content in the future, such as blocking, unfollowing or unfriending.

“No one should have to see posts they consider hateful on Facebook, so we want to help you avoid things like this in the future,” the notice states in part. “You may also consider using Facebook to speak out and educate the community around you. Counter-speech in the form of accurate information and alternative viewpoints can help create a safer and more respectful environment.”

Empowering users to easily and anonymously self-report violations of Facebook’s standards does help deal with the real problem that AI may never be completely effective at making subjective decisions about what is “hate.”

Yet some complain that Facebook doesn’t always remove reported content, as happened with the post encouraging votes for Little, and that it is unfair to rely on users to report content and individuals that may reasonably terrify them.

“They have this sort of way of operating where they say you should report content to us, and we’ll do something about it. But that places the burden on the victim,” said Madihha Ahussain, special counsel for anti-Muslim bigotry at Muslim Advocates, who said this applies to all social media companies, not just Facebook.

“We’ve tried to express to Facebook that the burden cannot be on any one victim to report, especially when it’s happening at such a vast scale and at a frequency that’s very alarming,” she added.

“You would expect more empathy from these companies for their users and for the experiences their platforms are exposing them to,” said Keegan Hankes, senior research analyst with the SPLC. “…This type of speech stifles access to underrepresented communities.”

Ahussain also cited the opaqueness of Facebook’s adjudication of hate-based content, saying that although she’s been talking to the company for years, “I don’t fully understand the ways they make decisions about that.”

Like others, Ahussain opined that part of the problem could be in the company’s structure itself. Founder Mark Zuckerberg’s control is total; even the board is not really empowered to overrule him.

“[This] creates a weird dynamic where there is less accountability over the company,” she said.

Others believe there is a disconnect between Facebook’s top leadership and its rank and file. While the 30,000 people Potts referenced likely include some passionate, knowledgeable advocates and experts on hate speech and white supremacy, the machine is only as good as its motherboard.

“I think individually the people that work on the policy teams care very deeply … [but] the leadership at Facebook has failed,” Hankes said.

A newer development is that when users search the site for some of the pages associated with known hate groups, a warning appears: “These keywords may be associated with dangerous groups and individuals. Facebook works with organizations that help prevent the spread of hate and violent extremism.”

A “Learn More” button below the message links to the nonprofit Life After Hate, which helps people leave violent, extremist hate groups.

This feature too needs some work; of all the hate groups that the Daily Dot searched for, only five prompted this warning: American Renaissance, Identity Evropa, Keystone United, Proud Boys and Westboro Baptist Church.

Those who defend Facebook often point out that identifying and defining hate speech is difficult and highly subjective, partly because words and phrases can have multiple meanings ranging from racist to mundane.

While searching for pages espousing racism, the Daily Dot stumbled across an Aryan Brotherhood Royal Mech 2k12-16 page that seems to be a social group for sports enthusiasts and friends in India, as well as a White Revolution page that describes itself as an “agricultural cooperative” that works “to promote and activate dairy industry,” and primarily posts pictures of cows and other content related to dairy.

Neither appears to be based in the U.S. or to have any association with hate groups or hate speech.

There’s also a Patriotic Front page, which is extremely similar in name to the SPLC-designated hate group Patriot Front. The latter is described as a “white nationalist hate group” by the SPLC; the former is the official page for the largest political party in Zambia.

If Facebook’s AI were programmed to flag all accounts labeled “Aryan brotherhood,” or “white revolution,” or those that have similar-sounding names to hate groups (a common tactic hate groups use to evade enforcement), pages like these would be innocent victims.

As the platform has ramped up enforcement efforts, some of those affected have responded by whining.

Pages associated with groups that espouse hate are littered with complaints that their posts keep disappearing, or that they’ve been temporarily banned for violating Facebook’s community standards. The irony of bigots complaining about discrimination is apparently lost on them.

The White House has wholeheartedly embraced complaints that social media companies are unfair to its supporters on the far right, last week rolling out a portal for social media users to report bias against them.

Others who have been caught up in Facebook’s anti-hate dragnet have moved to smaller, fringe social networks like Gab, MeWe, 4chan, 8chan, and the like.

This, Hankes said, is progress.

Some, including activists in the field, worry that pushing hate groups underground will make it harder to track them. Hankes disagrees. “It’s very, very difficult for me to stomach that argument given the fact that we should be trying and fighting to contain this,” he said.

Dark places on the internet are nothing new. Letting hate groups have the run of mainstream social media platforms, rather than banishing them to the periphery, only emboldens them and gives them the opportunity to infect more minds, causing damage that will continue to unfold into the future. At this point, Hankes said, containment is the best, and only, option.

“Pandora’s Box is open, and we will be dealing with the consequences for years,” he said.
