When’s the last time you read a website’s privacy policy? Like, really stopped whatever else you were doing on the internet, poured yourself a tall, refreshing glass of lemonade, and curled up with your laptop and a legal document detailing what Snapchat can do with your data?

It’s probably been a while. In fact, reading in full every privacy policy the average person comes across in a single year would take an estimated 76 days. Nobody does that, but when people do engage with a privacy policy, it can actually lower how much they trust an online company.

According to a new study by Kristen Martin, a professor of strategic management and public policy at George Washington University, the mere invocation of formal privacy notices actually decreases the amount of trust users have in a website.

What’s contained in those privacy policies does matter to consumers, but significantly less than what Martin labels “informal privacy norms.” That basically means not doing things that seem creepy or unethical, like using information visitors provide to advertise to their friends, or selling personal information to a third-party data aggregator.


Martin’s study has been accepted by the Journal of Legal Studies and is awaiting publication.

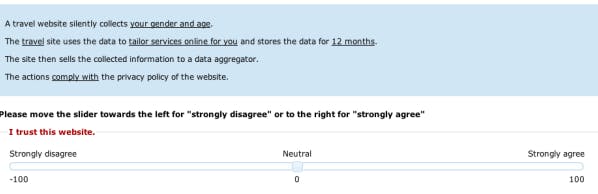

Over a four-month period in early 2015, Martin conducted a pair of surveys measuring which factors users considered important to online privacy. The questions were aimed at determining whether formal contracts, in the form of privacy notices, were effective in boosting user trust, and whether they were more effective than informal privacy norms.

The 1,600 respondents who participated in the study rated 6,400 different scenarios describing ways websites might use their data. Sometimes those actions violated widely held social norms about data usage, and other times they didn’t. Likewise, sometimes the actions violated the site’s stated privacy policy, sometimes they conformed to it, and sometimes the policy didn’t address them at all.

Martin’s goal was to determine how much importance people place on a company following, or not following, the letter of the behavioral guidelines it sets for itself in its privacy policy. She found that privacy notices are often counterproductive in maintaining user trust, and that they matter considerably less than a general sense that the site respects user privacy, regardless of the language of its privacy policy.

“The results suggest that the mere introduction of formal privacy contracts decreases trust,” Martin wrote. “In addition, respondents distrusted websites for violating informal privacy norms even when the scenario was said to conform to or was not mentioned in the formal privacy notice.”

“The mere inclusion of a statement about privacy notices—which systematically varied between conforming to, not being mentioned in, and violating the privacy notice—decreased the average trust rating of the vignettes,” she continued.

Consumers tend to put little stock in formal privacy policies, Martin posits, because of a fundamental information asymmetry between a website’s users and its operators. Privacy policies are typically buried deep within a website and are often full of dense legal or technical language. On top of that, it’s rarely clear what consequences a company would face for violating a policy its own managers wrote in the first place.

The survey respondents did penalize companies for violating their own privacy policies, but that effect was largely secondary.

“I would say that what their practices are in regards to user information is more important than writing a vague notice and making sure you conform to it,” Martin said in an email. “Right now, the U.S. places an emphasis on mere conformance to a notice without any regard to if a company sells the data or uses it in a way that users consider a violation of privacy.”

There have been a number of high-profile instances in which this phenomenon played out in the real world.

In 2014, researchers at Facebook published the results of a study in the Proceedings of the National Academy of Sciences in which the company reduced the number of positive or negative posts appearing in users’ news feeds to determine how the changes would affect the emotional tone of what those users posted. After the study’s publication, Facebook was slammed by critics for conducting what was widely viewed as “emotional manipulation” on users without getting their prior consent.

However, Facebook did technically get users’ consent to be used as guinea pigs. Facebook officials initially defended the experiment by saying it conformed to the company’s privacy policy. That defense turned out to be largely unconvincing to the public, and Facebook later apologized.

Ultimately, it matters less that Google’s motto is “don’t be evil” than that people don’t perceive Google as doing things that seem evil.

Contact the Author: Aaron Sankin, asankin@dailydot.com