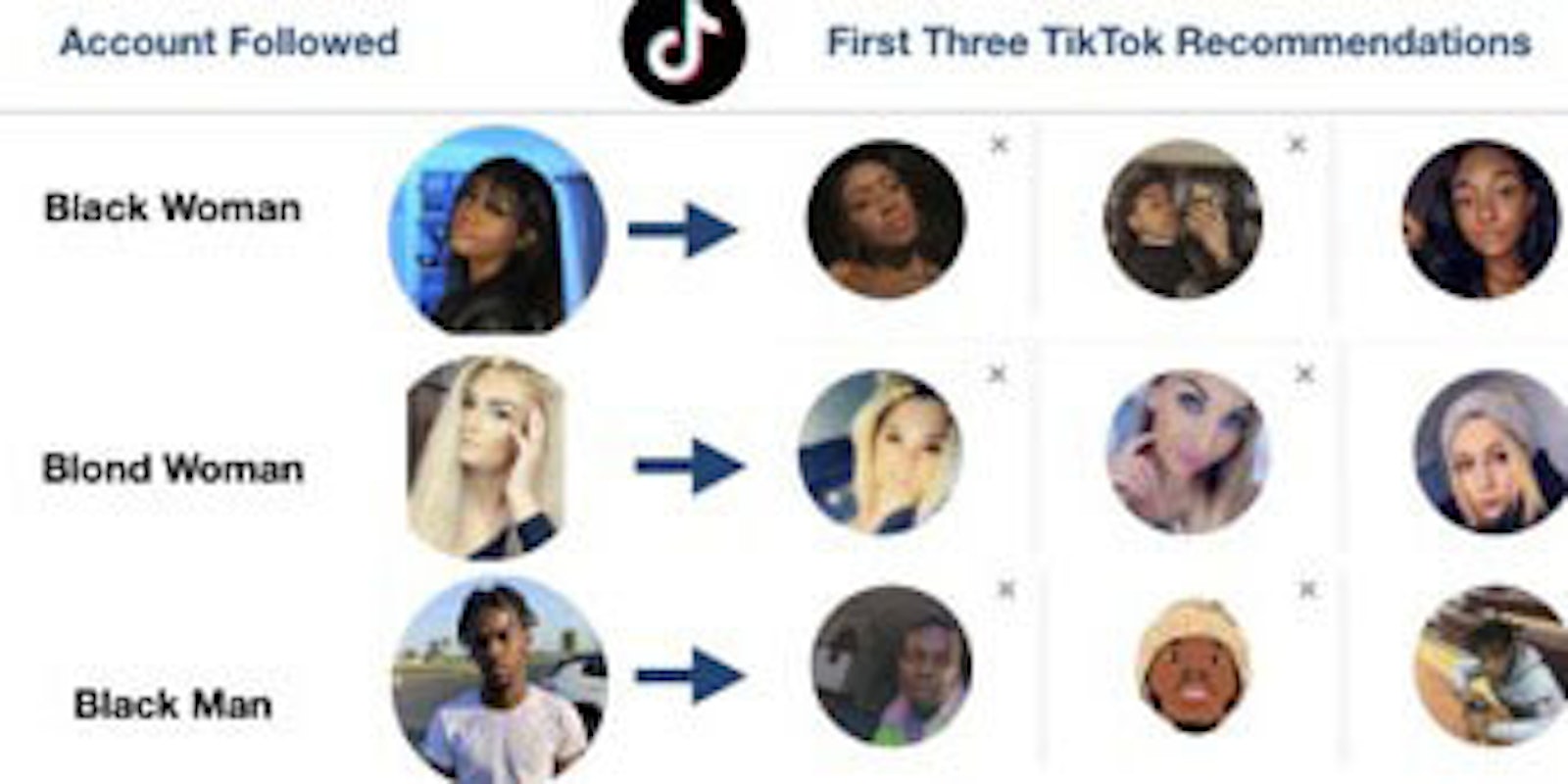

According to an experiment performed by artificial intelligence researcher Marc Faddoul, the algorithm TikTok uses to suggest new users to follow might have a racial bias.

Faddoul, who works at the University of California, Berkeley, and specializes in algorithmic fairness, first shared his findings on Twitter this week.

“A TikTok novelty: FACE-BASED FITLER BUBBLES,” Faddoul wrote. “The AI-bias techlash seems to have had no impact on newer platforms. Follow a random profile, and TikTok will only recommend people who look almost the same.”

Faddoul explained to BuzzFeed News that when a user on TikTok follows an account, they are then shown a series of other accounts they could follow. Faddoul said he noticed similarities among these suggested accounts: the users were of the same race, had the same hair color, and looked alike in general.

Faddoul said he repeated the experiment with a new account and got similar results.

“Clearly, recommendations are very physiognomic,” Faddoul said. “But it’s not just gender and ethnicity, you can get much more niche facial profiling. TikTok adapts ‘recommendability’ on hair style, body profile, age, how (un)dressed the person is, and even whether they have visible disabilities.”

A representative from TikTok told BuzzFeed News that the algorithm isn’t based on race or on the account’s picture, but on the content of the account. According to the representative, this is called collaborative filtering, a process similar to the one used by YouTube and Netflix.

“Our recommendation of accounts to follow is based on user behavior: users who follow account A also follow account B, so if you follow A you are likely to also want to follow B,” a representative told BuzzFeed.
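The "users who follow A also follow B" rule the representative describes can be illustrated with a minimal sketch. It is a toy version of co-follow collaborative filtering, not TikTok's actual system: the `follows` data, the account names, and the `recommend` function are all hypothetical.

```python
from collections import Counter

# Hypothetical follow graph: each user maps to the set of accounts they follow.
follows = {
    "u1": {"A", "B"},
    "u2": {"A", "B", "C"},
    "u3": {"A", "B"},
    "u4": {"D"},
}

def recommend(account, follows, top_n=3):
    """Rank other accounts by how many followers of `account` also follow them."""
    counts = Counter()
    for followed in follows.values():
        if account in followed:
            # Count every other account this follower also follows.
            counts.update(followed - {account})
    # Sort by co-follow count (descending), then by name so ties are stable.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:top_n]]

suggestions = recommend("A", follows)
```

Nothing in this sketch looks at a profile picture. Any resemblance among the suggested accounts would have to come from the follow patterns themselves, which is the point Faddoul goes on to make.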

But according to Faddoul, even if this is the case, the algorithm could still produce a racial bias.

“A risk is to reinforce a ‘coverage bias’ with a feedback loop,” Faddoul said. “If most popular influencers are say, blond, it’s will be easier for a blond to get followers than for a member of an underrepresented minority. And the loop goes on…”
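The feedback loop Faddoul describes can be sketched with a toy simulation. It assumes, purely for illustration, that a recommender only ever suggests the `top_k` most-followed accounts and that every suggested account gains the same number of new followers each round. The accounts, group labels, and numbers are hypothetical and do not reflect TikTok data.

```python
def simulate(followers, rounds=10, top_k=2, new_follows=100):
    """Each round, split new follows evenly among the top_k most-followed accounts."""
    followers = dict(followers)  # work on a copy
    for _ in range(rounds):
        ranked = sorted(followers, key=lambda a: (-followers[a], a))
        for account in ranked[:top_k]:
            followers[account] += new_follows // top_k
    return followers

def group_share(followers, groups, group):
    """Fraction of all follows that go to accounts in `group`."""
    total = sum(followers.values())
    in_group = sum(n for a, n in followers.items() if groups[a] == group)
    return in_group / total

# Hypothetical starting point: the minority account is only slightly behind.
start = {"a1": 120, "a2": 110, "b1": 100}
groups = {"a1": "majority", "a2": "majority", "b1": "minority"}

end = simulate(start)
share_before = group_share(start, groups, "minority")
share_after = group_share(end, groups, "minority")
```

Under these assumptions the minority account never enters the recommended set, so its share of all follows shrinks every round even though no rule mentions group membership.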

This is not the first time the company has found itself in hot water. Back in December, TikTok admitted it was burying content made by queer, fat, and disabled users.

H/T BuzzFeed News