Amazon’s Alexa is always listening, even when you don’t want her to be.

A woman on TikTok found out her partner was having an affair through the device’s history. Her video of the shocking receipts has reached 3 million views on the platform.


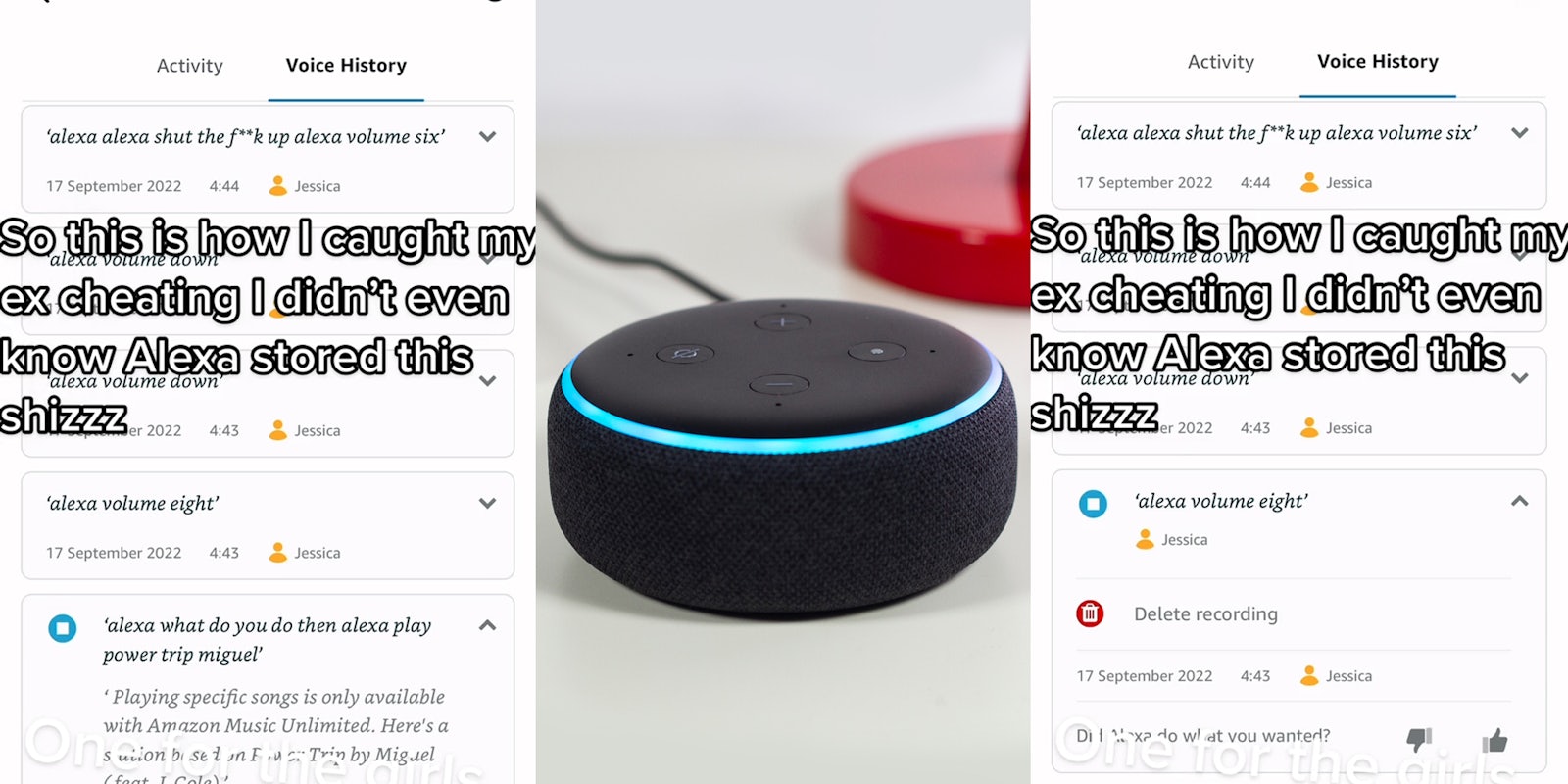

In the original video, user Jessica Lowman (@jessicalowman1) shows a screen recording of her household’s chat history with ‘Alexa,’ the virtual assistant technology by Amazon. Users can see seemingly innocuous quotes like, “Alexa play power trip miguel” and “Alexa volume down.”

But when Lowman clicks play on each recording sample, a man’s voice and a woman’s voice, presumably not Lowman’s, can be heard talking to the device, catching Lowman’s partner in the act with another woman.

‘Alexa’ users are still discovering the depths of the virtual assistant’s memory. The Washington Post reported in 2019 that Alexa keeps conversation fragments as part of the machine learning features of the device. Reporter Geoffrey Fowler found errant Downton Abbey clips and jokes from houseguests, but also more private information discussed inside his home.

“There were even sensitive conversations that somehow triggered Alexa’s ‘wake word’ to start recording, including my family discussing medication and a friend conducting a business deal,” he wrote.

Amazon says Alexa devices are always listening for their “wake word,” but that doesn’t mean they store everything in the cloud. Still, some might find relief in deleting the device’s voice history, which you can do by going into its dialogue settings. However, according to CNET, this doesn’t mean the text transcripts will go away.

“Amazon lets you delete those voice recordings, giving you a false sense of privacy. But the company still has that data, just not as a sound bite. It keeps the text logs of the transcribed audio on its cloud servers, with no option for you to delete them.

“Amazon said it erases the text transcripts from Alexa’s ‘main system,’ but is working on removing them from other areas where the data can travel,” the article states.

In Lowman’s comments section, many users joked the ‘Alexa’ cheating scandal showed the device had women’s best interests in mind.

“Girl code alexa,” one user wrote.

“Alexa is a city girl,” another commented.

Other users said they had the exact same ‘Alexa’ cheating revelations happen to them.

“This was how I found out he was seeing someone else. Can’t believe im not the only one!” one TikToker shared.

User @brkofficial99 thinks a trend has started. “Everyone gonna be gettin Alexa for Christmas,” they wrote.

The Daily Dot reached out to @jessicalowman1 via TikTok comment for this story.