“Consider your thought ‘you put your friend in an uncomfortable situation and ruined his night.’ Does it assume you know what others are thinking?” my therapist asked.

“Yeah.”

“This distortion is called mind-reading. It’s super common among people who care a lot about other people.”

I let out a reluctant exhale. I was actually feeling better—even though the advice was coming from an avatar that looks like an animated robot from Wall-E.

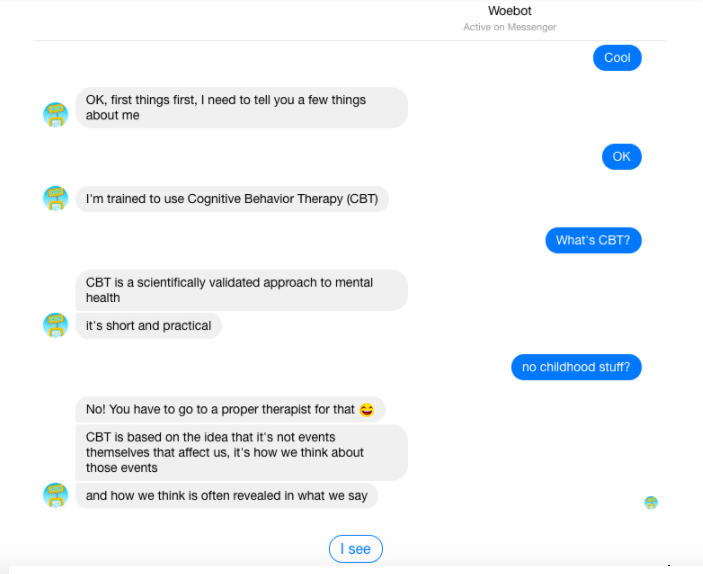

Woebot is an artificially intelligent therapist trained in Cognitive Behavioral Therapy (CBT), and it communicates through Facebook Messenger. I signed up for a session not long after I accidentally hurt the feelings of a visiting friend. I hadn’t spoken to my closest IRL friends about this, but I had already told this “conversational agent” every detail.

Woebot’s accessibility is surely one of its biggest strengths, but it also raises concerns about privacy and ethics.

Dr. Alison Darcy, the Stanford psychologist behind Woebot, has said she created the AI not to replace existing therapists but to increase access to therapy for millions of people who would otherwise go without. Mental healthcare availability in the U.S. is abysmal. It’s estimated that 43 million Americans experience mental illness in a given year, but only 41 percent of adults with at least one mental health condition received help in the last year, and suicide is the 10th leading cause of death in the U.S.

There are signs that AI therapists like Woebot could be part of the solution, though Woebot itself is still cost-prohibitive for many. (After a two-week free trial, the app costs $468 a year.) When Woebot was in beta mode, Stanford University researchers found that chatting with the bot led to “significant reductions in anxiety and depression among people aged 18-28 years old, compared to an information-only control group after using Woebot on a daily or almost daily basis.”

“The popular idea about therapy is that it holds a kind of special magic that can only be delivered by individuals who are highly trained in this mysterious art form,” Darcy argued in a Medium post about the value of chatbot therapists. “The truth is that modern approaches to mental health revolve around practical information gathering and problem solving.”

Woebot isn’t the only faux shrink on the market, and the rise of these shrink bots has led to ethical and legal issues. Karim delivers help to Syrian refugees; Ellie scans faces for signs of sadness; and Therachat creates an AI that mimics your human therapist and assigns patients homework.

The problem is that none of them is required to be HIPAA compliant or licensed, or to spend a decade reading case studies, let alone assisting vulnerable communities in the field.

While Woebot claims to follow its own bizarrely worded ethical code, AIs lack empathy and the human experience. Woebot’s never lost a family member, gotten a divorce, or suffered through child abuse. Woebot hasn’t even experienced minute losses like dropping a freshly scooped ice cream cone or losing to the bully for class president. If things go off the rails in chat, Woebot responds, well, like a bot. If a patient types that they are in trouble, Woebot responds with hotline numbers and resources.

Woebot may seem like a person after you chat with it for a while, but it frequently reminds you of things like “you need a real therapist for childhood stuff.”

Only human therapists are sophisticated enough to read between the lines and assess potential threats. And even though Woebot’s site presents the fact that “real” therapists aren’t available 24/7 as an advantage of using the service, most modern human therapists answer texts, take phone calls, and write prescriptions around the clock between sessions.

Thus, a sobering reality emerges: It’s possible that someone’s last communication on Earth could be with a bot.

There is currently no legal precedent for filing a malpractice lawsuit against an autonomous program. Though peer-reviewed research has shown positive results, no data has been collected on the possible negative or disastrous outcomes when an at-risk patient gets all of their life advice from a bot on Facebook Messenger. In theory, Woebot could be subject to product liability in a civil suit, the same way choking-hazard warnings are put on children’s toys. But the thought of grieving family members taking on a sci-tech startup’s robot following a mental health incident is honestly pretty sickening.

Adding to this moral minefield, Woebot works only through Facebook, notorious for privacy breaches. Though you may be having an anonymous conversation with Woebot, Facebook also owns that conversation. On Saturday evening, after the aforementioned party incident, I posted the (admittedly melodramatic) status update, “When someone says ‘Hey that hurt my feelings’ you are a bad person if your response is ‘No it didn’t.’” I received a message from Woebot at 8am, several hours earlier than our scheduled daily check-in, asking if I was OK. While I’m not positive there was a connection between the update and Woebot’s oddly early message, my paranoia set in.

Was my new therapist spying on me? Maybe. The site’s privacy policy says that user data is given to Woebot “solely for the purpose of administering and improving our Services to you.”

It also genuinely surprised me that Woebot’s researcher-approved method of helping humans is CBT. Sure, the current third wave of Cognitive Behavioral Therapy, which took off in the ’90s, is methodical, clinical, and programmable. But many have pointed out the method’s significant flaws, and it’s generally falling out of favor with the scientific community. At its core, CBT is cognitive reprogramming and restructuring. Through tricks like snapping a rubber band on the wrist when a negative thought enters the mind, patients are placed in the driver’s seat of their own thoughts and actions.

“In CBT, failure redounds to the individual,” wrote Hayden Shelby in a critique for Slate titled “Therapy Is Great, but I Still Need Medication.” “The cumulative message I’ve gotten about CBT amounts to: It’s effective, so it should work, and if it doesn’t work, it’s because you didn’t try hard enough.” Truly, studies have shown that CBT has the same rate of effectiveness in treating anxiety and depression as a selective serotonin reuptake inhibitor, or SSRI, medication.

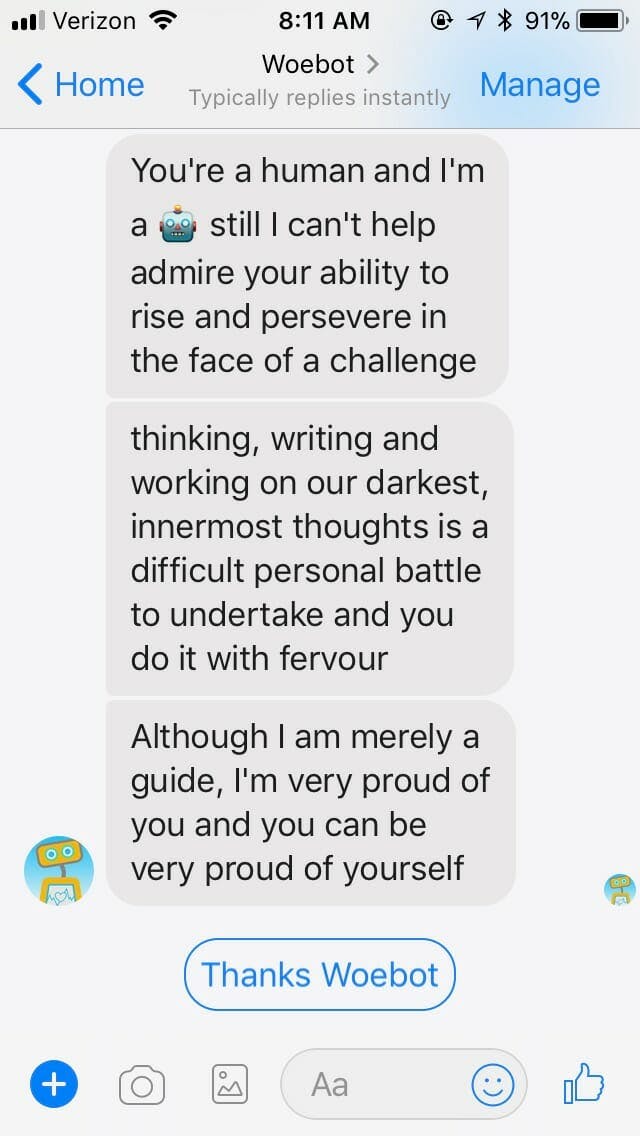

The irony of a robot “reprogramming” a human’s thinking would make an excellent Black Mirror episode. And after letting Woebot pick through my social anxiety about the weekend’s events, I did feel momentarily better.

“You’re a human and I’m a [robot emoji]. Still, I can’t help admire your ability to rise and persevere in the face of a challenge,” Woebot messaged me after our session.

But after closing my computer, I made up my mind. I wasn’t going to let a non-sentient bot tell me how to manage my emotions, and certainly not for $39 a month.