Twitter’s chief design officer acknowledged over the weekend that the algorithm the company uses to automatically crop photos can unintentionally prioritize white faces over Black ones.

Newsweek reports that ad hoc tests run by users first identified the issue. After someone tagged Chief Design Officer Dantley Davis in the thread, he agreed that the company needed to fix the problem.

The inquiry began when Colin Madland tried to figure out why Zoom kept removing a Black professor’s head whenever the professor used a virtual background. On Friday, Madland said he had determined that Zoom’s face-detection algorithm was to blame.

“Turns out @zoom_us has a crappy face-detection algorithm that erases Black faces…and determines that a nice pale globe in the background must be a better face than what should be obvious,” Madland tweeted.

Zoom responded by asking Madland and his colleagues to meet with its virtual background engineers to discuss the issue further.

But tweeting about the Zoom problem raised yet another question: Why did Twitter automatically crop the Black professor out of the preview of images showing him and Madland, who is white?

After some testing, Madland and others determined that the cropping was not an isolated incident. When Twitter user @NotaFile shared images of a Black man and a white man, the preview of both images focused on the white man. To see the Black man, you had to click on the image.

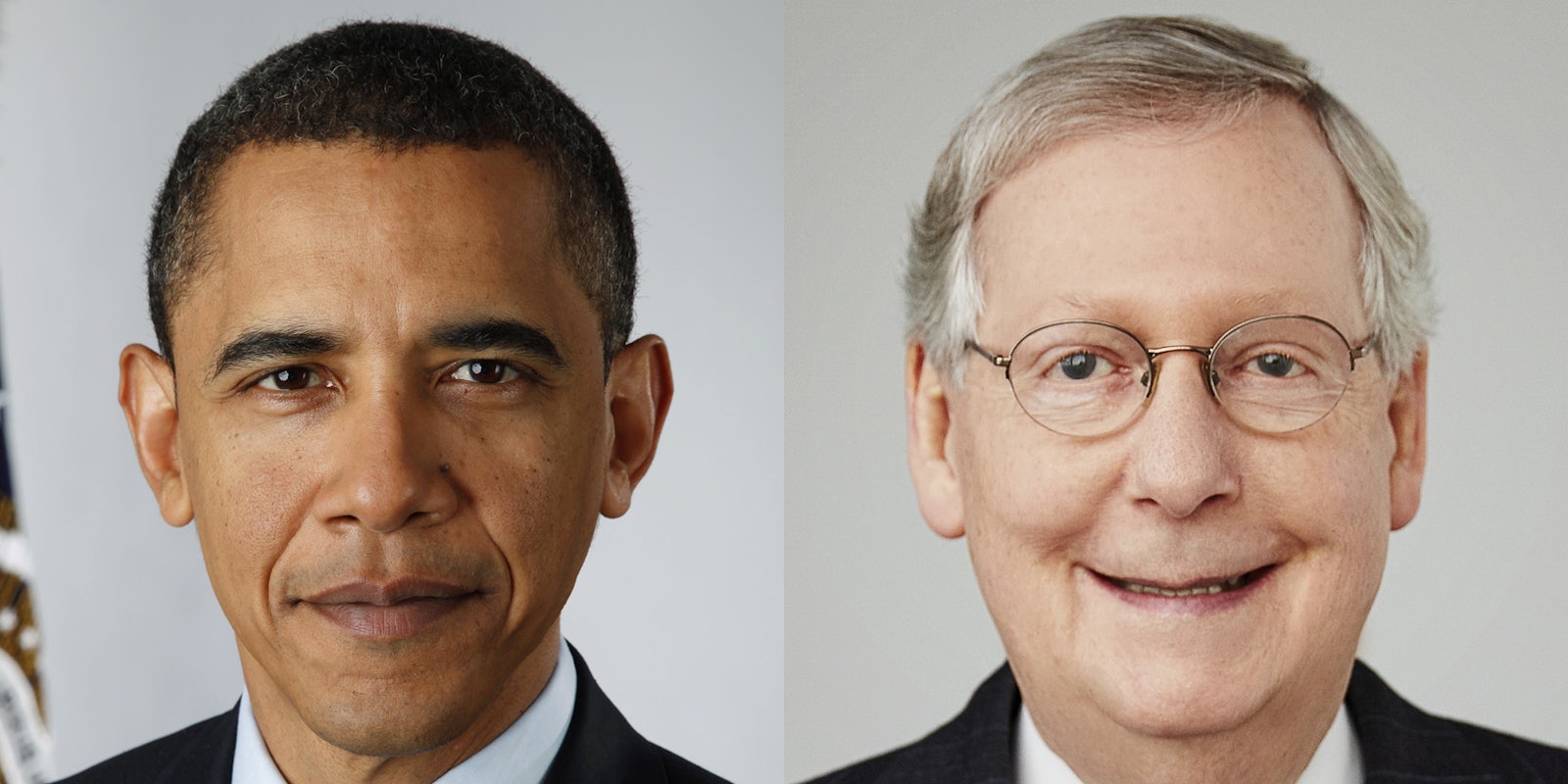

Others found that the algorithm cropped former President Barack Obama out of a photo of him and Senate Majority Leader Mitch McConnell (R-Ky.). That comparison post went viral.

Once the issue was identified and Davis was tagged in the thread, he took ownership of it.

“It’s 100% our fault. No one should say otherwise. Now the next step is fixing it,” he tweeted.

He also said that he had run his own experiments on the cropping issue.

Twitter’s cropping tool relies on a neural network, a form of artificial intelligence, that predicts the “saliency” of an image: which portions of it a viewer is likely to look at.

In a 2018 blog post, Twitter explained, “Academics have studied and measured saliency by using eye trackers, which record the pixels people fixated with their eyes. In general, people tend to pay more attention to faces, text, animals, but also other objects and regions of high contrast.”

This eye-tracking data is used to train the neural network to predict which regions of a new image people are likely to look at.

“The basic idea is to use these predictions to center a crop around the most interesting region,” Twitter wrote.
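To make the idea of “centering a crop around the most interesting region” concrete, here is a minimal Python sketch. It assumes a saliency model has already produced a grid of per-pixel scores; the function name `saliency_crop` and that score-grid input are hypothetical illustrations, not Twitter’s actual code, model output, or cropping logic.

```python
def saliency_crop(saliency, crop_h, crop_w):
    """Return (top, left, bottom, right) for a crop centered on the most salient pixel.

    Hypothetical sketch: `saliency` is assumed to be a 2D list of per-pixel
    scores from some saliency model; this is not Twitter's implementation.
    """
    h, w = len(saliency), len(saliency[0])
    if crop_h > h or crop_w > w:
        raise ValueError("crop is larger than the image")

    # Locate the single highest-scoring pixel (the first one wins on ties).
    best_r, best_c = max(
        ((r, c) for r in range(h) for c in range(w)),
        key=lambda rc: saliency[rc[0]][rc[1]],
    )

    # Center the crop on that pixel, then clamp it so it stays inside the image.
    top = min(max(best_r - crop_h // 2, 0), h - crop_h)
    left = min(max(best_c - crop_w // 2, 0), w - crop_w)
    return top, left, top + crop_h, left + crop_w


# Example: a 4x6 score grid whose most "interesting" pixel is at row 2, column 5.
scores = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 9],
    [0, 0, 0, 0, 0, 0],
]
print(saliency_crop(scores, 2, 3))  # (1, 3, 3, 6)
```

In this sketch the crop follows only the single highest score, so a region that scores slightly lower, such as a second face elsewhere in the photo, can fall entirely outside the preview.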

Many people were impressed with Twitter’s speedy response and willingness to immediately tackle the issue. Others suggested that the platform let users, rather than artificial intelligence, select which portions of an image to focus on.

Some wondered if Twitter had tested for racial bias. This is hardly the first time that technology has been biased in the way it handles images of people of color.

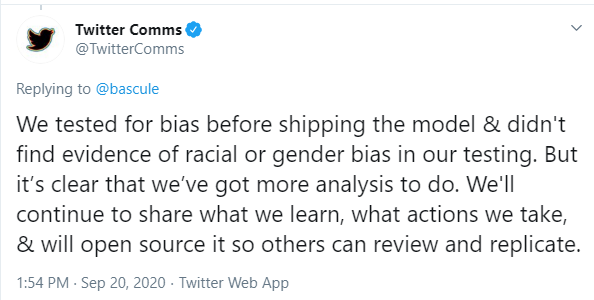

The company claims that it did test for bias.

“We tested for bias before shipping the model & didn’t find evidence of racial or gender bias in our testing,” the company tweeted. “But it’s clear that we’ve got more analysis to do. We’ll continue to share what we learn, what actions we take & will open source it so others can review and replicate.”

Twitter says it will publicly disclose its analysis of the issue and any changes it decides to implement to eradicate racial bias in the way its algorithm crops photos.