Twitter on Friday announced several steps it is taking to combat misinformation about the 2020 election as Election Day gets closer.

The company described the changes and their rollout in a blog post.

Perhaps the most prominent change will limit the reach of misleading posts from politicians. Twitter says that if tweets from a candidate, a campaign account, or certain other accounts are labeled as misinformation, users will have to tap through a warning to see them and won't be able to like or comment on them.

“We expect this will further reduce the visibility of misleading information, and will encourage people to reconsider if they want to amplify these tweets,” the company said in a blog post.

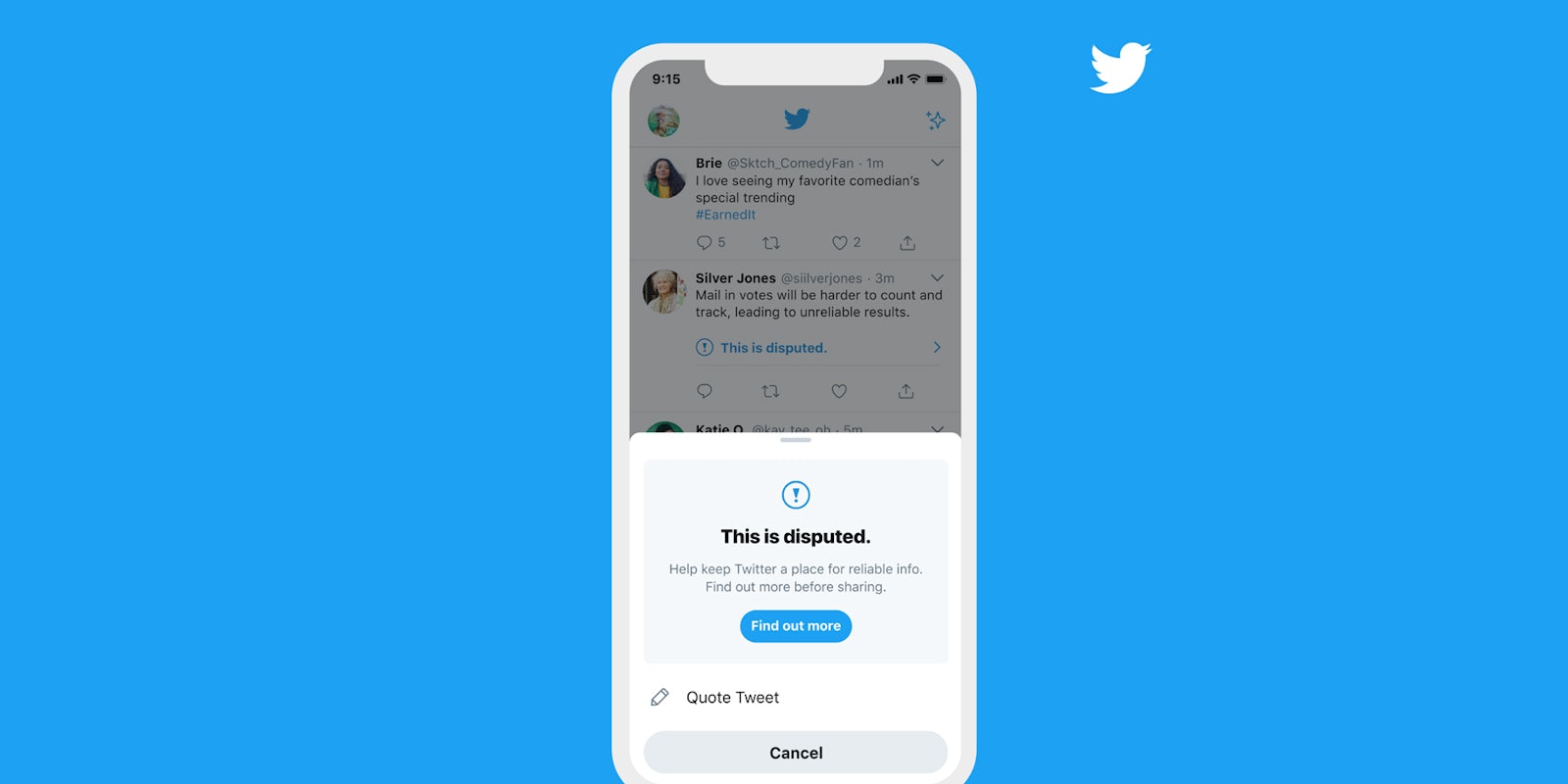

Another change targets users who try to retweet a post that has already been slapped with a misleading-information label. Starting next week, anyone who tries to retweet a labeled tweet will see a prompt saying “This is disputed. Help keep Twitter a place for reliable info. Find out more before sharing.”

Finally, from Oct. 20 through “at least the end of election week,” Twitter will automatically prompt people to add their own commentary to anything they try to retweet. The goal, Twitter says, is to “encourage everyone to not only consider why they are amplifying a tweet, but also increase the likelihood that people add their own thoughts, reactions and perspectives to the conversation.”

The company also reiterated that it will label tweets that prematurely and falsely claim victory for a candidate, and that it will remove tweets that call for violence or urge people to interfere with voting.

The changes could affect President Donald Trump, a growing number of whose tweets have been fact-checked, labeled, or flagged by Twitter for violating its policies, including the company’s misinformation policy.