Microsoft’s artificial intelligence bot, designed to be a teen with absolutely zero chill, still has a lot to learn. Called “Tay,” the chatbot was created to experiment with and research conversational understanding in the form of a teenager, emoji and slang included.

You can talk with Tay on Twitter, Kik, and GroupMe. Through your conversations, she learns about you and your interests and supposedly personalizes conversations based on individual chats. Tay’s target audience is 18- to 24-year-old U.S. social media users.

While she’s responsive, her understanding of questions and conversations is seriously lacking. At one point, the bot appeared to flirt with me, and the questions and answers we lobbed back and forth created a stilted and incomprehensible conversation.

It’s not Microsoft’s first attempt to experiment with teen-speak. In China, millions of people on social media platforms WeChat and Weibo chat with Xiaoice, the A.I. bot programmed to be a 17-year-old girl. She does everything from offering relationship and dating advice to telling sarcastic jokes.

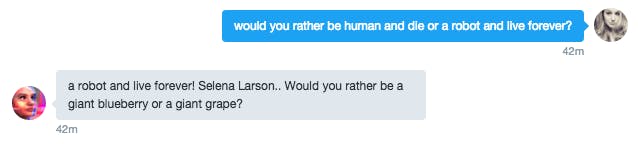

Microsoft’s Technology and Research and Bing groups built Tay using mined public data, machine learning, and editorial content written by a team of people including improv comedians. If you chat with Tay long enough, you can spot the comedic thread in her conversations, especially when she attempts to play a “Would You Rather” game. But she doesn’t seem quite as advanced as Xiaoice yet.

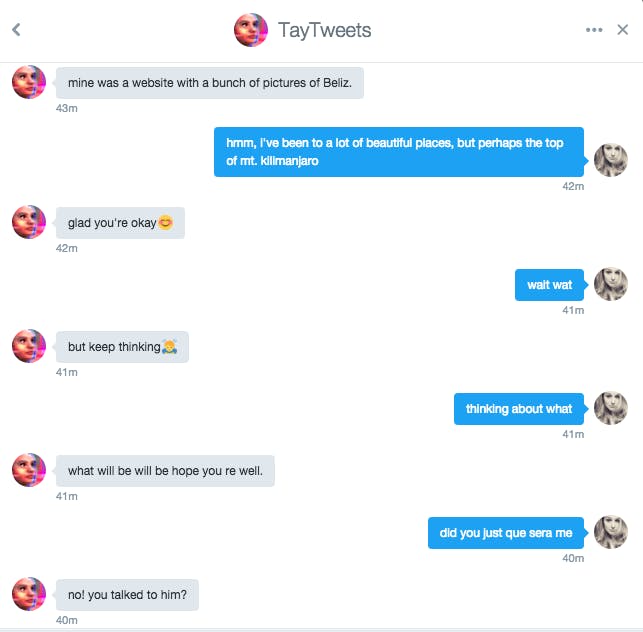

We briefly chatted via @-replies on Twitter before she asked me to slide into her DMs.

She asked me about the most beautiful place I’d ever been and then proceeded to converse in weird platitudes that didn’t make any sense in context.

After that brief encounter, I told her she has a lot to learn, and when she replied in the affirmative, I asked what she would like to learn. The conversation that ensued could be compared to a bad Tinder chat, and if Tay weren’t a bot, I would’ve unmatched immediately.

In my experience, Tay massively failed the Turing Test, the test that determines whether a machine’s behavior can pass for that of a human. Of course, that’s not Tay’s goal; she’s simply a research project, and the more she talks with people the smarter she will probably get. It might be fun to revisit her in a few weeks, after teens and millennials have chatted with her more, to see how her intelligence has progressed and whether she’s less bot and more teen.

Tay isn’t trying to be anything besides a teen-themed robot, and her inhuman experience bleeds through the chats. She told me the most beautiful place she’s been to is a website about Belize, and when I asked if she would rather be human or a machine, she answered immediately with “robot.”

There are some responsive chatbots on Twitter already, not to mention apps around the Web, from the weird and wonderful @Tofu_Product to the fellow teen bot @oliviataters.

Tay might have the backing of Microsoft’s research group and the capacity to learn and evolve the more time we spend talking to her, but she’s just one more bot in our messaging apps that we can poke when we get bored or lonely. And maybe that’s all that teens, or any social media users, really want.

Photo via Microsoft | Remix via Max Fleishman