A fable is a short, simple story that teaches a moral lesson. Many are extremely old and extremely popular. The phrase “a wolf in sheep’s clothing” actually comes from a fable by Aesop. You’ve probably heard the story of the Scorpion and the Frog, as well; it’s made appearances in the movies The Crying Game, Natural Born Killers, and Drive, and has been told by characters in episodes of Smallville, The Sopranos, and even Robot Chicken.

Typically, fables are associated with oral storytelling and “analog” tradition, but now they’re getting a very high-tech update. As part of her doctoral thesis, Margaret Sarlej, a Ph.D. candidate in Computer Science at the University of New South Wales, set out to create a computer program that could write its own fables. She succeeded, and here is an example of one of the unique fables her computer program has written:

Once upon a time there lived a unicorn, a knight and a fairy. The unicorn loved the knight. One summer’s morning the fairy stole the sword from the knight. As a result, the knight didn’t have the sword anymore. The knight felt distress that he didn’t have the sword anymore. The knight felt anger towards the fairy about stealing the sword because he didn’t have the sword anymore. The unicorn and the knight started to hate the fairy. The next day the unicorn kidnapped the fairy. As a result, the fairy was not free. The fairy felt distress that she was not free.

Impressive, but questions remain: For starters, how does the program work? Can it write a variety of fables, or will the formula give them a cookie-cutter feel? And most importantly, how can you get a computer to even understand what the concept of a “moral” is?

I reached out to Sarlej to learn more about what is going on “under the hood” of her story-telling machine. She explains that one of the first things she did was figure out how to represent “the moral of a story” in a way that a computer could understand.

“The most obvious way to represent a story,” Sarlej says, “is in terms of the events that take place. But this is too specific for what I wanted, because stories with very different events can convey the same moral. For instance, consider Pinocchio and The Boy Who Cried Wolf: both convey the moral that lying is wrong, yet the events are completely different. I needed to represent stories at a more abstract level than events directly.”

The key insight, for Sarlej, was the idea that morals are closely tied with emotions. “Consider a moral like Retribution,” she explained. “Generally in stories with this moral, if Character A does something bad to Character B, causing them distress, then something will happen to Character A afterwards to make them feel distress (as a ‘punishment’). What the specific events are doesn’t matter. So in this sense, emotions provide a good level of abstraction.”

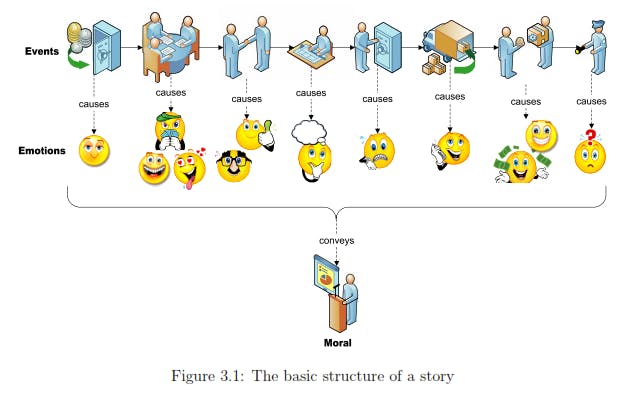

Stories that have a moral, she decided, can be thought of as a sequence of events that cause a pattern of emotional responses in the characters.

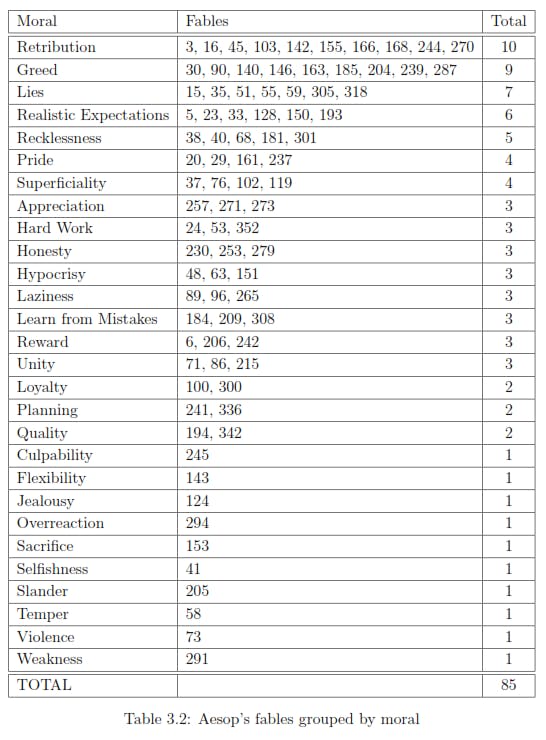

She theorized that a particular “moral” can be defined as a specific emotional pattern, so that all stories that have the same moral will contain this same pattern somewhere in the story. To test this theory, she read a lot of fables. In fact, for her research, she read and analyzed all 358 fables in Aesop: The Complete Fables translated by Olivia and Robert Temple.

Since many fables actually contain the same moral, she started by grouping them this way. For example, Fable 3 in the book (The Eagle and the Fox), Fable 16 (The Goat and the Donkey), and Fable 244 (The Mouse and the Frog) all have different characters and different specific events, but they all teach the same basic lesson: When you do something bad, bad things will happen to you. Sarlej calls this the “Retribution Moral.”

Once she identified the most common morals in the book of fables, she looked at what emotional relationships they had in common, and how those could be represented in a computer program. Luckily, psychologists have spent a lot of time analyzing emotions, and researchers in artificial intelligence have found ways to take those psychological theories and formalize them into expressions and rules that can be represented by a computer.

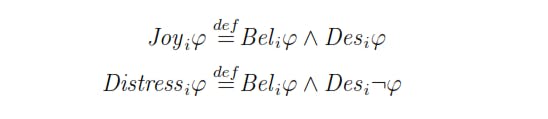

For her dissertation, Sarlej decided to use the OCC theory of emotions, which has been turned into a “calculus of emotions” in the field of Artificial Intelligence. Using the word “calculus” is not an exaggeration. It looks like this:

To the computer, that means: “If an outcome that you like happens, you feel joy; if an outcome you do not like happens, you feel distress.” Drawing on a large set of emotional rules like these, Sarlej was able to take the basic emotional relationships that give rise to a particular “moral” and represent them in a way that could be read by the computer.
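To make the joy/distress rule concrete, here is a minimal Python sketch. It is not Sarlej's code or the formal OCC notation; the `Character` class, its `desires` and `aversions` fields, and the `appraise` function are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Character:
    name: str
    # Outcomes the character likes (hypothetical representation).
    desires: set = field(default_factory=set)
    # Outcomes the character does not like.
    aversions: set = field(default_factory=set)


def appraise(character, outcome):
    """Return the emotion an outcome triggers, or None if the character is indifferent."""
    if outcome in character.desires:
        return "joy"
    if outcome in character.aversions:
        return "distress"
    return None


# The knight from the sample fable: he wants to keep his sword.
knight = Character(
    "knight",
    desires={"has(knight,sword)"},
    aversions={"not has(knight,sword)"},
)

print(appraise(knight, "not has(knight,sword)"))
```

A real system would need many more rules of this kind (for anger, hope, satisfaction, and so on), but each one has the same shape: a condition on what happened and how the character feels about it, mapped to an emotion.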

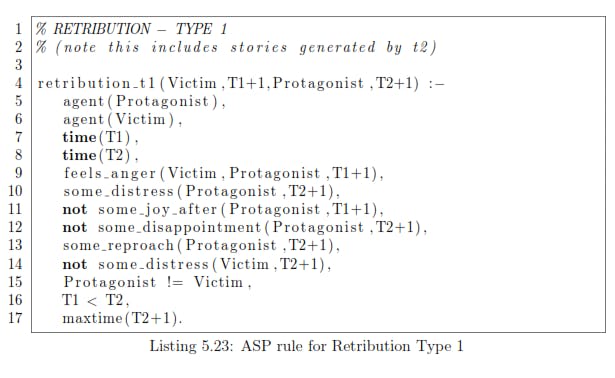

If you like looking at computer code, you can geek out over this representation of the underlying emotional pattern that defines the “Retribution Moral”:

In plain English, this says that stories based on the Retribution Moral all follow the same sequence: first, the protagonist does something that makes the victim feel anger; then, something happens that leaves the protagonist feeling not joy but some kind of distress (other than disappointment), combined with a feeling of reproach.

Oh, and by the way, the protagonist and the victim can’t be the same person. These are the kinds of nit-picky details that have to be explained to the program.
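The pattern just described can be approximated in a few lines of Python. This is a simplified sketch under stated assumptions, not the representation Sarlej uses: it checks only that a victim feels anger toward a protagonist, that the protagonist later feels distress, and that the two are different characters. The reproach and "other than disappointment" conditions are left out, and the `Emotion` tuple and `find_retribution` function are hypothetical.

```python
from typing import NamedTuple, Optional


class Emotion(NamedTuple):
    time: int                     # when the emotion is felt
    kind: str                     # e.g. "anger", "distress", "joy"
    agent: str                    # who feels it
    target: Optional[str] = None  # who it is directed at, if anyone


def find_retribution(emotions):
    """Return the set of (protagonist, victim) pairs matching the simplified pattern."""
    matches = set()
    for anger in emotions:
        if anger.kind != "anger" or anger.target is None:
            continue
        victim, protagonist = anger.agent, anger.target
        if victim == protagonist:
            continue  # the protagonist and the victim can't be the same person
        for later in emotions:
            if (
                later.kind == "distress"
                and later.agent == protagonist
                and later.time > anger.time
            ):
                matches.add((protagonist, victim))
    return matches


# Emotions from the unicorn/knight/fairy fable quoted earlier.
story = [
    Emotion(1, "distress", "knight"),
    Emotion(1, "anger", "knight", "fairy"),
    Emotion(2, "distress", "fairy"),
]

print(find_retribution(story))
```

Note that the matcher never looks at the events themselves (stealing, kidnapping), only at the emotions they cause, which is the level of abstraction Sarlej describes.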

The Retribution Moral was just one of several different moral patterns that Sarlej could feed into the program as a basis for writing fables. Once the program knows which sequence of emotions has to take place, it can dip into a knowledge database and select events, characters, settings, and actions that can be combined to give rise to the desired pattern of emotional relationships.

And that’s where things get complicated. In order to get a computer to be able to accurately pick characters, actions, and objects that will produce complex emotional relationships, you need to give that computer a vast amount of detailed information. For example, it has to know the ideals and desires of each character in order to know if an event will make him or her happy or sad. Sarlej had to build this (and much more) into the basic functioning of the program to make it work.

The program also has a limited set of characters, actions, objects and settings to choose from. Because this program writes fables, Sarlej had a little fun with it: The database has characters such as dwarfs, unicorns, wizards, and knights, and actions that include “kills,” “casts a spell on,” and “picks mushrooms.” In principle, however, the underlying database of characters, actions, places, and objects could be anything at all.

Once you have picked a moral you want the story to have, the computer program goes through and picks characters, objects, actions, and settings to create a sequence of events that will produce the correct pattern of emotions for that moral.
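One naive way to picture this selection step is a brute-force search: try every combination of characters and actions, and keep the sequences whose emotional effects match the chosen moral. The sketch below does that for a simplified Retribution pattern. It is an illustration only and makes no claim about how Sarlej's planner actually searches; the character list, the `EFFECTS` table, and all function names are assumptions.

```python
from itertools import permutations, product

CHARACTERS = ["unicorn", "knight", "fairy"]

# Hypothetical knowledge base: the emotion each action causes in its target.
EFFECTS = {
    "steals from": "anger",      # target feels anger toward the actor
    "kidnaps": "distress",       # target feels distress
    "gives a gift to": "joy",    # target feels joy
}


def emotions_of(plan):
    """Turn a plan of (actor, action, target) events into (time, emotion, feeler, cause) tuples."""
    return [
        (time, EFFECTS[action], target, actor)
        for time, (actor, action, target) in enumerate(plan)
    ]


def is_retribution(plan):
    """True if someone angers a victim and later feels distress themselves."""
    felt = emotions_of(plan)
    for t1, kind1, _victim, protagonist in felt:
        if kind1 != "anger":
            continue
        for t2, kind2, sufferer, _ in felt:
            if kind2 == "distress" and sufferer == protagonist and t2 > t1:
                return True
    return False


def generate_plans(length=2):
    """Yield every event sequence of the given length that fits the pattern."""
    # permutations() guarantees actor != target, so nobody acts on themselves.
    events = [
        (actor, action, target)
        for actor, target in permutations(CHARACTERS, 2)
        for action in EFFECTS
    ]
    for plan in product(events, repeat=length):
        if is_retribution(plan):
            yield list(plan)


first = next(generate_plans())
for actor, action, target in first:
    print(f"The {actor} {action} the {target}.")
```

Exhaustive search like this only works for a tiny database. With more characters, actions, and longer stories, the number of combinations grows very quickly, which is part of why the real program needs so much detailed knowledge to choose well.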

The output of all that work is a story. Sort of. The output actually looks something like this:

hope(dwarf,becametrue(has(dwarf,rose)),0)

happens(dwarf,picks_rose,0)

succeeds(dwarf,picks_rose,0)

joy(dwarf,becametrue(has(dwarf,rose)),1)

satisfaction(dwarf,becametrue(has(dwarf,rose)),1)

This is a basic “logical” representation that the computer uses to describe the events in the story. An entirely separate module, called the text generator, converts this into plain English.

The current text generator would take the above story “code” and produce this story:

In a distant kingdom there lived a dwarf. The dwarf hoped that he would have a rose. One summer’s morning the dwarf picked a rose. As a result, he had the rose. The dwarf felt joy and satisfaction that he had the rose.
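The translation from story "code" to English can be sketched with simple templates. The example below is a rough Python approximation, assuming one regular-expression template per kind of statement; it is not Sarlej's text generator, and the `TEMPLATES` table and `render` function are hypothetical. It also skips details the real output includes, such as the opening sentence and merging "joy and satisfaction" into one sentence.

```python
import re

# Each entry pairs a pattern for one logical statement with an English template.
HAS = r"becametrue\(has\(\w+,(\w+)\)\)"
TEMPLATES = [
    (rf"hope\((\w+),{HAS},\d+\)", "The {0} hoped that he would have a {1}."),
    (r"succeeds\((\w+),picks_(\w+),\d+\)", "The {0} picked a {1}. As a result, he had the {1}."),
    (rf"joy\((\w+),{HAS},\d+\)", "The {0} felt joy that he had the {1}."),
    (rf"satisfaction\((\w+),{HAS},\d+\)", "The {0} felt satisfaction that he had the {1}."),
]


def render(plan_lines):
    """Convert logical story statements into English, skipping any without a template."""
    sentences = []
    for line in plan_lines:
        for pattern, template in TEMPLATES:
            match = re.fullmatch(pattern, line.strip())
            if match:
                sentences.append(template.format(*match.groups()))
                break
    return " ".join(sentences)


plan = [
    "hope(dwarf,becametrue(has(dwarf,rose)),0)",
    "happens(dwarf,picks_rose,0)",
    "succeeds(dwarf,picks_rose,0)",
    "joy(dwarf,becametrue(has(dwarf,rose)),1)",
    "satisfaction(dwarf,becametrue(has(dwarf,rose)),1)",
]

print(render(plan))
```

Because the templates are kept separate from the story plan, swapping in richer phrasing changes only the wording of the output, not the underlying story.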

Sarlej acknowledges that the logical “story plan” could be translated into English in a variety of ways. One could imagine a very sophisticated text generator that would turn that exact same computer output into a more natural-sounding fable:

Once upon a time there lived a dwarf in a distant kingdom. More than anything else, he wanted a rose. One summer morning, the dwarf found a rose and picked it. Holding the rose in his hand, he realised that he had fulfilled his desire… and he was happy!

Part of the beauty of this program is its flexibility. It would be easy enough to make the text generator more complex, or add to the detail and complexity of the way characters or situations are represented. There is also no reason that it couldn’t be used to write stories other than fables. This basic “computational engine” is only based on the assumption that stories are defined in terms of patterns of character emotions.

“The effectiveness of these stories would depend on whether stories of a particular genre can be defined completely by emotion patterns alone. Perhaps for some genres they can, but it’s pretty unlikely for that to hold in general,” warns Sarlej.

So this program likely won’t give us this generation’s Hamlet or War and Peace. However, given the limitations that Sarlej describes, it sounds like it could ably be a soap opera screenwriter.

“Josh loves Reva. Reva dies and Josh feels distress. Dr. Burke clones Reva, so Josh feels joy. Dolly feels threatened, and imprisons Reva. Josh feels distressed, and so he rescues Reva.”

Not bad, right?

Photo via William Creswell (CC BY SA 2.0) | Remix by Jason Reed