Earlier this week, the Los Angeles area was shaken by an earthquake. However, it was a news article about the tremor that sent shockwaves across the journalism world.

Here’s the article in question, which was published to the Los Angeles Times site shortly after the shaking subsided:

> A shallow magnitude 4.7 earthquake was reported Monday morning five miles from Westwood, California, according to the U.S. Geological Survey. The temblor occurred at 6:25 a.m. Pacific time at a depth of 5.0 miles.
>
> According to the USGS, the epicenter was six miles from Beverly Hills, California, seven miles from Universal City, California, seven miles from Santa Monica, California and 348 miles from Sacramento, California. In the past ten days, there have been no earthquakes magnitude 3.0 and greater centered nearby.
>
> This information comes from the USGS Earthquake Notification Service and this post was created by an algorithm written by the author.

The article was created automatically by a computer program called Quakebot. According to its author, journalist/programmer Ken Schwencke, Quakebot was designed to monitor the data feed from the U.S. Geological Survey, which publishes information about earthquakes as soon as they happen, and then turn those reports into short news stories. Schwencke told Slate that the initial, computer-generated article was only meant as a quick placeholder for a much longer report, which was updated 71 times over the course of the day.
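To make that mechanism concrete, here is a minimal Python sketch of template-based story generation. It is not Quakebot's code: the `QuakeReport` fields, the `write_story`, `ap_number`, and `depth_label` helpers, and the roughly 43-mile (70 km) cutoff for calling a quake "shallow" are all hypothetical assumptions. It also skips fetching and parsing the USGS feed, starting instead from a record that has already been parsed.

```python
from dataclasses import dataclass

# AP style spells out whole numbers from one through nine.
SMALL_NUMBERS = {1: "one", 2: "two", 3: "three", 4: "four", 5: "five",
                 6: "six", 7: "seven", 8: "eight", 9: "nine"}


@dataclass
class QuakeReport:
    """Hypothetical, already-parsed earthquake record (not the real USGS schema)."""
    magnitude: float
    place: str            # reference location, e.g. "Westwood, California"
    distance_miles: int   # distance from the epicenter to that location
    when: str             # e.g. "Monday morning"
    local_time: str       # e.g. "6:25 a.m. Pacific time"
    depth_miles: float


def ap_number(n: int) -> str:
    """Spell out 1-9 as words; leave other numbers as digits."""
    return SMALL_NUMBERS.get(n, str(n))


def depth_label(depth_miles: float) -> str:
    # Assumed cutoff: call quakes shallower than ~43 miles (70 km) "shallow".
    return "shallow " if depth_miles < 43 else ""


def write_story(q: QuakeReport) -> str:
    """Fill a fixed two-sentence template with values from the report."""
    return (
        f"A {depth_label(q.depth_miles)}magnitude {q.magnitude:.1f} earthquake "
        f"was reported {q.when} {ap_number(q.distance_miles)} miles from {q.place}, "
        "according to the U.S. Geological Survey. "
        f"The temblor occurred at {q.local_time} at a depth of {q.depth_miles:.1f} miles."
    )


if __name__ == "__main__":
    report = QuakeReport(magnitude=4.7, place="Westwood, California",
                         distance_miles=5, when="Monday morning",
                         local_time="6:25 a.m. Pacific time", depth_miles=5.0)
    print(write_story(report))
```

Fed the values from the Los Angeles Times example, this sketch reproduces the story's first paragraph word for word. The hard part of a real system lies elsewhere: pulling and validating the live feed, choosing which nearby cities to mention, and checking recent seismic history.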

The initial reaction among many journalists was a combination of “whoa, computers can write news stories?!” and “uh oh, robots are coming for our jobs!”

While the quake story was the first piece of algorithmic journalism to grab significant attention, computers have been independently turning out stories for years. In fact, odds are you’ve already read a number of articles written by computer programs without noticing.

A handful of companies have created algorithms that can take information pulled from data feeds and transform it into highly readable copy that is almost indistinguishable from content written by flesh-and-blood human beings.

One of the most prominent firms is North Carolina-based Automated Insights. “When we first started three years ago, no one was doing anything like this,” said Automated Insights Vice President Joe Procopio. “We were pariahs in the news business.”

The point, he insists, isn’t to create cheap, entirely robotic newsrooms. Instead, it’s to free reporters from writing boring, labor-intensive stories so they can focus on the kind of interesting investigative pieces that algorithms can’t convincingly put together, because those stories don’t rely solely on highly structured information like sports scores or stock market performance.

Procopio says much of the initial stigma is rapidly fading, especially in light of a recent study showing test subjects were largely unable to tell the difference between an article written by Automated Insights’ algorithm and one written by a real journalist. In fact, the article written by the software was rated as more “informative” and “trustworthy” than the human-created piece.

“When someone isn’t focused on determining the authorship of a piece of content, they’re not going to notice if it was written by an algorithm 99 percent of the time,” Procopio boasted, predicting that, in a few years’ time, there will likely be significant growth in the percentage of hard news stories generated algorithmically.

Procopio concedes that many of Automated Insights’ clients, a group that includes organizations like Yahoo and the Associated Press, often don’t disclose when a given article was written by an algorithm. That’s because many media companies are just as uncomfortable admitting a piece of content was written by an external third party as they are admitting it was written by a robot.

To get a sense of what algorithmic journalism looks like in practice, I’ve compiled a few clips of stories written by Automated Insights’ computers. The takeaway: it’s genuinely difficult to tell whether the article you’re reading is just a bit dry or was written by a computer program.

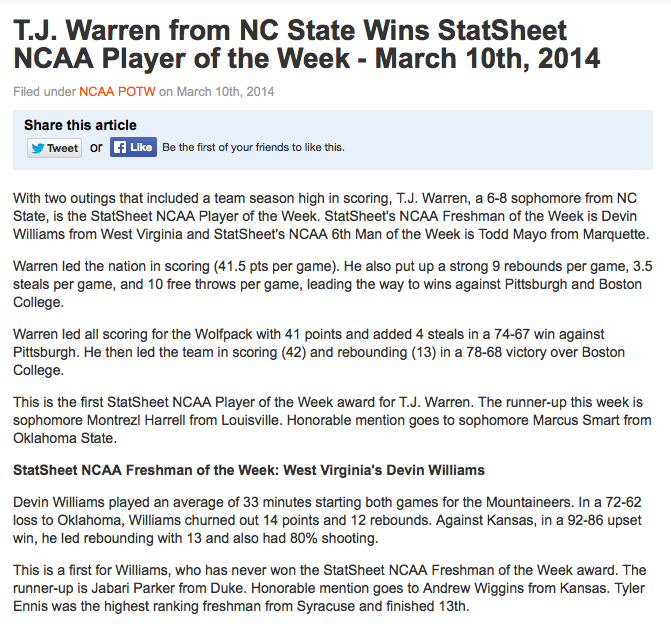

Here’s a clip from an article that Automated Insights’ Statsheet program created highlighting a college athlete’s recent accomplishments for a recurring “Player of the Week” feature:

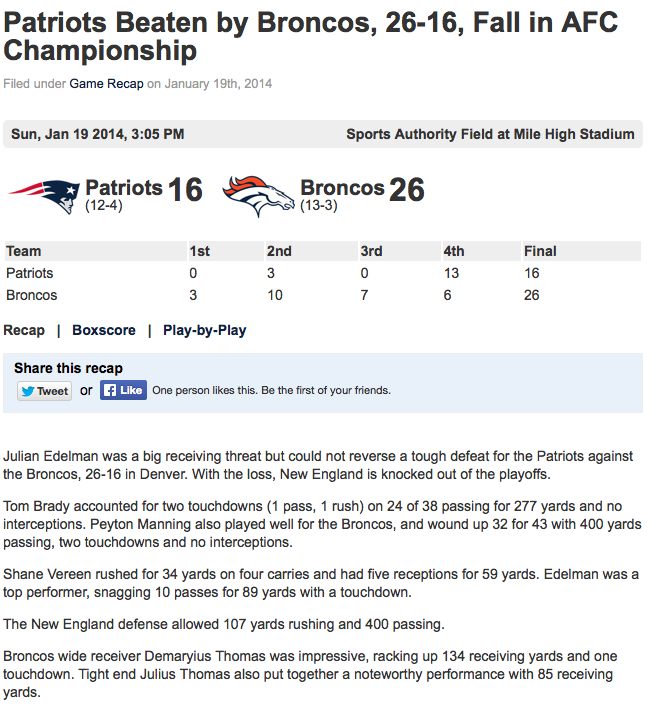

This article is from Statsheet’s popular Pat’s Huddle blog about the New England Patriots’ season-ending loss to the Denver Broncos in January’s AFC Championship game:

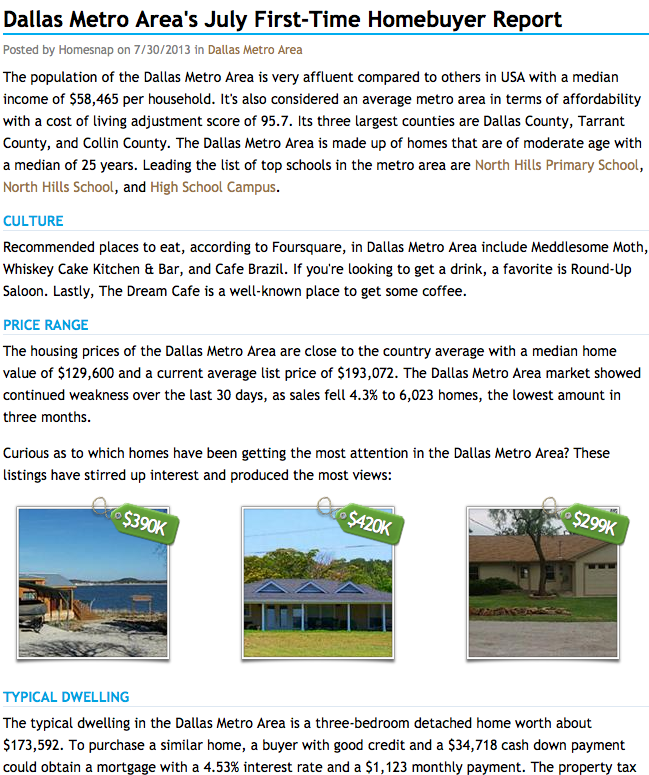

This article from real estate site Homesnap is a guide for first-time homebuyers looking to enter the Dallas, Texas, housing market:

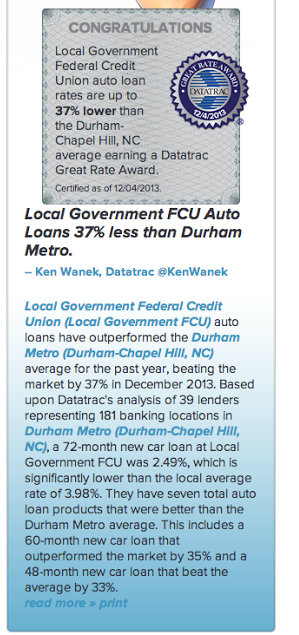

And this piece of content was created by Automated Insights’ algorithm for finance information site Datatrac:

If you randomly stumbled across any of these pieces of content, could you tell they were created by a computer?

The larger question probably is: would you care?

Photo by Mirko Tobias Schaefer/Wikimedia Commons