On Aug. 4, Las Vegas hosted the final round of DARPA’s cybersecurity capture-the-flag competition, dubbed the Cyber Grand Challenge. With nearly $4 million in prizes on the line, teams competed in real time to find, diagnose, fix, and exploit software flaws in a setting reminiscent of a scene from The Matrix. But the competition was different from any other cyber-CTF event: It was the first to feature completely automated, bug-hunting machines.

The idea of AI-aided cybersecurity has been around for a while. Hackers and security experts develop automated and machine-learning-based tools and software to take on some of the time-consuming tasks of their job, such as probing for known security holes and vulnerabilities and identifying malicious behavior. But there’s always a human brain that is finding new flaws and planning attacks and defense strategies. CGC was the first manifestation of robots taking on the whole gamut of cyber-warfare, trekking into uncharted territory, automatically spotting and plugging security holes while digging behind enemy lines to find and exploit unknown vulnerabilities.

The implications may be huge for the future of cybersecurity.

What is the Cyber Grand Challenge?

The Cyber Grand Challenge (CGC) was developed by DARPA in 2013 as the “world’s first automated network defense tournament.” The goal was to help spur the development of machines that can take on fundamental security tasks previously tied to humans, such as fuzzing, dynamic analysis, and sandboxing. After three years of research, development, and qualification rounds, seven teams made it to the finals, which were held in the ballroom of the Paris hotel in Las Vegas, where thousands of eager spectators came to catch a glimpse of what the future of cybersecurity might look like.

Participants developed systems that can take on both defensive and offensive cybersecurity tasks without the help of humans. Here’s how it works: Contestants are assigned servers running arbitrary code. Each hack-bot is supposed to protect its charge by finding and patching its vulnerabilities while at the same time making sure it stays up and running. Simultaneously, each hack-bot has to scan opponent servers for vulnerabilities and exploit them to disrupt their operations. Meanwhile, the development team sits in a cordoned-off area, watching its bot battle opponents from the sidelines.
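For readers who want a feel for the defensive half of that loop, here is a deliberately tiny Python sketch of "find a flaw, patch it, keep the service running." Everything in it is hypothetical and invented for illustration: `vulnerable_service`, `fuzz`, and `patch` are stand-ins, and the planted bug is trivial. The actual CGC machines worked on compiled binaries and were far more sophisticated than this.

```python
import random

def vulnerable_service(data: bytes) -> bytes:
    """Toy stand-in for a contest program: echoes input, but mishandles long input."""
    buffer = bytearray(8)              # fixed-size buffer
    for i, byte in enumerate(data):
        buffer[i] = byte               # raises IndexError once len(data) > 8 (the planted flaw)
    return bytes(buffer[:len(data)])

def fuzz(service, rng, attempts=1000, max_len=16):
    """Throw random inputs at the service; return the first one that crashes it."""
    for _ in range(attempts):
        data = bytes(rng.randrange(256) for _ in range(rng.randrange(max_len + 1)))
        try:
            service(data)
        except Exception:
            return data                # a crashing input is evidence of a bug
    return None

def patch(service, limit=8):
    """Wrap the service with an input-length check, a crude stand-in for a binary patch."""
    def patched(data: bytes) -> bytes:
        return service(data[:limit])   # truncate instead of overflowing
    return patched

rng = random.Random(0)                 # fixed seed so the run is repeatable
crash_input = fuzz(vulnerable_service, rng)
patched_service = patch(vulnerable_service)

print("crashing input length:", len(crash_input))
print("patched service survives it:", patched_service(crash_input) == crash_input[:8])
print("normal traffic still works:", patched_service(b"hello") == b"hello")
```

The last two checks mirror the two things a hack-bot has to get right at once: the patch must stop the crash, and the service must keep answering legitimate requests.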

The CGC final was played in 96 timed rounds over a total of eight hours, during which the machines found a total of 650 software bugs and made 421 corrections.

The winner was Mayhem, a cyber-reasoning platform developed by ForAllSecure, a Carnegie Mellon University spin-out company based in Pittsburgh. A $2 million prize went to the Mayhem team, a handsome recompense for its three-year efforts.

Second-place winner Xandra, developed by the TECHx team from New York and Virginia, earned $1 million, and the third-place team, Shellphish from California, raked in $750,000 for the performance of its hack-bot, Mechanical Phish.

What are the implications?

Although efforts to create fully autonomous cybersecurity bots are still in their early stages, the prospects are significant. If artificial intelligence and machine learning can identify and patch security holes faster than humans can, developers and security experts will be far better equipped to seal their systems against attacks. Software can ship with fewer flaws from the beginning, and analysts will be able to beat cybercriminals to the undiscovered security flaws in their own systems.

This is a step beyond fuzzing work done by tech giants such as Microsoft and Google, and it can serve to even the odds between hackers and defenders, becoming an important component for the security of our increasingly connected and software-oriented lives. Attackers have the advantage of only having to find one vulnerability, whereas defenders have to win every battle and patch every hole. Technologies such as those developed in the course of the CGC can help neutralize this advantage.

“Cyber Grand Challenge is here to put hackers out of work,” Andrew Ruef, a researcher at Trail of Bits, said last year.

But there’s a flip side to this coin: The same technology can be used by malicious actors in the reverse order—and the effects can be devastating.

The Electronic Frontier Foundation’s Nate Cardozo published a blog post on the same day as the CGC final, in which he praised DARPA’s initiative but also warned against the threats it might create. Cardozo describes a scenario in which a worm uses the CGC concept to automatically discover zero-day exploits—software vulnerabilities that haven’t been reported and patched before—and devise new ways to attack computers and other connected devices. Unfortunately, an automated patching solution wouldn’t be a perfect defense, because not all devices can be patched easily. Of special concern are smart appliances, such as connected doorbells, fridges, kettles and even children’s toys, which continue to grow in numbers at a chaotic pace while lacking basic security features.

This doesn’t mean that efforts such as CGC should be stopped (or can be stopped at all). Instead, as Cardozo put it, researchers should proceed cautiously and be conscious of the risks.

“Until our civilization’s cybersecurity systems aren’t quite so fragile,” he wrote, “we believe it is the moral and ethical responsibility of our community to think through the risks that come with the technology they develop, as well as how to mitigate those risks, before it falls into the wrong hands.”

Are bug-hunting bots ready to compete with human hackers?

While the prospect of hack-bots fighting cyberwars is indeed exciting (and spooky), the bots still have a long way to go before they can match human hackers. Mayhem, the CGC champion, was immediately invited to the annual CTF tournament at the neighboring hacker convention Def Con, where it competed against human hackers.

Mike Walker, CGC’s manager and a respected CTF player, didn’t expect Mayhem to finish well, and stated so in a press release: “Any finish for the machine save last place would be shocking.” While Mayhem was trounced as predicted, it didn’t finish last and managed to best some of the contestants, a notable feat considering that Def Con’s contestants are among the world’s best.

Walker admits that challenges like CGC aren’t the right solution to every problem, and security analysts cannot be replaced by AI yet. For the moment, the results can be used to augment the work that humans are doing by assisting analysts in finding security holes faster and helping developers write code that’s more secure. The mere fact that machines are accomplishing so much by themselves is a leap forward, however, and we can expect much more in the months and years to come.

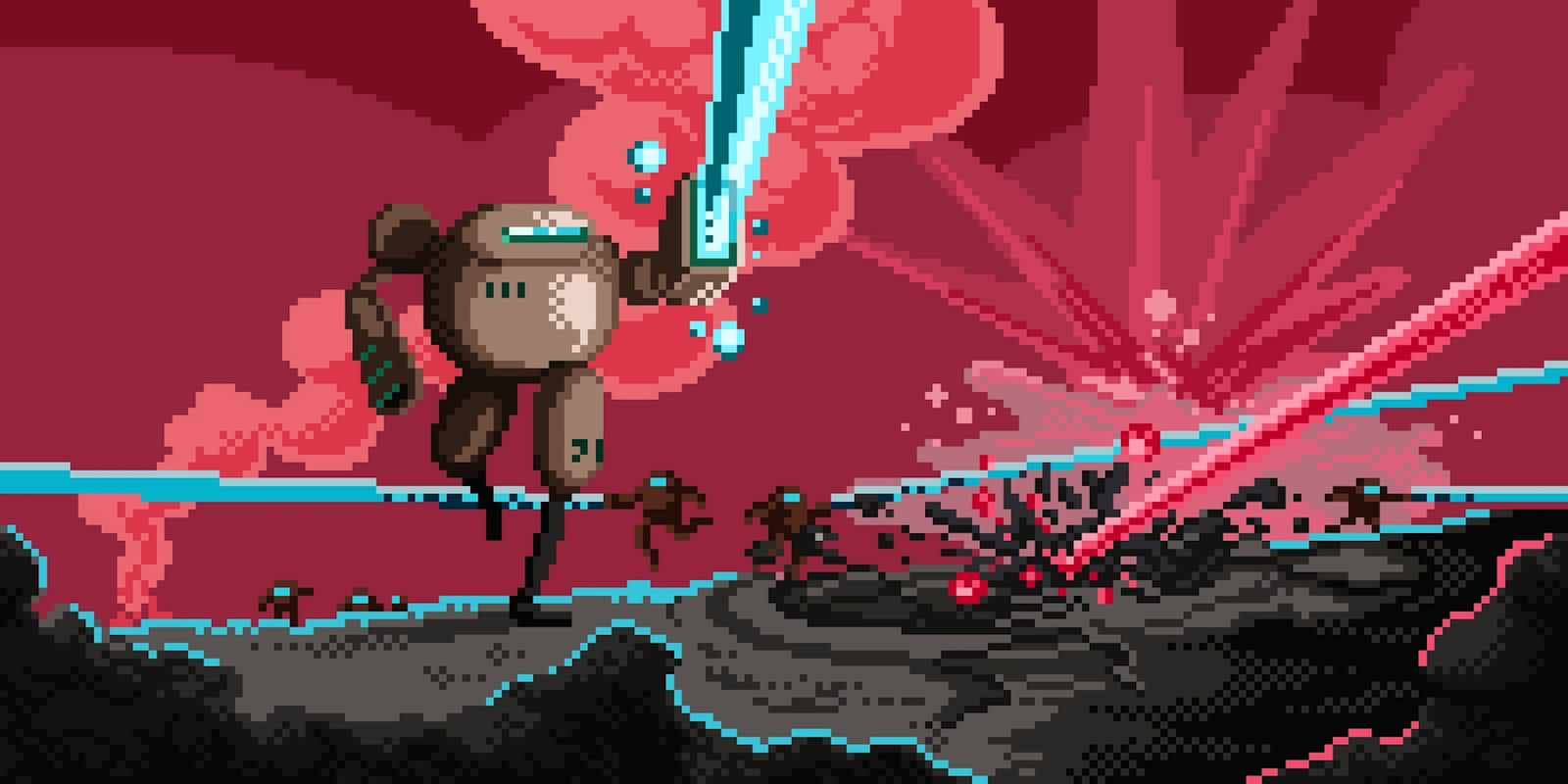

In a conceivable future, hackers might take a backstage seat and watch their artificial minions confront each other on the cyber battlefield.

Ben Dickson is a software engineer at Comelite IT Solutions. He writes about technology, business, and politics.