“Behaviour at these toilets is being recorded for analysis.”

At the CHI 2014 tech conference in Toronto, participants saw signs hanging on the washroom doors notifying toilet users that their “behaviour” was being analyzed. This project, called Quantified Toilets, provided a “real-time” feed of urine analysis results for people who used the toilets at the conference.

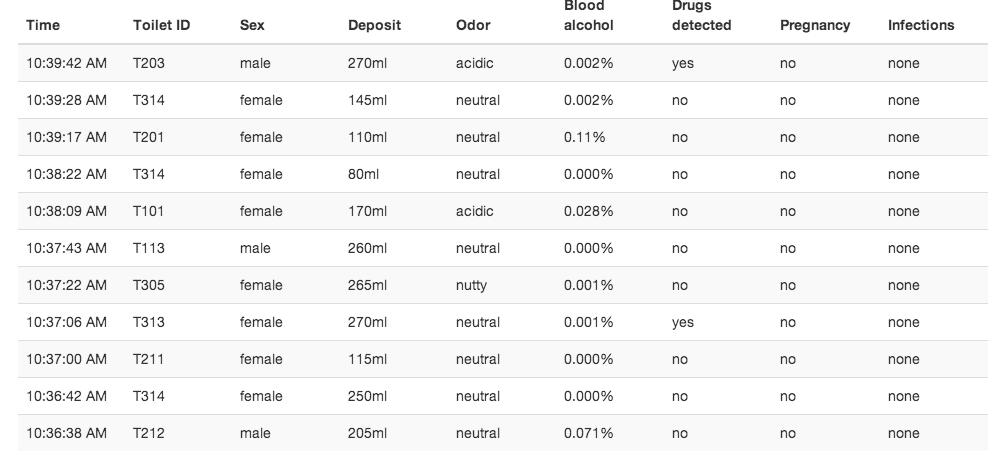

The results revealed that some participants had sexually transmitted diseases. They also revealed when participants were pregnant, or when they had drugs or alcohol in their system. One male participant is listed with a blood alcohol level of .0072 percent. He had gonorrhea.

People took notice of the analysis, and plenty tweeted about it; some thought it was clever, while others were disturbed by the privacy implications. There wasn’t out-and-out outrage that the conference bathrooms were being monitored in this way. That suggests a 2013 study, in which 70 percent of respondents said they’d be OK with a smart toilet sharing their personal data as long as it meant lower healthcare costs, wasn’t an anomaly.

The Quantified Toilets experiment wasn’t meant to show people that real-time health monitoring isn’t as scary and invasive as privacy advocates say. In fact, it was meant to provoke dialogue about privacy issues. While the technology is available for this kind of monitoring, the Quantified Toilets project didn’t actually collect and analyze urine samples. The feed it ran online was fictional. It was a hoax with a purpose, a thought experiment designed to make people think about surveillance.

The Atlantic pinpointed some of the more disturbing privacy implications the hoax presented. “If the government installs smart toilets in public venues, the option to opt out isn’t necessarily available,” Jennifer Golbeck writes. She points out that this could lead to employers scrutinizing employee urine to find out who had taken drugs, or which female employees were pregnant. Stadiums, conferences, and other large events could use the same monitoring to see when people had the highest blood alcohol levels, information that could be used to sell more alcohol or to crack down on rowdy patrons.

This kind of default bio-surveillance could reduce job promotions, hirings, arrests, admittances, and other decisions to what comes out of the body. While it could help identify alcoholics and detect diseases sooner, it would also require a willingness to be surveilled that runs counter to many notions of personal freedom and privacy.

Karen Tanenbaum, a researcher and game developer at UC Santa Cruz with a PhD in interactive arts and technology, organized the hackathon workshop where the Quantified Toilets idea started. She thought CHI 2014 was the perfect place to create this kind of experiment because the attendees are the people who could make projects like this a reality. “CHI is a conference for researchers on human-computer interaction, and as such we have the ability to influence technology design, deployment, and policy. We need to be thinking critically about the societal as well as personal implications of what we are doing,” she told the Daily Dot in an email.

David Nguyen, one of the six masterminds behind the project, explained that the project started at the conference itself; in a workshop during the first few days, a team decided to do a thought experiment over the rest of the conference. “We hope we’ve added to the conversation regarding surveillance, privacy, and data. And we hope the conversation continues,” he said.

H/T The Atlantic | Photo via Flickr/dirtyboxface (CC BY 2.0)