How balanced is the content you read online? How much do you respect opposing views? These questions are becoming more important as the news we consume online is increasingly personalized by algorithms.

In the past few years, there’s been increasing focus on the adverse effects of filter bubbles. Platforms such as Facebook, Twitter, and Google use AI algorithms to tailor their content to show us things that confirm our preferences. While this gives us a false sense of satisfaction and keeps us glued to the applications of these companies, it also amplifies our biases and makes us less tolerant of things that don’t confirm our views.

New York-based startup Nobias believes the first step toward countering the negative effects of content-curation algorithms is to educate users about their biases and help them maintain a balanced diet of news content. And ironically, it is using AI algorithms to address the problem.

An explosion of online content

“We’re seeing a lot of content being published every day, and there’s no way for consumers to be able to see or read all of it. That’s why online platforms and news providers use algorithms to distill it in some way,” says Tania Ahuja, founder and CEO of Nobias.

These algorithms fill users’ news feeds with content that they’re more likely to engage with. The more they click on the items they like, the more their content becomes personalized to their tastes.

“Ultimately you get wrapped in a filter bubble. For instance, in the case of political bias, if you’re always reading articles that are left-leaning, you will end up with a news feed that is primarily left-slanted, and publications with the other point of view will get buried in your news feed,” Ahuja says, pointing out that these algorithms are influencing people’s perceptions on really important topics like politics and business, creating a fractured society with hyper-polarized groups.

“This makes it easy for bad actors to manipulate people. This is really causing a problem in the society,” Ahuja says.

While acknowledging that in the past few years increased awareness and activism have forced platforms such as Google and Facebook to update their algorithms to address concerns about consumer privacy, fake news, echo chambers, manipulation, and bias, Ahuja believes we're nowhere near solving the problem in a fundamental way.

“The process is very slow, and also, to some extent, the business model of a lot of these platforms are predicated on free access to their platforms, and is financed by digital ads, which in turn is driven by engagement and sharing rather than the actual value of the news. I don’t think the tech companies that run these platforms should be deciding what we see,” she says.

The Nobias browser extension

Nobias was created as a free tool to help users take back control of their news feed, Ahuja explains. The point is not to curate content for users, but to warn them if the news they’re reading does not represent all viewpoints on a topic.

“We let them know if they’re only reading one side of a story. They might choose to do so—it’s their prerogative. But at least for those who would want to have access to both sides of information, then we give them this information right in their news feed so they can be selective of what they read and become aware of what other people are sharing and reading so they can develop their own unique point of view,” Ahuja says.

The tool, which Ahuja describes as a “Fitbit for news,” is a Chrome extension.

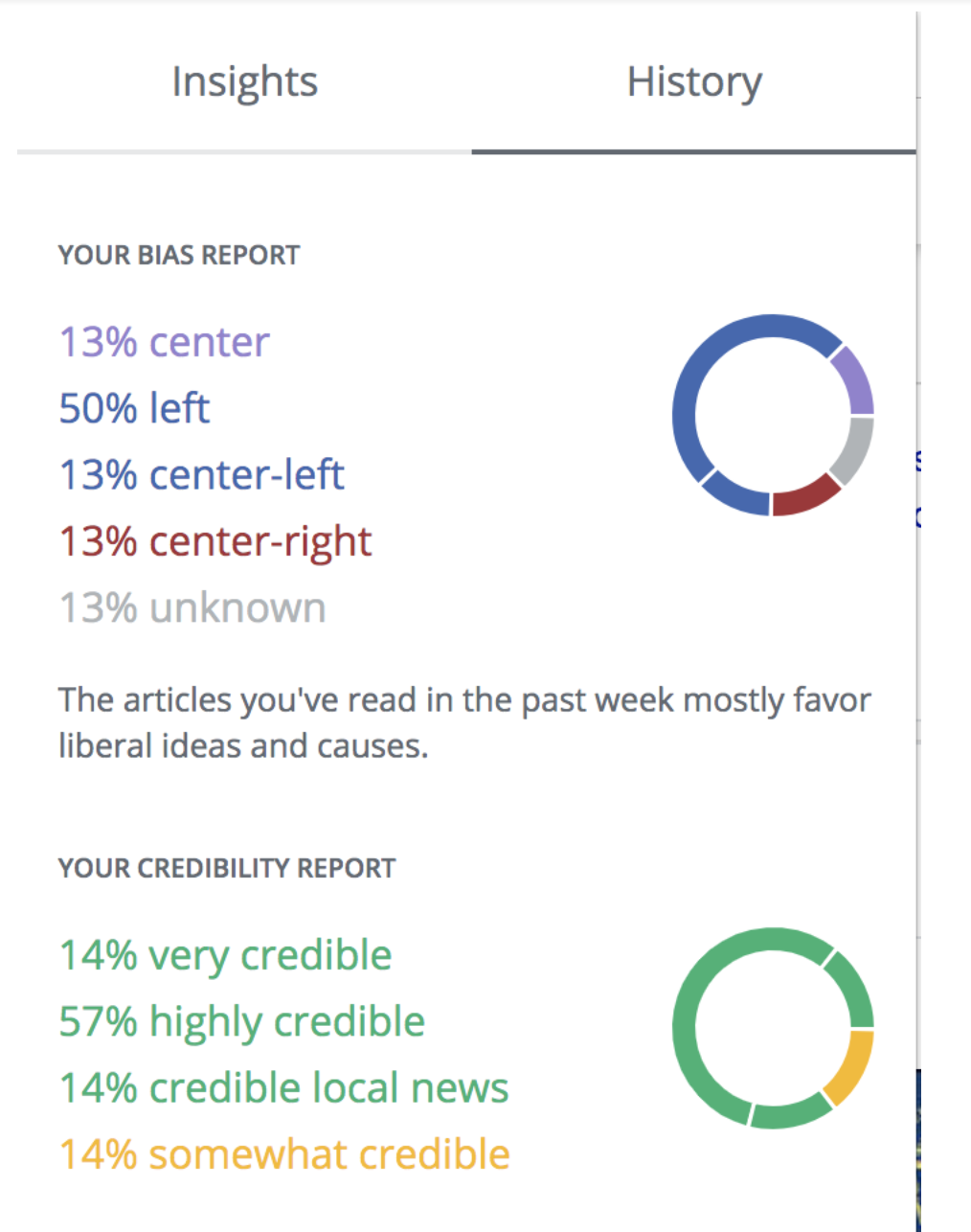

Once installed, Nobias decorates news pages with colored paw icons that show the political slant of the featured articles. The paws rate news items from left (blue) to right (red), with an unbiased center (purple) in the middle. An unknown (grey) paw marks articles Nobias can't classify. When you hover over the link to an article, Nobias gives you a quick summary of its political slant, the credibility of the news source, and the readability of the content.
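To make the color scheme concrete, here is a minimal sketch of how a numeric slant score might be mapped to a paw color. The article does not say how Nobias represents slant internally, so the score range (-1.0 for far left to +1.0 for far right), the cutoff values, and the `paw_color` function are all hypothetical.

```python
from typing import Optional

# Hypothetical cutoffs on a slant score from -1.0 (far left) to +1.0 (far right).
# These values are illustrative assumptions, not Nobias's actual thresholds.
LEFT_CUTOFF = -0.2
RIGHT_CUTOFF = 0.2


def paw_color(score: Optional[float]) -> str:
    """Map an article's slant score to a paw color; None means unclassified."""
    if score is None:
        return "grey"    # unknown: the article could not be classified
    if score <= LEFT_CUTOFF:
        return "blue"    # left-leaning
    if score >= RIGHT_CUTOFF:
        return "red"     # right-leaning
    return "purple"      # center


for s in (-0.7, 0.05, 0.4, None):
    print(s, paw_color(s))
```

A wide purple band makes the tool conservative about labeling an article partisan; narrowing it would flag more articles as left or right.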

The tool works on platforms that curate news, such as Google News and Facebook, and tracks popular news sites such as the New York Times, the Washington Post, and Fox News, with more outlets being gradually added to the list.

Nobias works at the article level. This means that aside from the general political alignment of a publication, the tool also analyzes the content of each article and gives an assessment for that specific article. “The articles themselves might have features that are left- or right-leaning. But a lot of articles are also center and you can get factual information from them. Also, some center articles might have both left and right features that cancel each other out,” Ahuja says.
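Ahuja's point that left- and right-leaning features can cancel each other out is easy to see with a simple keyword-weight score. The sketch below is an illustration under stated assumptions, not Nobias's scoring method: the phrase list, the weights (negative for left, positive for right), and the `article_slant` helper are all hypothetical, and matching is a naive substring count.

```python
from typing import Dict, Optional

# Hypothetical phrase weights: negative leans left, positive leans right.
# Phrases and values are chosen only for illustration.
WEIGHTS: Dict[str, float] = {
    "estate tax": -1.0,
    "death tax": 1.0,
}


def article_slant(text: str, weights: Dict[str, float] = WEIGHTS) -> Optional[float]:
    """Average weight of the phrases found in the text; None if nothing matched."""
    lowered = text.lower()
    total, hits = 0.0, 0
    for phrase, weight in weights.items():
        n = lowered.count(phrase)  # naive, non-overlapping substring count
        total += weight * n
        hits += n
    return total / hits if hits else None


# One left-coded and one right-coded phrase cancel to a center score of 0.0.
print(article_slant("Critics call the estate tax a death tax."))
```

Because the score is computed per article, two pieces from the same publication can land in different color bands.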

Nobias also lets you track your diet of online content by giving you a summary of the general political bias of the articles you read, as well as the credibility of the sources you get your news from.
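A reading-diet summary can be as simple as the share of articles read in each slant category. This sketch covers only the slant half of the summary (not source credibility), and it assumes a reading history is just a list of paw colors; `reading_diet` is a hypothetical helper, not part of the extension.

```python
from collections import Counter
from typing import Dict, Iterable

CATEGORIES = ("blue", "purple", "red", "grey")  # left, center, right, unknown


def reading_diet(paw_colors: Iterable[str]) -> Dict[str, float]:
    """Fraction of articles read in each slant category (hypothetical helper)."""
    counts = Counter(paw_colors)
    total = sum(counts.values())
    if total == 0:
        return {}  # nothing read yet
    return {color: counts[color] / total for color in CATEGORIES}


history = ["blue", "blue", "blue", "purple", "red"]
print(reading_diet(history))
```

A history dominated by one color is exactly the one-sided pattern the tool is meant to surface.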

Using AI to determine slant and bias

Determining the political slant of an article is not an easy task. To overcome the challenge, the team at Nobias employed a methodology published by Matthew Gentzkow and Jesse Shapiro in Econometrica, a top economics journal, in 2010 and 2016, which uses the congressional record of speeches to set baselines for political alignment.

“In Congress you know if somebody is Democrat or Republican. It’s a lot more objective. We assume Democrats are left-leaning and Republicans are right-leaning,” Ahuja says.
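To make the congressional baseline concrete: one way to find phrases that separate the parties is to score each phrase by how unevenly Democrats and Republicans use it. The 2010 Gentzkow and Shapiro paper ranks phrases with a Pearson χ² statistic of roughly the following form; this is a simplified rendering, so consult the paper for the exact definition. Here $f_{pd}$ and $f_{pr}$ count uses of phrase $p$ by Democrats and Republicans, and $f_{\sim pd}$, $f_{\sim pr}$ count uses of all other phrases by each party:

$$
\chi^2_p = \frac{\left(f_{pr}\, f_{\sim pd} - f_{pd}\, f_{\sim pr}\right)^2}{\left(f_{pr} + f_{pd}\right)\left(f_{pr} + f_{\sim pr}\right)\left(f_{pd} + f_{\sim pd}\right)\left(f_{\sim pr} + f_{\sim pd}\right)}
$$

The textbook 2×2 χ² also multiplies by the total phrase count, but that factor is identical for every phrase and so does not change the ranking. The article does not say whether Nobias uses this exact statistic.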

The company used the transcripts of speeches to create an index of keywords that can be considered representative of left and right views. They then used the gathered data to train a machine-learning algorithm that can determine the slant of news articles. Machine-learning algorithms are AI programs that analyze large sets of data and find common patterns and correlations between similar samples. They then use these insights to classify new information.
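The keyword-index step can be sketched by applying the χ² phrase score from the Gentzkow and Shapiro approach to per-party phrase counts and keeping the most partisan phrases. This is a simplified illustration, not Nobias's pipeline: it assumes speeches have already been split into phrases, and the sample phrase lists, `chi2_scores`, and `keyword_index` are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def chi2_scores(dem_phrases: List[str], rep_phrases: List[str]) -> Dict[str, float]:
    """Pearson chi-squared score per phrase (total count N omitted; it doesn't change the ranking)."""
    dem, rep = Counter(dem_phrases), Counter(rep_phrases)
    dem_total, rep_total = sum(dem.values()), sum(rep.values())
    scores: Dict[str, float] = {}
    for p in set(dem) | set(rep):
        f_pd, f_pr = dem[p], rep[p]
        f_npd, f_npr = dem_total - f_pd, rep_total - f_pr  # uses of all other phrases
        num = (f_pr * f_npd - f_pd * f_npr) ** 2
        den = (f_pr + f_pd) * (f_pr + f_npr) * (f_pd + f_npd) * (f_npr + f_npd)
        scores[p] = num / den if den else 0.0
    return scores


def keyword_index(dem_phrases: List[str], rep_phrases: List[str],
                  top_k: int = 2) -> List[Tuple[str, str]]:
    """Return the top_k most partisan phrases, each tagged 'left' or 'right'."""
    scores = chi2_scores(dem_phrases, rep_phrases)
    dem, rep = Counter(dem_phrases), Counter(rep_phrases)
    dem_total, rep_total = max(sum(dem.values()), 1), max(sum(rep.values()), 1)
    # Highest score first; ties broken alphabetically so the output is deterministic.
    ranked = sorted(scores, key=lambda p: (-scores[p], p))[:top_k]
    # Per the article's assumption: Democrats lean left, Republicans lean right.
    return [(p, "right" if rep[p] / rep_total > dem[p] / dem_total else "left")
            for p in ranked]


# Hypothetical phrase counts; phrases both parties use equally score 0.
dem = ["estate tax"] * 4 + ["health care"] * 3 + ["federal budget"] * 3
rep = ["death tax"] * 4 + ["health care"] * 3 + ["federal budget"] * 3
print(keyword_index(dem, rep))
```

Phrases used at the same rate by both parties score zero and drop out of the index, which is the point: only language that distinguishes the parties carries a slant signal.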

In an oversimplified example, if you train a machine-learning algorithm on a large set of speech records, each labeled with its corresponding political standing (left or right), it will find the traits that the samples in each class have in common. Afterward, when you give the algorithm the text of a new article, it will determine the article's political slant based on its similarity to the samples it has previously seen.
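The train-then-classify idea above can be illustrated with a multinomial naive Bayes classifier over word counts. The article does not say which algorithm Nobias uses; naive Bayes is swapped in here only as a simple, well-known stand-in, and the training "speeches" and the `NaiveBayesSlant` class are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Optional, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSlant:
    """Multinomial naive Bayes over word counts, with add-one (Laplace) smoothing."""

    def fit(self, samples: List[Tuple[str, str]]) -> "NaiveBayesSlant":
        self.word_counts: Dict[str, Counter] = {}
        self.doc_counts: Counter = Counter()
        for text, label in samples:
            self.doc_counts[label] += 1
            self.word_counts.setdefault(label, Counter()).update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab |= set(counts)
        return self

    def predict(self, text: str) -> Optional[str]:
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            # Log prior plus the sum of smoothed log likelihoods of each known word.
            score = math.log(self.doc_counts[label] / total_docs)
            denom = sum(counts.values()) + len(self.vocab)
            for word in tokenize(text):
                if word in self.vocab:  # words never seen in training are ignored
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labeled training speeches.
speeches = [
    ("we must protect the estate tax and working families", "left"),
    ("repeal the death tax and cut regulations", "right"),
    ("raise the estate tax on the wealthiest estates", "left"),
    ("the death tax punishes family farms", "right"),
]
model = NaiveBayesSlant().fit(speeches)
print(model.predict("congress debates the death tax again"))
```

A production system would train on far more text and richer features (for example, multi-word phrases), but the principle is the same: the label whose training samples best match the new article's words wins.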

According to Ahuja, Nobias’ algorithm can determine slant with a 5-10% error rate. If users feel an article has been wrongly classified, they can submit their opinion. The developer team will use the feedback to retune their algorithm.
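The article does not explain how the 5-10% error rate is measured. A minimal sketch, assuming it is the share of articles in a labeled sample whose predicted slant differs from a reference label, and treating user feedback as extra labeled examples for retraining; both are assumptions, and `error_rate` and `apply_feedback` are hypothetical helpers.

```python
from typing import List, Tuple


def error_rate(predicted: List[str], actual: List[str]) -> float:
    """Fraction of articles whose predicted slant disagrees with the reference label."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    if not actual:
        raise ValueError("need at least one labeled article")
    wrong = sum(p != a for p, a in zip(predicted, actual))
    return wrong / len(actual)


def apply_feedback(training: List[Tuple[str, str]],
                   feedback: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Hypothetical retuning step: fold reviewed user corrections into the training set."""
    return training + feedback


preds = ["left", "center", "right", "right", "left", "center", "left", "right", "center", "left"]
truth = ["left", "center", "right", "right", "left", "center", "left", "right", "center", "right"]
print(error_rate(preds, truth))  # one mismatch out of ten
```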

The civic duty to read balanced news

Nobias is a good example of putting AI to good use to counter some of the negative effects that algorithms themselves have created. However, it’s not a perfect solution.

“We want to make it easier for people to discover their own biases. That’s why we view this more as a productivity tool. You know that you need to have a balanced diet. This just helps you to get there without much work,” Ahuja says.

At the end of the day, a tool like Nobias can help you identify your biases, but overcoming them is your own responsibility. You'll have to go out of your way to investigate the news on your own, study the different angles of a story, and learn to read and respect views that oppose yours. That is something no amount of automation can do in your stead.
