What happens to the value of art when a computer can apply the stylistic talent of Rembrandt to selfies?

It’s a question we’ll need to start asking ourselves as artificial intelligence begins to understand and interpret data in ways that could transform how humans create art.

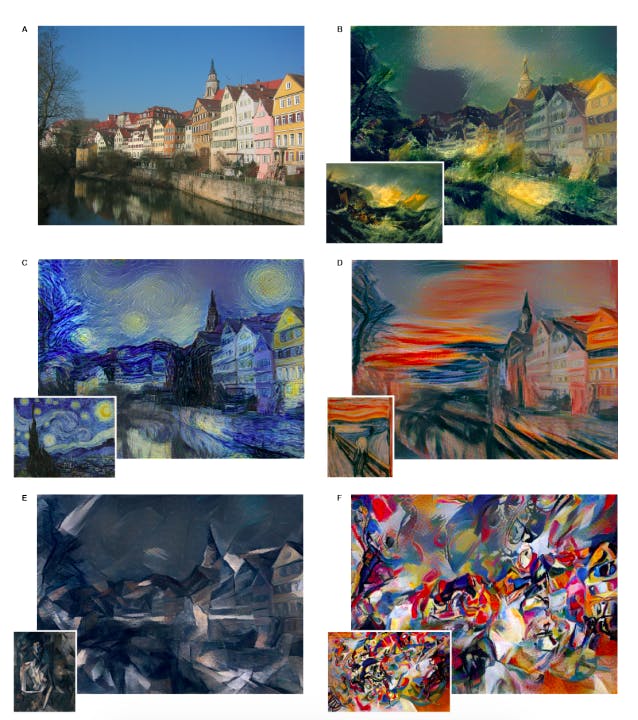

Researchers at the University of Tübingen in Germany built an artificial system using deep neural networks that separates an image’s content from its style. It’s based on an image recognition model trained to recognize and classify a massive database of images, similar to Google’s DeepDream artificial intelligence program, which lets people experience the weird ways in which computers see the world.

When a photo is fed through the network, it passes through multiple layers, each analyzing different features of the photo, from colors and simple shapes to more complex objects like “dog” or “cat.” Previously, these networks could only capture the style and content of an object or a photo together, as one piece of data.

Now researchers have made it possible for these artificial systems to parse style and content separately by building a style representation on top of the existing neural network. Within each layer, it computes correlations between the different features the network detects, a summary that keeps textures and colors but discards information about the content, such as where objects sit in the scene. This means the researchers can take a photo of a street and turn it into an image that looks as if Van Gogh painted it.
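For readers curious about what “computing correlations between features” looks like, here is a minimal sketch, assuming the style representation works as described in the Tübingen team’s paper (“A Neural Algorithm of Artistic Style”): for each layer, a so-called Gram matrix records how strongly every pair of features fires together, summed over all positions in the image. The function names and the toy activation values below are hypothetical; a real system would take its feature maps from a trained image recognition network rather than from hand-typed numbers.

```python
from typing import List

# Hypothetical layout: one list of activations per channel (feature),
# with the image's spatial positions flattened into a single list.
FeatureMap = List[List[float]]


def gram_matrix(features: FeatureMap) -> List[List[float]]:
    """Correlation between every pair of channels, summed over positions.

    G[i][j] = sum_k F[i][k] * F[j][k]. Summing over positions k throws away
    *where* a feature fired and keeps only *which* features fire together.
    """
    n = len(features)
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
        for i in range(n)
    ]


def style_loss(generated: FeatureMap, style: FeatureMap) -> float:
    """Squared difference between two Gram matrices for one layer,
    scaled by 1 / (4 * N^2 * M^2), where N is the number of channels
    and M the number of positions."""
    n, m = len(generated), len(generated[0])
    g, a = gram_matrix(generated), gram_matrix(style)
    total = sum((g[i][j] - a[i][j]) ** 2 for i in range(n) for j in range(n))
    return total / (4 * n**2 * m**2)


if __name__ == "__main__":
    # Toy layer: 2 channels observed at 4 positions (made-up values).
    photo = [[1.0, 0.0, 2.0, 0.0],
             [0.0, 1.0, 1.0, 3.0]]
    # Same activations with the positions reversed in every channel:
    # the spatial "layout" changes, but the style summary does not.
    rearranged = [list(reversed(channel)) for channel in photo]
    print(gram_matrix(photo))        # [[5.0, 2.0], [2.0, 11.0]]
    print(gram_matrix(rearranged))   # [[5.0, 2.0], [2.0, 11.0]]
    print(style_loss(photo, rearranged))  # 0.0
```

The toy run shows why this summary separates style from content: rearranging where things appear leaves the Gram matrix untouched, so a generated image can match a painting’s textures and colors while keeping the layout of the original photo.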

“If my intuition is correct, it’s going to be much bigger than DeepDream,” Samim Winiger, an artificial intelligence researcher who wasn’t affiliated with the project, said in an interview.

As Winiger explains, this technology could be applied to things beyond photos and paintings. Theoretically, architects could use similar software to design buildings. People could train a network on a million or more 3D models of buildings and then ask it to produce a building based on stylistic information: you could tell the program to create a house in Greco-Roman style. It could also open new creative avenues in music, fashion, and other forms of art or engineering.

When the researchers release the software for anyone to use—like Google did for DeepDream—it’s possible someone could create 10,000 images in the style of Edvard Munch’s The Scream or Paul Cézanne’s fruit bowls with just a few clicks. That raises entirely new questions surrounding computational aesthetics.

“When it comes to applications in the designs, it’s a bit of a complex problem, because how do you judge good outputs from bad ones?” Winiger said. “These generative systems, they generate unlimited content. Once you’ve trained one of these things, mathematically provable unlimited things come out the other end.”

With an endless supply of content, and styles stripped from the great artists of past ages, another question arises: What’s important for us to keep, and what should we get rid of? And who assigns value to the art and styles that begin to saturate the Internet?

It would be fairly easy to enter the market, assuming you have the technical skills to pull it off. But whether your outputs would be worth anything, or be considered “beautiful,” remains an open question.

“If you have a machine that generates beautiful outcomes and it’s really more powerful than any human artist or fashion designer, and there’s a central figure in the game, like a network operator, the Google or Facebook, the discussion becomes really interesting really quickly,” Winiger said.

Many people are skeptical about technologies that can reproduce objects quickly and accurately. Artists, obviously, wouldn’t be pleased with this type of machine learning, but other industries could also suffer job losses. Winiger uses the example of a Web developer: You could train a neural network on 50,000 webpages and then have it generate more pages in that particular style. He adds the caveat, though, that the generated content might not actually make sense yet.

Additionally, there’s a question of who should be in charge of establishing a set of ethics regarding machine learning and artificial intelligence, and how to deal with the potential backlash these technologies create.

Some of the most prominent scientists and engineers of our time, including Elon Musk and Stephen Hawking, have cautioned against weaponizing artificial intelligence and encouraged developers to proceed safely and ethically. Unforeseen circumstances could make people even more wary.

In July, Google came under fire after its Google Photos app, which uses facial recognition, tagged black people as “gorillas.” The company disabled the specific word tag, but it underscored the fact that machine learning algorithms still have a lot to learn.

As artificial intelligence advances, it creates exciting opportunities for people to develop new tools and applications, especially if research and software are kept in open communities where academics and engineers can access them. However, pressure for patents and potential payouts can force new research in-house, behind walled gardens or closed doors.

Earlier this year, Uber poached Carnegie Mellon University’s entire robotics lab—staffed with brilliant PhDs and engineers—under the guise of a “partnership.” The company took what at first was an academic research partnership and turned it into a corporate talent grab, bringing the engineers, and the tech, inside the company.

While Uber works on self-driving cars, neural networks are being used in other industries to predict market values or determine insurance policies. And unfortunately, Winiger said, there’s not enough widespread understanding of how machine learning might already be affecting our everyday lives, or our jobs in the future.

“We have to talk about it right now, especially because it’s such a complicated topic and there’s next to zero understanding in the broad cultural sense,” he said. “We need to get beyond this silly notion of Terminators and start to talk about the fine-grained problems that are unfolding, and are more interesting, frankly.”

Photo via Sharon Mollerus/Flickr (CC BY 2.0)