With smarter AI-powered tools and better surfacing of Reddit responses in search, Microsoft is hoping to give internet citizens more reason to turn to its search engine Bing for their web surfing needs. But one of its updates, created in the name of transparency, could end up proving more problematic than informative.

For starters, you can now ask Bing conversational queries: full-sentence questions such as “What is net neutrality?” And taking a cue from Google, results will often be accompanied by easy-to-read cards. These outline the basic answer to your question, along with photos, related videos, and more.

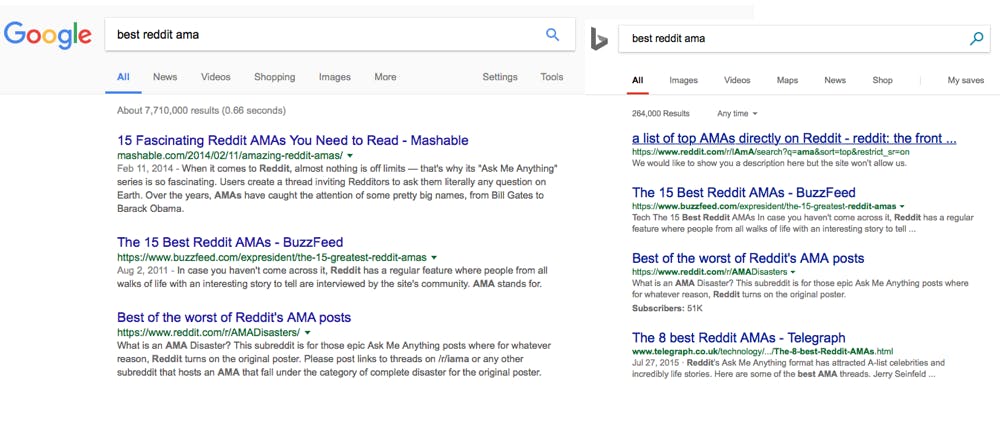

Bing also partnered with Reddit to better surface interesting information from the site. Now, for example, when you search for a question answered by a Reddit AMA (“ask me anything”) thread, the social news site’s form of a Q&A, that Reddit link will show up prominently in results.

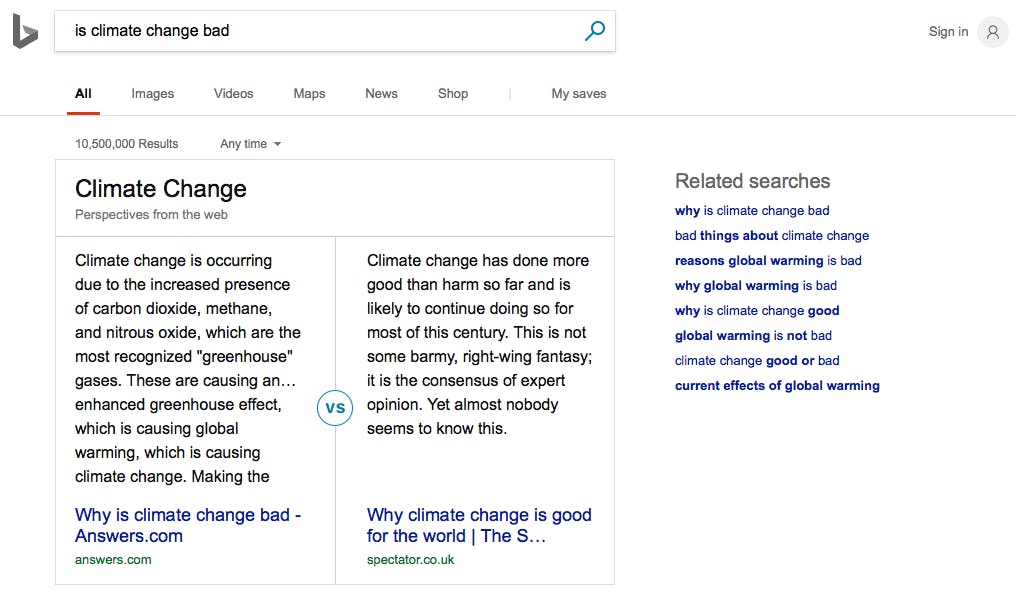

However, the most intriguing development in Microsoft's AI integration with Bing is the delivery of search results with different viewpoints. If you ask the search engine something like “Is cholesterol bad?” instead of a traditional list of popular websites targeting that question, Bing will serve up responses that reflect differing perspectives. The results are shown side by side so you can easily read and compare them.

In one respect, offering these dueling search results is good for presenting the full picture for an opinion-based query. In the cholesterol scenario above, for example, it offers information on the two main types of cholesterol, LDL and HDL. Another health-focused question, “Is ginger good for you?” presents the purported benefits of eating ginger root alongside the negative side effects of ingesting too much.

However, this feature could also lend credibility to conspiracy theories and non-factual information. A search for “is climate change bad,” for example, offered a prominent result claiming that climate change would have net positive results for the next century or so. This result is shorter and easier to read than the more scientifically worded explanation for why climate change is not good for our planet. It’s not hard to imagine the more technical perspective being disregarded in favor of the one that’s easier to read.

Bing’s search update could unintentionally create a showdown between facts and alternative facts, with very little context given for the sources provided.

Microsoft has good intentions with this feature.

“We want to provide the best results from the overall web. We want to be able to find the answers and the results that are the most comprehensive, the most relevant and the most trustworthy,” Jordi Ribas, Microsoft’s corporate vice president of AI products, said at a Microsoft AI event in San Francisco Wednesday introducing these and other AI-related updates to Microsoft’s products.

What would make this feature far more powerful—and less susceptible to abuse or promoting conspiracy theories—is if Microsoft only sourced perspectives from peer-reviewed journals or other fact-checked sources. Many of its responses come from Answers.com, with counterpoints from editorial websites. While this may indeed fulfill Ribas’ mission to provide comprehensive results, it may not check off the boxes for being relevant or trustworthy.

Right now, this dual perspective option in results seems limited. Many queries (including “Is feminism bad?” and “Is democracy good?”) still show a “normal” results listing. More complicated questions, such as “Is turkey better than chicken?” also show results the normal way. Questions about more newsworthy topics, such as net neutrality, instead default to cards.

It’s clear that tech companies want to make AI work—and work for them. Using AI to surface news and opinions still doesn’t seem quite ready for prime time. However, at least Microsoft is making a concerted effort to give readers the full picture on topics. Competitors like Facebook and Google are still struggling with that concept.

H/T TechCrunch