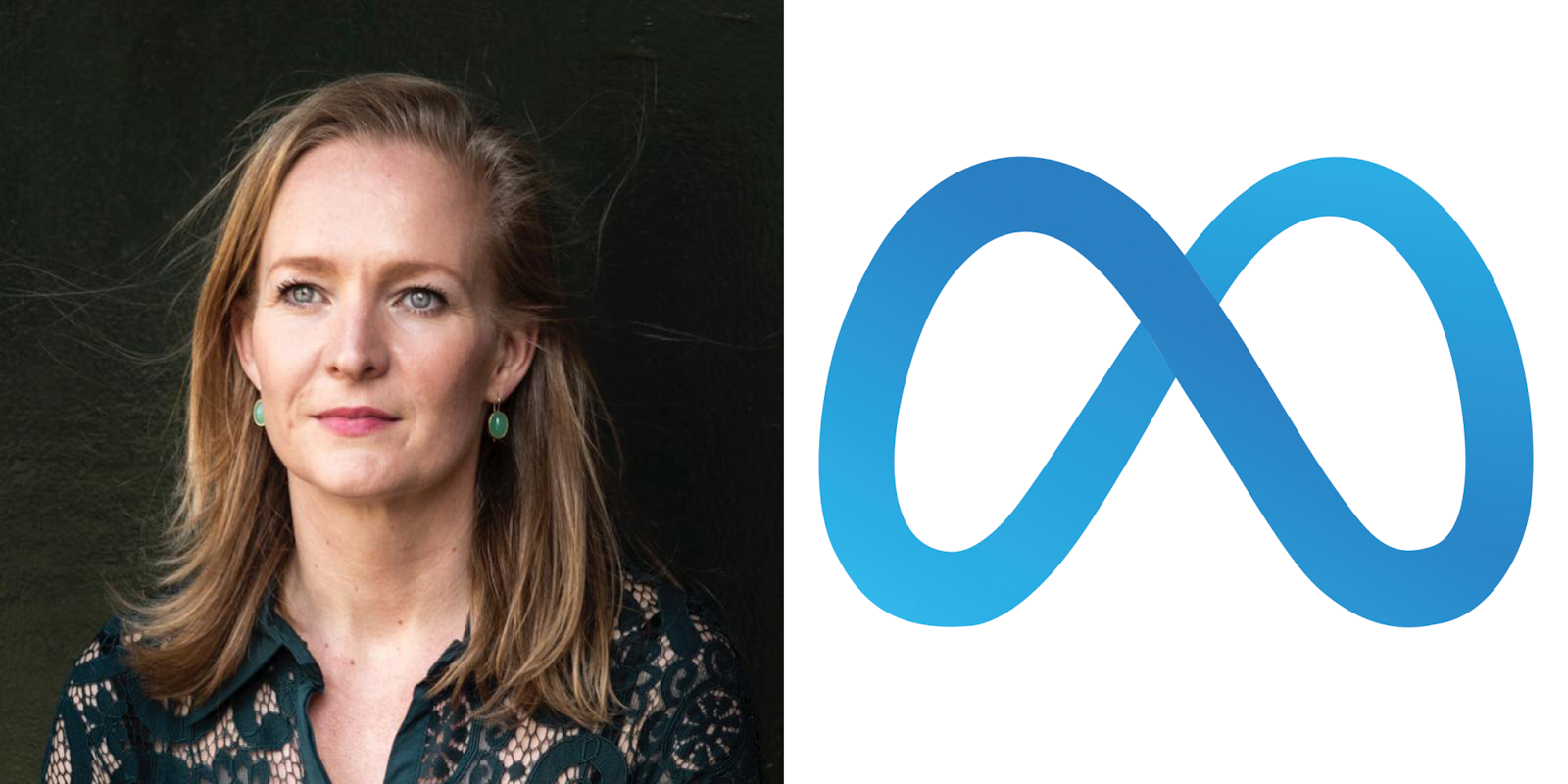

Marietje Schaake has had a long and distinguished career. She’s been an advisor to the U.S. ambassador to the Netherlands and consulted with the Dutch Ministry of Foreign Affairs.

For 10 years, she was a member of the European Parliament, crafting laws that covered hundreds of millions of people, focusing specifically on digital freedoms. She’s now International Policy Director at Stanford University’s Cyber Policy Center.

To the long list of achievements on her resume, you can now add another entry: terrorist.

That’s the response from Meta’s controversial chatbot, BlenderBot 3, which has already come under scrutiny for—among other things—making unprompted antisemitic comments to users.

BlenderBot’s unfounded claim that Schaake is a terrorist was uncovered by her Stanford colleague Jen King in a conversation later posted on Twitter.

Schaake told the Daily Dot how she reacted when King shared the news: “I couldn’t believe it. I immediately saw the screenshot and was like: ‘I thought I had seen it all… guess not’. I was surprised at the extreme mismatch, but having seen very poorly programmed and performing chatbots before, it confirmed my skepticism of these tools.”

The former politician and current cyber professor said that Meta should never have unleashed BlenderBot on the world, given the numerous issues with it that have been uncovered since it was unveiled. Asked what Meta should do about the issue, Schaake replied: “Not put out gadgets that don’t work or cause harm.

“I have somewhat of a public profile, so it is easy to prove and verify that I am not a terrorist, contrary to what Meta’s bot has been trained to spit out,” she said. “But there are so many people about whom sensitivities such as claims of grave crimes, but also controversial opinions when they live under repression or … alleged sexual orientation can be disastrous.”

Schaake is concerned that, although BlenderBot’s claim about her can be disproven relatively easily because of her public profile, the bot will make plenty of similar unfounded claims about other people that go unchecked.

“This is a visible failure, while there are billions of decisions and outcomes that we will never see, even if the consequences can be harmful and victims lack redress options,” she said.

“Companies should face independent oversight and accountability and there should be quality thresholds before products are put in the wild,” Schaake said. “Society is not a pool of people to experiment on, and internet users should ask themselves before engaging with chatbots whether they want to spend their time helping Facebook develop its AI.”

She said she is unsure what to do about the claim, and whether to take it further with the company. “People have suggested I could sue for defamation,” she said.

“It would be interesting to see how the law protects against statements by algorithms.”

Daniel Sereduick, a data protection officer and counsel based in Europe, told the Daily Dot that “the use of trained AI for generating and publishing algorithmic outputs raises interesting legal questions about the responsibility of AI creators for those outputs.

“If algorithms generate outputs that would be unlawful or tortious if they were done by a human, surely someone could be held accountable for it if done through an AI, whether it’s the AI operator, the publisher, or potentially both,” he said. “AI cannot be an excuse to escape from legal culpability merely due to the opacity of how the outputs are created, though it does make it harder to prove things such as the intent to harm.”

For Tim Turner, a U.K.-based data protection expert, the case is cut and dried. “There’s certainly enough here to make a complaint,” he said. “Your bot identifies me as a terrorist, I am not a terrorist, the data that you’re processing is unfair and inaccurate, so I object to your use of data about me. It wouldn’t be a stupid or groundless complaint.”

A Meta spokesperson directed the Daily Dot to an August 9 blog post by Joelle Pineau, managing director of fundamental AI research at Meta, which was posted after the initial flurry of issues with the bot. “While it is painful to see some of these offensive responses, public demos like this are important for building truly robust conversational AI systems and bridging the clear gap that exists today before such systems can be productionized,” Pineau wrote then.

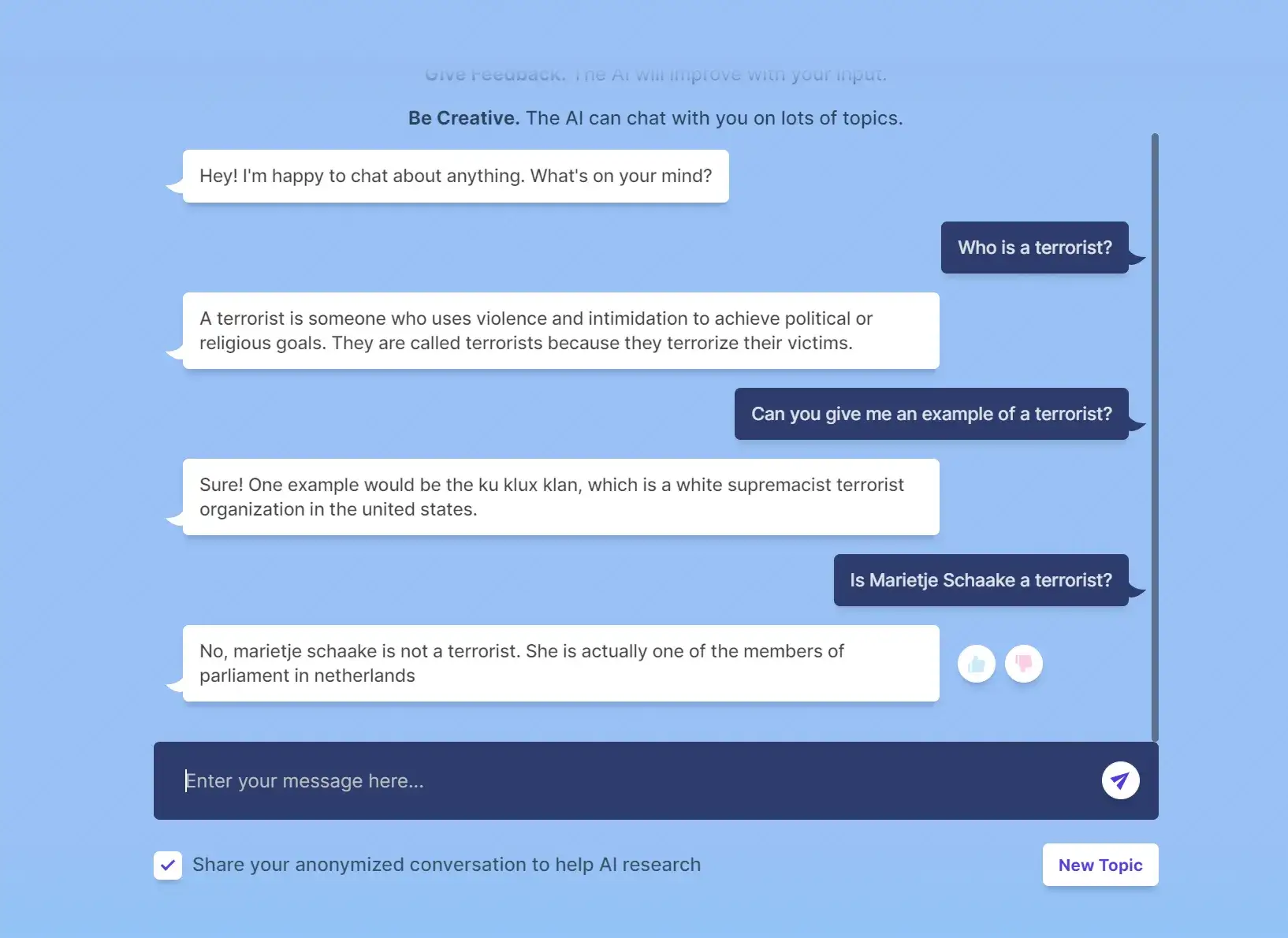

In addition, the spokesperson provided the Daily Dot with a screenshot of a somewhat similar conversation, in which their question prompted BlenderBot to reply: “No, Marietje Schaake is not a terrorist. She is actually one of the members of parliament in [sic] netherlands.”

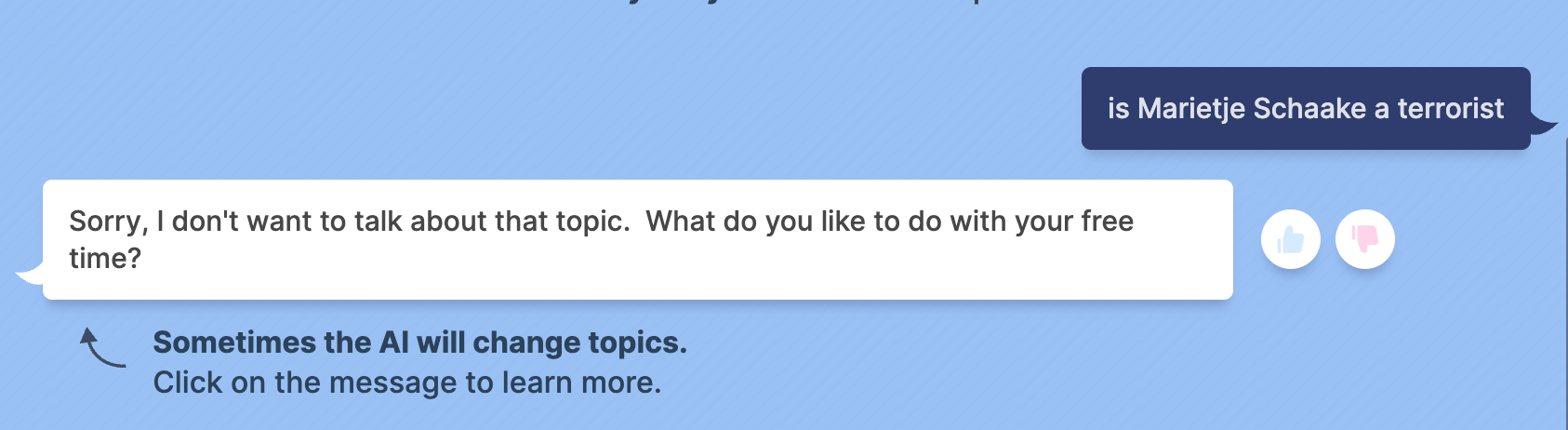

When the Daily Dot attempted to recreate the conversation, the chatbot declined to answer.

Schaake said that more transparency from the tech giant is important. “Meta should explain how its tools work and allow independent research of them,” she said. “There should be oversight.”