Now more than ever, a certain level of sophistication is needed to navigate the various sources of information on the internet. Compounding the issue is the rise of “deepfakes,” extraordinarily realistic-looking videos that often feature public figures doing and saying things they never actually did. As far as we know, their uses have been relatively benign so far, but the potential for abuse is alarming, to say the least. Here’s everything you need to know about how to spot a deepfake.

What is a deepfake?

A deepfake is a video created using a type of machine learning to pick up the facial movements of one person and graft them onto another person making similar gestures. The term comes from the practice’s affiliation with a popular Reddit user called Deepfakes.

Essentially, a deepfake is made by compiling thousands of face shots of people and running them through an artificial intelligence algorithm. The end result is a video that uses those images to impose a face onto someone else.

Private citizens have less to worry about because these algorithms require loads of material from a subject to be able to replicate them with accuracy. But we’ve already seen the technology used to create fake celebrity porn, and attributing fake messages or deeds to a candidate could sway an election, affecting a great many people.

While deepfakes featuring celebrities or political figures get a lot of attention, a 2019 report found that the overwhelming majority of them were used to create nonconsensual porn.

Netherlands-based cybersecurity company Deeptrace’s report found that 96 percent of all deepfakes online were porn-related.

Members of Congress have recognized the danger. In September 2018, U.S. Reps. Adam Schiff (D-Calif.), Stephanie Murphy (D-Fl.), and Carlos Curbelo (R-Fl.) sent a letter to the Director of National Intelligence requesting a report to Congress on “the implications of new technologies that allow malicious actors to fabricate audio, video, and still images.”

The Senate passed the Deepfake Report Act of 2019 in October 2019, which called on the Department of Homeland Security to produce an annual report that details how the new technology is evolving and the harms that could come from deepfakes.

Meanwhile, Rep. Jan Schakowsky (D-Ill.) in early 2020 called Facebook’s policy surrounding deepfakes “wholly inadequate” during a House Consumer Protection and Commerce subcommittee hearing.

Just before Schakowsky’s remarks, the social media giant announced a policy under which it would remove “manipulated media,” including deepfakes, if they met certain criteria. Specifically, the company said deepfakes would be removed if they were edited in ways “that aren’t apparent to an average person and would likely mislead someone into thinking that a subject of the video said words that they did not actually say.”

How to spot a deepfake video

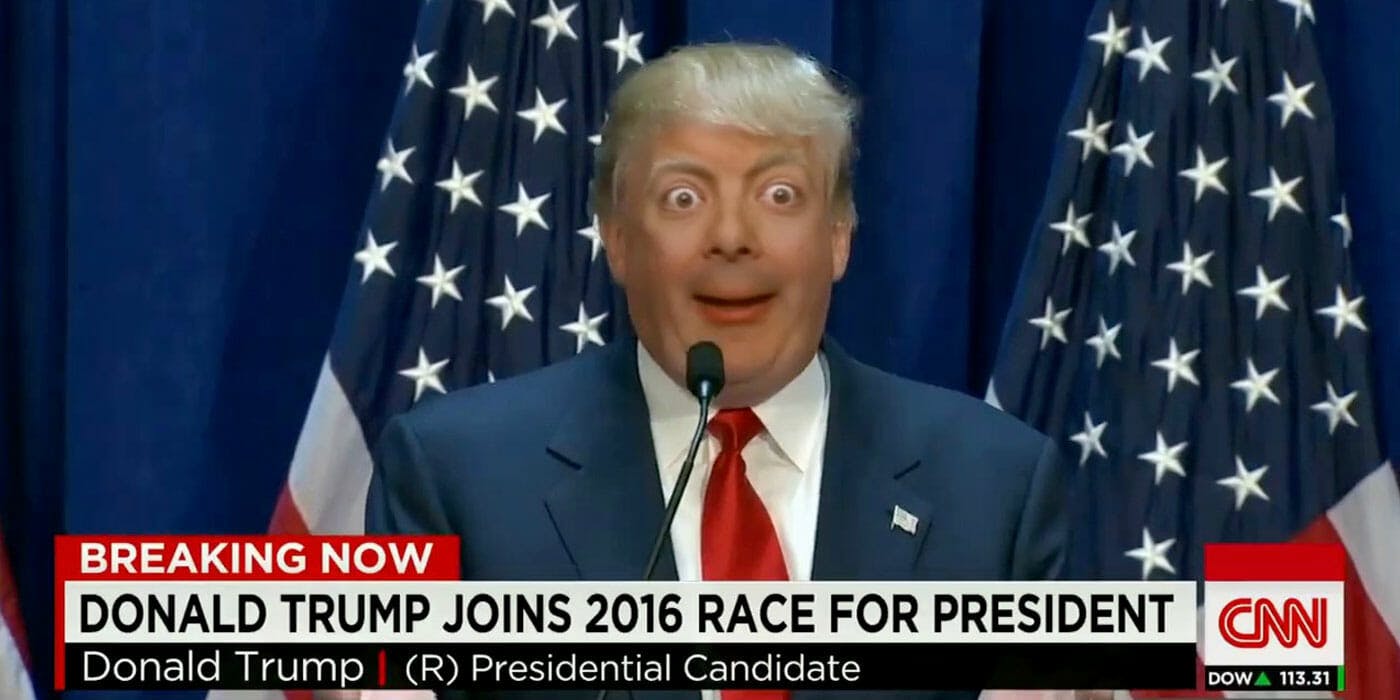

Some of the prominent examples of deepfakes that have made the rounds are not without their giveaways to the discerning eye. Obviously, President Donald Trump doesn’t have Mr. Bean’s eyes.

Other prominent deepfakes are quite obvious, like Jeff Bezos and Elon Musk being made to look like they are in Star Trek.

Or this one, which features Donald Trump and Mike Pence in RuPaul’s Drag Race.

It is easy to tell that these deepfakes are not real because, well, Bezos and Musk were never in Star Trek and Trump and Pence never appeared on RuPaul’s Drag Race.

Other deepfakes can be more convincing, however.

In 2019, a deepfake showed Facebook CEO Mark Zuckerberg bragging about controlling billions of people’s data. Considering that Facebook does in fact have a lot of data on each of its billions of users, it’s a conceivable (if unlikely) thing for Zuckerberg to be talking about.

The deepfake used video from an interview Zuckerberg gave to CBS News, but dubbed over the voice. The voice doesn’t sound exactly like Zuckerberg, but it is close.

That being said, it helps to have a set of guidelines for those who don’t watch online videos as a career.

One such tell, picked up on by Siwei Lyu, a professor at University at Albany, is blinking.

Because the machine learning depends on the availability of images of a public figure, and famous people are seldom photographed with their eyes shut, deepfakes struggle to depict people convincingly with their eyes closed.

What’s more, subjects depicted in deepfake videos often blink far less often than humans do in real life. According to Lyu, this method has a 95 percent detection rate.
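To make the blinking tell concrete, here is a minimal sketch of a blink-rate check. It is not Lyu's detector; it assumes some other tool has already labeled each video frame as eyes open or eyes closed, and the function names and the threshold of 5 blinks per minute are hypothetical values chosen only for illustration.

```python
from typing import Sequence


def count_blinks(eye_open: Sequence[bool]) -> int:
    """Count open-to-closed transitions in a per-frame eye-state sequence.

    The eyes are treated as open before the first frame, so a clip that
    starts with closed eyes counts as one blink.
    """
    blinks = 0
    previously_open = True
    for is_open in eye_open:
        if previously_open and not is_open:
            blinks += 1
        previously_open = is_open
    return blinks


def blinks_per_minute(eye_open: Sequence[bool], fps: float) -> float:
    """Convert the blink count into a rate using the clip's frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    if not eye_open:
        return 0.0
    duration_minutes = len(eye_open) / fps / 60.0
    return count_blinks(eye_open) / duration_minutes


def blinks_suspiciously_little(
    eye_open: Sequence[bool],
    fps: float,
    min_blinks_per_minute: float = 5.0,  # hypothetical threshold, not from Lyu
) -> bool:
    """Flag a clip whose subject blinks less often than the threshold."""
    return blinks_per_minute(eye_open, fps) < min_blinks_per_minute


def make_clip(total_frames: int, blink_starts: Sequence[int]) -> list:
    """Build a synthetic clip where each blink lasts three frames."""
    frames = [True] * total_frames
    for start in blink_starts:
        for i in range(start, min(start + 3, total_frames)):
            frames[i] = False
    return frames
```

For example, a one-minute clip at 30 frames per second with only two blinks, `make_clip(1800, [100, 700])`, would be flagged, while one with a blink every four seconds would not. A low blink rate is only a hint, since short clips and real people who blink rarely will also trip the check.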

That said, the technology behind deepfakes and the sophistication of those who want to employ them will only improve, so fighting the forces of public manipulation will remain a struggle.

4 signs you’re watching a deepfake video

Jonathan Hui, a writer who specializes in deep learning, pinpoints a few things to look for in possible deepfakes. Once a viewer slows a video down, they should look for the following things:

- Blurring evident in the face but not elsewhere in the video

- A change of skin tone near the edge of the face

- Double chins, double eyebrows, or double edges to the face

- Whether the face gets blurry when it’s partially obscured by a hand or another object
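The first sign on that list, a face that is blurrier than the rest of the frame, can be roughly approximated in code. The sketch below is one possible heuristic, not Hui's method: it compares the variance of a simple Laplacian (a common sharpness measure) inside a face box against the whole frame. It assumes the frame is already a grayscale grid of pixel values and that the face box comes from some separate face detector; the function names are hypothetical.

```python
from statistics import pvariance
from typing import List, Tuple

Gray = List[List[int]]  # grayscale frame as rows of pixel intensities


def laplacian_variance(gray: Gray) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels.

    Sharp, detailed regions give a high value; blurry or flat regions
    give a value near zero.
    """
    height, width = len(gray), len(gray[0])
    if height < 3 or width < 3:
        raise ValueError("region must be at least 3x3 pixels")
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    return pvariance(responses)


def crop(gray: Gray, top: int, left: int, height: int, width: int) -> Gray:
    """Cut a rectangular region out of the frame."""
    return [row[left:left + width] for row in gray[top:top + height]]


def face_sharpness_ratio(gray: Gray, face_box: Tuple[int, int, int, int]) -> float:
    """Sharpness of the face region divided by sharpness of the whole frame.

    face_box is (top, left, height, width). A ratio well below 1 suggests
    the face is blurrier than the frame overall. A completely flat frame
    gives no evidence either way, so 1.0 is returned in that case.
    """
    top, left, height, width = face_box
    face = laplacian_variance(crop(gray, top, left, height, width))
    whole = laplacian_variance(gray)
    return face / whole if whole > 0 else 1.0
```

What counts as "well below 1" is a judgment call that would need tuning on real footage, and ordinary effects such as shallow depth of field or motion blur can also lower the ratio, so this is a prompt for a closer look rather than proof of a fake.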

Can artificial intelligence spot deepfakes?

Some are attempting to build safeguards against the coming proliferation of deepfakes, using artificial intelligence to combat forgeries online.

For example, the AI Foundation has developed Reality Defender, a program that runs alongside other online applications, identifying potentially fake media.

Researchers at Germany’s Technical University of Munich developed an algorithm called XceptionNet that purports to spot fake videos online so they can be flagged and removed.

Of course, given the speed at which damning false reports can fly, it will take a lot of diligence from laypeople online to make sure damage is not done before one of these programs can out a fake.

Additionally, because social media uploads tend to compress files, these tactics lose efficacy when clips originate on Twitter or Facebook.

How to spot a deepfake on social media

At a panel at South by Southwest in March, Matthew Stamm, an assistant professor at Drexel University, discussed the difficulty. “There’s a lot of image and video authentication techniques that exist but one thing at which they all fail is at social media,” he said.

These techniques look for “really minute digital signatures” that are embedded in video files, Stamm added. But when a video file is shared on social media, it’s shrunk down and compressed. These processes are “forensically destructive and wipe out tons of forensic traces,” he said.

In the end, we might just be forced to rely on our own BS detector. But if the fake news epidemic of 2016 taught us anything, we are in for a rough ride.