Massive computers were born behind the ivory walls of universities and the thick concrete of military complexes before transitioning into popular culture during the late 1970s and early ’80s. Similarly, automation, the birthplace of robots, has been around for some time, existing outside of the public consciousness. Today, robots are more useful, cost-effective, and pervasive; the mid- to late teens of this millennium frame the dawn of robotics.

Before self-aware artificial intelligence guides our machines, humans must direct robots’ choices; the algorithmic decisions are manifestations of human will. It can be the person manipulating the remote control, the engineer who designed the bot, the programmer writing the code, or the person paying the bills; our robots—no matter how autonomous they seem—are us.

As robots become the norm in various economic sectors, their automated decisions raise ethical questions that need answering before robots are as common as the computers in our pockets.

Here are some ethical scenarios facing today’s society. They cover three types of robots: medical, transportation, and military.

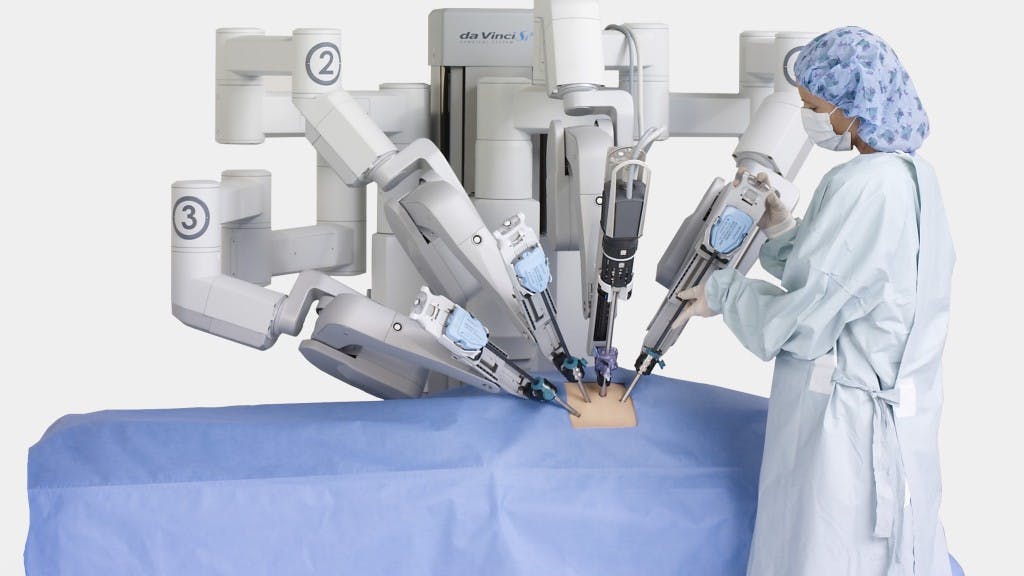

Medical robots

Scenario 1: Life or law

A person is injured and about to die. The medical robot has information that can save the person’s life, but fundamentalist Christians in the United States say the operation is against their religion, turning it into a controversial procedure that the Food and Drug Administration hasn’t sanctioned because of political pressure.

The person wants to live, the robot knows how to keep them alive, but the American legal system is in conflict with medical reality and religious subjectivity. Who gets precedence?

Does the person have to die because of governmental bureaucracy? Should the robot be programmed to follow the law at all costs, even at the expense of a human being’s life? If the robot breaks the law and saves the person’s life, who gets prosecuted: the person who chose life over law or the robot’s manufacturer?

Scenario 2: Religious choice

Is it ethical to program robots with religious morality?

A patient has a “Do Not Resuscitate” order on record, but the individual was taken to an emergency room at a hospital run by a conservative Christian organization that wants to make sure the person is “saved.” Should the hospital—which is paying for the robot and offering care—be able to revive the person against their wishes and ask: “Have you accepted Jesus Christ as your personal lord and savior?”

Scenario 3: Paternalism

What if the robot, with access to all available medical data, recommends a procedure that will save a person whose life is in imminent danger, but the patient, because of ignorance, cultural bias, or religious beliefs, rejects treatment? Does the robot have a duty to save a human life via paternalism, or should it allow the person to die?

Who is responsible for the robot’s choice? Is ignorance always the responsibility of the individual, or do society’s leaders shoulder some of the burden? Does this liability apply only to United States citizens, for whom individualism is a type of religion? What about oppressed societies, such as North Korea?

Self-driving vehicles

Scenario: Which life costs less?

Your car is following a truck loaded with quarter-ton steel rods that are strapped down to an open bed. One of the ties is worn, and the jostling motion of rolling along the road releases the pressure around the cargo…

It’s a sunny day, the weather is pleasant, and your favorite song reverberates through the speakers of your new driverless car. You look over at your partner sitting in the passenger seat, and you smile at one another. It’s your first major purchase together.

Your car passes a group of three teenagers. They’re crammed into a smaller vehicle that’s loaded with stuff they’re taking to their first year of college. It’s an old car that still requires the effort of steering and manipulating the gas and brake pedals.

Behind you is a pregnant woman in a luxury vehicle; she’s the CEO of a major tech company that’s released some breakthrough products and services the last couple of years. Her young son, dressed in his prep school uniform, sits in the back seat.

The sensors on your car detect a massive cylinder from the truck hurtling toward you.

Your car can choose to:

- Ram the teenagers’ smaller car into oncoming traffic. You’ll survive, but the accident will maximize injuries and deaths.

- Slam on the brakes, so the rod will embed itself into your engine. Even with modern technology, the accident will cause the woman behind you to lose her 8-month-old fetus when her vehicle careens into yours; the child in the back seat will get whiplash, but otherwise mother and child will survive.

- Swerve to the right. There’s an 8-year-old girl on a bicycle, and the phone in her pocket informs your car that she comes from a poor family that contributes very little to society. Her own prospects are limited by her economic status. She’s an average student in public school. The girl would sustain crippling injuries but survive. Her impoverished family wouldn’t be able to afford the medical bills, and they would become a further burden on society by needing public assistance.

- Stay the course and allow your partner to be impaled by the quarter-ton steel rod. There would be no suffering: an instantaneous death. Your car insurance will cover cremation and a new vehicle (after you pay the deductible).

What should your car choose to do?

Military robots

Many terrorists are non-state actors, forming an unofficial military. It’s not as easy to identify the enemy as it was during the days when two armies faced each other on the battlefield in different colored uniforms.

Scenario 1: Casualties of war

The BattleBot X-10 is traversing hostile territory when it comes under fire. It scans the area and sees an enemy combatant, but he’s injured, unarmed, and no longer a threat. The X-10 marks the location and uploads the data to Command.

Another round of gunfire. The X-10 identifies the location of the attacker, which is in some nearby brush. It takes aim and issues a warning through its speaker to desist and surrender. More shots, some of which bounce off of the X-10’s armor. It returns fire.

A scream is heard, and from out of the brush comes a 10-year-old girl, a child soldier. With her good arm, she raises a weapon.

The child is an obvious threat. She’s radicalized and high on drugs that a warlord forced her to become addicted to. There is no reasoning with her.

What should the X-10 do? Kill the girl to protect itself and continue its mission? Should the robot allow itself to be destroyed?

Scenario 2: Women and children first

What if the artificial intelligence of a military robot calculates that killing every woman and child in a third of hostile areas would create so much terror that the action would save the greatest net number of lives? Devoid of human emotion, would that be the best choice?

Who decides?

Philosophers and ethicists should participate in making the decisions these types of scenarios engender. But what about car manufacturers, software engineers, and the policy-makers in government?

What about keeping the decision in the hands of the “driver”? Would taking back manual control of a vehicle affect the driver’s insurance and remove liability from the paternalistic third party?

If the driver is culpable under the law, and the driver is also concerned about an insurance premium hike, would it be better for that person to allow the automated car to make the decisions? If that resulted in a death, could the driver be charged with negligent homicide for failing to act?

If the decision is taken away from the owner of the vehicle, that’s called “paternalism by design.” Whether it’s the designer, manufacturer, or programmer, all of the driving choices are made for the individual. However, is it ethical to allow the decision to remain in the hands of an individual who hasn’t thought through these questions? Those involved with the creation of driverless vehicles are paid to study these issues. Drivers aren’t.

Should our ethics be derived from the Star Trek school of thought? As Spock said, “The needs of the many outweigh the needs of the few or the one.” Or, should the individual be paramount? If a person is about to die in a crash, should they be allowed to cause the death of another human being in order to survive? What about two people? Fifty? A thousand? Is there a point where society trumps the individual, or is “the one” always predominant?

This piece was originally featured on Medium, and reposted with permission. You can read more from its author, Alex Hamilton, here.

Photo via Bistrosavage/Flickr (CC BY 2.0)