Minnesota Vikings quarterback Teddy Bridgewater left a team practice Tuesday under mysterious circumstances. All that was known was that he was hurt, and the injury was apparently serious.

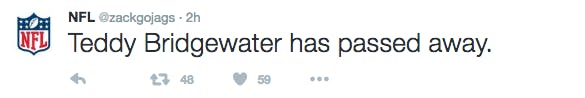

In the absence of real information, in stepped the internet.

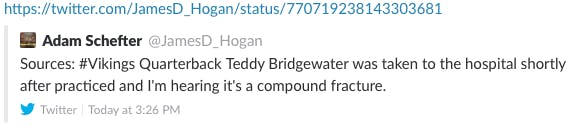

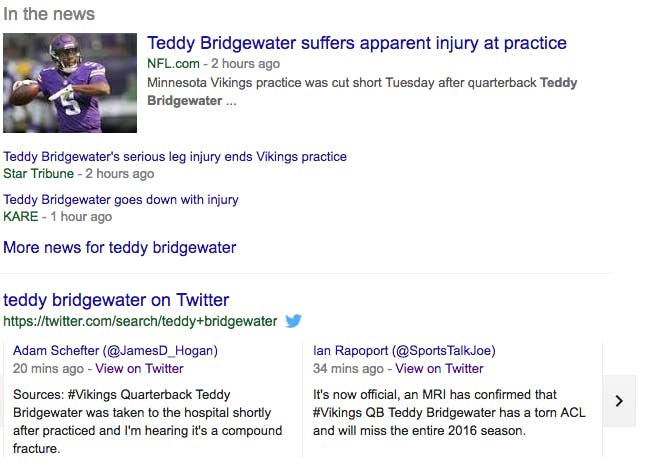

Twitter is the platform on which almost all news breaks, especially in the sports world, as reporters rush to be the first with new information. Unsurprisingly, in the wake of the Bridgewater injury, the most retweeted information came from ESPN reporter Adam Schefter and NFL Network reporter Ian Rapoport.

What was weird about the tweets from the two reliable reporters, which racked up hundreds—or in the case of Rapoport, thousands—of retweets and spread the information far and wide, was that the information seemed to be conflicting.

Rapoport suggested Bridgewater would miss the 2016 NFL season with a torn ACL, while Schefter reported it was a compound fracture that sent the Vikings QB to the hospital.

Neither of those tweets turned out to be genuine, because neither came from the actual reporters. Twitter users in search of a little attention swapped every part of their profiles, including their names and avatars, to give the appearance they were delivering news.

Two hours later, the Vikings held a press conference that didn’t provide much in the way of detail, but was enough to debunk the two fake tweets (it was later reported that Bridgewater had, in fact, torn his ACL, ending his season).

But the damage had already been done. The false information had spread widely, had been copied by other unofficial Twitter accounts, and had even been picked up by local news and online outlets.

Most fans, aching for the latest update about their favorite teams or making last-minute changes to their fantasy draft board, didn’t bother to take the time to scope out the blue checkmark that marks verified accounts or glance at the Twitter handle that quickly reveals the true account identity.

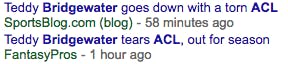

But Google also helped make sure the false information reached as many people as possible. The search engine, which handles 40,000 search queries every second, gave plenty of people looking for information about Bridgewater the wrong impression.

Built into Google’s search results is an automatically populated list of popular tweets about the search term. The results from Twitter appear especially when relevant to a news story, like Bridgewater’s injury. Unhelpfully, the first tweets Google displayed were the popular but fake reports.

Twitter does have something of a mechanism built into its platform to prevent the spread of bad information: When a topic trends, the results Twitter generally chooses to highlight are not just those with a lot of retweets, but those from verified and trustworthy accounts.

But it doesn’t take much scrolling to find the fake accounts, either.

It’s not shocking that this type of information spreads so quickly in a situation like Bridgewater’s injury. All the reports from the Vikings practice indicated it was serious: Bridgewater fell to the turf despite taking no contact, players took off their helmets and gathered together for him, and an ambulance rushed to the scene to take him away.

People will cling to any details they can find when information is so sparse. But ideally, the systems in place for delivering that information would be better suited to filter out the nonsense.

Instead, those systems promoted two accounts that have pulled similar stunts in the past, though with less success. And in the case of the fake Ian Rapoport account, which actually belongs to @SportsTalkJoe, they gave a new audience to an account that regularly posts offensive and borderline racist tweets.

While Facebook has taken a considerable amount of flak for allowing the human hand to guide its otherwise algorithmically generated trending topics, situations like this make clear the benefit of having humans involved in the vetting process, though even that can fail.

It’s no surprise that celebrity death hoaxes are so common.

The Daily Dot reached out to Google and Twitter for comment on steps the companies take to prevent the spread of false information. Neither responded at the time of publication.