Google is bringing its AI-assisted image recognition app to the iPhone. The company will add Google Lens to the Google Photos app on iOS over the coming days. A natural next step for Google, Lens is essentially a search engine that uses images instead of words.

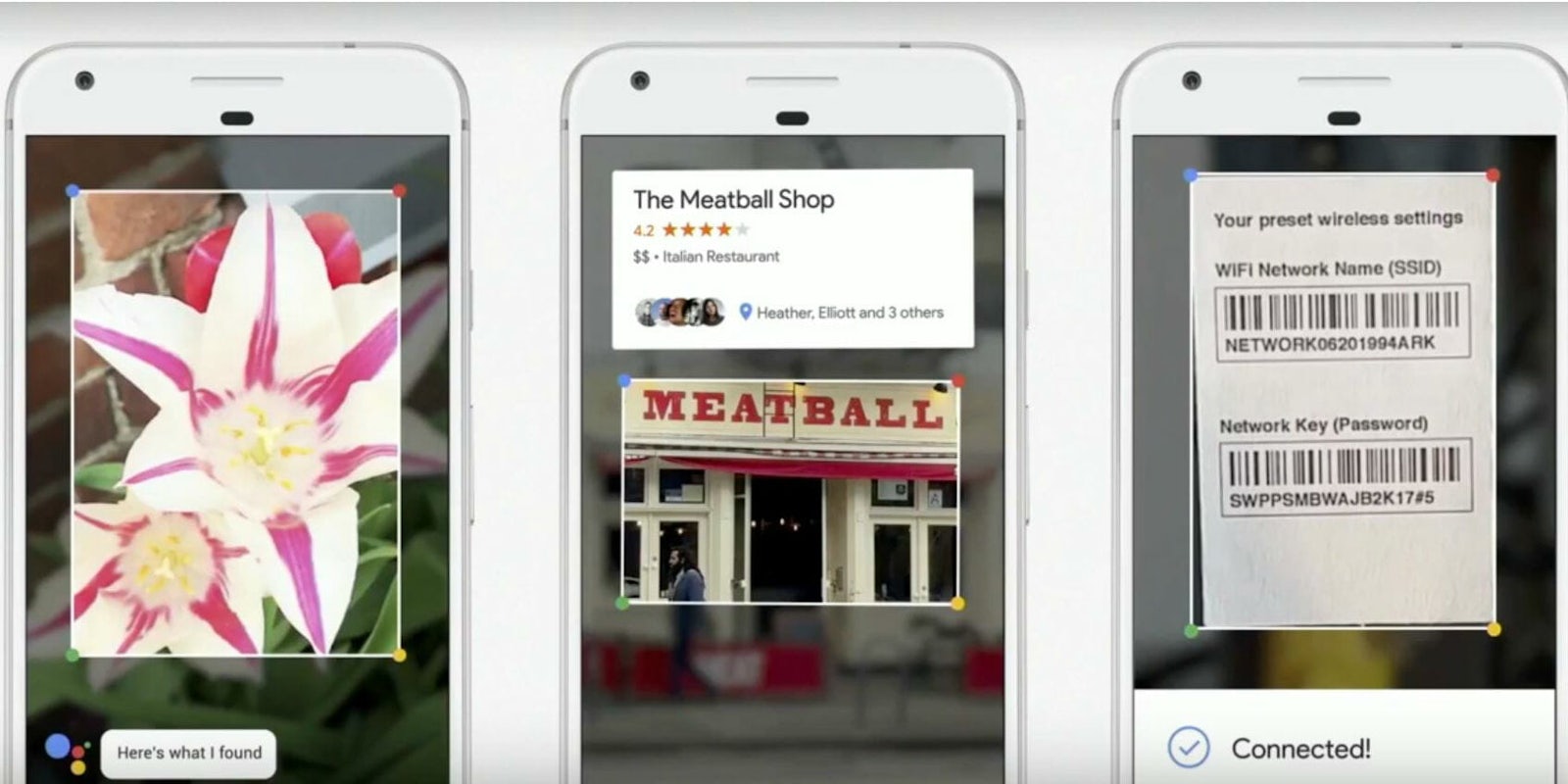

First announced at the Google I/O developer conference last year, Lens blends AI and AR to enhance our interactions with the world around us. Users simply point their smartphone camera at an object, and Lens gives them contextual information about it. The feature can also identify objects in photos that have already been taken. Lens can pull up details about landmarks, restaurants, buildings, paintings in a museum, plants, animals, and advertisements.

How to use Google Lens on iOS

iPhone and iPad owners who want to access Google Lens will need to download the Google Photos app. If you already have the app, make sure it's updated to version 3.15 and that the language is set to English. Once the app is ready, open it, select an image, and tap the newly added Lens icon (it looks like the Instagram logo). You'll have to wait a few moments for it to work its magic. When it's done analyzing, Lens will give you contextual information about the objects it found in the photo.

Starting today and rolling out over the next week, those of you on iOS can try the preview of Google Lens to quickly take action from a photo or discover more about the world around you. Make sure you have the latest version (3.15) of the app. https://t.co/Ni6MwEh1bu pic.twitter.com/UyIkwAP3i9

— Google Photos (@googlephotos) March 15, 2018

For example, if you use Lens on an image of a restaurant, it will pull up relevant info such as its name, hours of operation, menu, and user ratings. If you're in an art museum, you can scan works to learn more about their history. If you're not sure about a certain plant, Lens can identify it and tell you whether it's poisonous. One particularly interesting feature lets you connect to a Wi-Fi network by scanning the password label on a router.

Lens integrates with the Google Assistant so you can take action on the information you've just acquired. In the example Google offered, a woman holds up a business card with someone's name, title, and contact information. Lens collects that information into a single text file and lets users add it to their contacts list with a single tap.

Lens was first released on Google Pixel devices in October 2017 and was made available on all Android devices on March 5.