Google’s AI branch built a new artificial intelligence capable of learning how to walk, run, jump, and climb, without receiving specific instructions.

DeepMind, the company whose AI beat the world’s greatest Go player, described in a blog post how it plans to create artificial intelligence with enough physical smarts to master sophisticated motor skills.

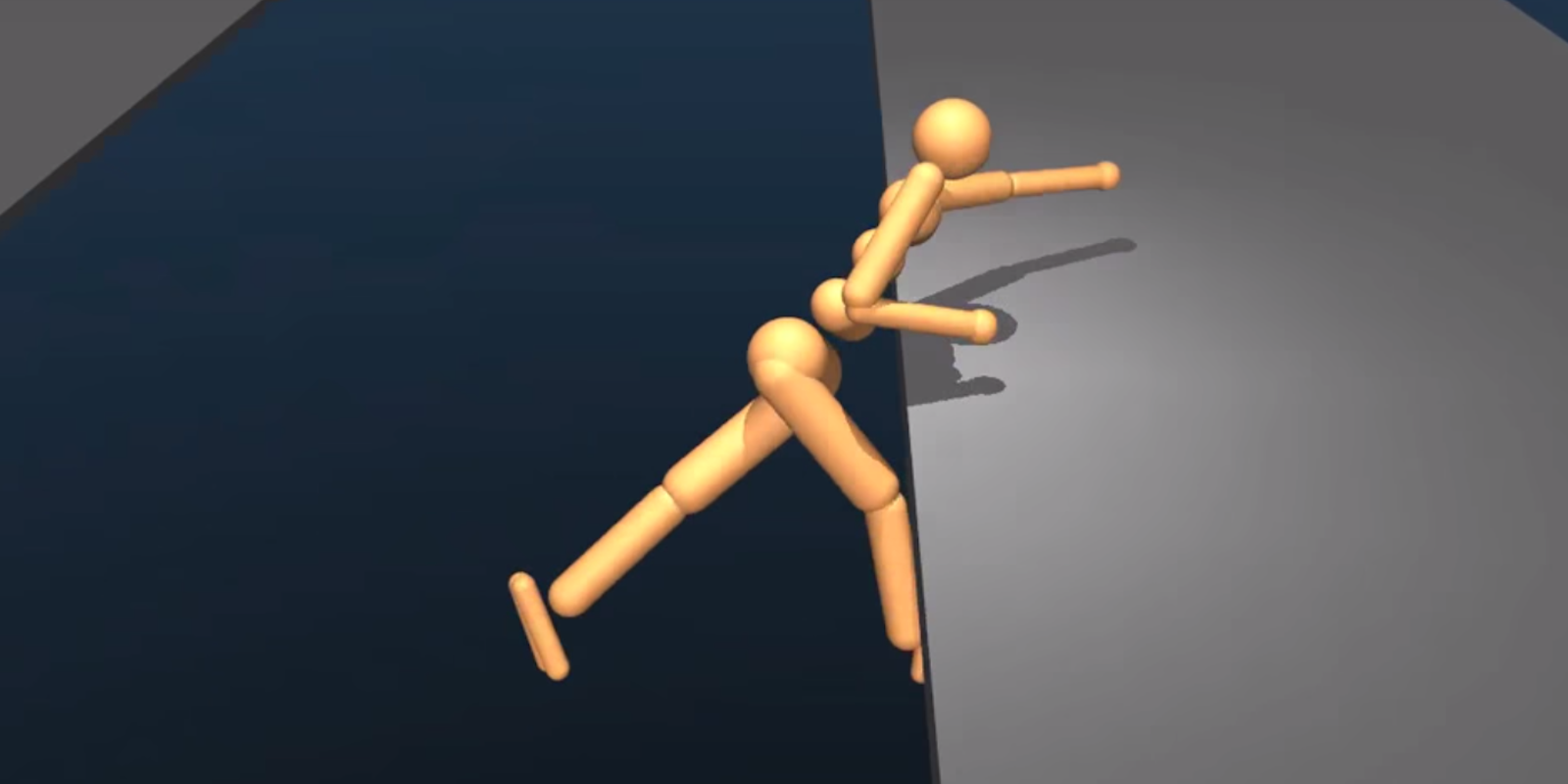

The company created several strange AI body types and tested them on diverse digital terrains, or obstacle courses, to see how well they could adapt to different environments. DeepMind posted a video of the results, which are as hilarious as they are terrifying.

Each of the figures was given sensors that told it how its body was positioned. A set of simple reward signals was used to encourage the agents to get through the course. Everything else was left to the AI, which had to learn for itself how to keep moving forward through the obstacles without being terminated.
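To make the idea of a "simple reward signal" concrete, here is a minimal Python sketch. It is not DeepMind's code. It assumes, purely for illustration, that the reward is the forward distance gained in each step and that an episode is terminated when the torso drops below a height threshold. The names `BodyState`, `step_reward`, `episode_return`, and `min_height` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BodyState:
    # Hypothetical sensor readings; the real agents sense far more than this.
    x_position: float    # distance travelled along the course
    torso_height: float  # height of the torso above the ground


def step_reward(prev, curr, min_height=0.3):
    """Return (reward, terminated) for one simulation step.

    Assumption: reward equals forward progress, and the episode ends
    when the torso falls below min_height (the agent has fallen over).
    """
    reward = curr.x_position - prev.x_position
    terminated = curr.torso_height < min_height
    return reward, terminated


def episode_return(states, min_height=0.3):
    """Sum the step rewards until the episode ends or is terminated."""
    total = 0.0
    for prev, curr in zip(states, states[1:]):
        reward, terminated = step_reward(prev, curr, min_height)
        total += reward
        if terminated:
            break  # nothing after a fall counts toward the return
    return total
```

Under these assumptions the agent is never told how to walk. It only collects more reward the further it gets before falling, so any gait that keeps it upright and moving forward gets reinforced.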

For now, the AI is still in its innocent-baby phase, but DeepMind says its ultimate goal is to “produce flexible and natural behaviors that can be reused and adapted to solve tasks.” The advances it makes could then be built into future robots to make them move more like humans.

You’ll be relieved to know that DeepMind is also working with OpenAI, the research group co-founded by Elon Musk, to try to prevent the robot takeover.