Humans have already demonstrated the ability to create some seriously secure encryption algorithms, but that wasn’t enough for the Google Brain team. So, instead of relying on those soft, mushy human brains, Google engineers decided to let a trio of AI minds put their own encryption skills to the test.

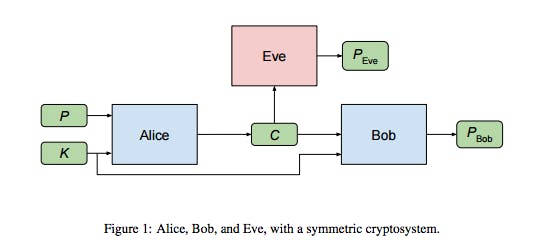

Starting with three different AIs named Alice, Bob, and Eve, Google gave specific goals to each: Alice was tasked with sending Bob a message, and it was Eve’s job to intercept and decode it. Both Alice and Bob were given matching keys with which to encode and decode their conversation, while Eve had to attempt to translate the encrypted message into plaintext without the key.

The important part here is that Alice had to come up with her own encryption algorithm, but neither Bob nor Eve was given that information. Bob didn’t have any idea how the key should be applied to the encrypted message, and Eve was basically working completely from scratch.
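To make the three roles concrete, here is a minimal Python sketch of the setup. It is not the Google Brain team's method: in the experiment Alice, Bob, and Eve are neural networks that learn their behavior, whereas this toy hard-codes a simple XOR cipher as a stand-in for whatever Alice comes up with, and Eve is reduced to random guessing. The function names, the 16-bit message length, and the `bit_errors` scoring helper are all assumptions made for illustration.

```python
import secrets

N_BITS = 16  # message and key length; an arbitrary choice for this sketch


def random_bits(n):
    """Return a list of n random bits."""
    return [secrets.randbits(1) for _ in range(n)]


def alice_encrypt(plaintext, key):
    # Hypothetical stand-in for Alice's learned scheme: XOR each bit with the key.
    return [p ^ k for p, k in zip(plaintext, key)]


def bob_decrypt(ciphertext, key):
    # Bob holds the same key as Alice, so he can undo the XOR.
    return [c ^ k for c, k in zip(ciphertext, key)]


def eve_guess(ciphertext):
    # Eve sees only the ciphertext. This placeholder ignores it and guesses
    # every bit at random; the real Eve is a network that tries to do better.
    return [secrets.randbits(1) for _ in ciphertext]


def bit_errors(original, reconstruction):
    """Count the positions where the reconstruction differs from the original."""
    return sum(a != b for a, b in zip(original, reconstruction))


plaintext = random_bits(N_BITS)
key = random_bits(N_BITS)
ciphertext = alice_encrypt(plaintext, key)

bob_errors = bit_errors(plaintext, bob_decrypt(ciphertext, key))
eve_errors = bit_errors(plaintext, eve_guess(ciphertext))

print(f"Bob got {bob_errors} of {N_BITS} bits wrong")
print(f"Eve got {eve_errors} of {N_BITS} bits wrong")
```

With the key in hand, Bob recovers every bit. Eve's score varies from run to run, and a keyless guesser like this one should average about half the bits wrong, which is no better than flipping coins.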

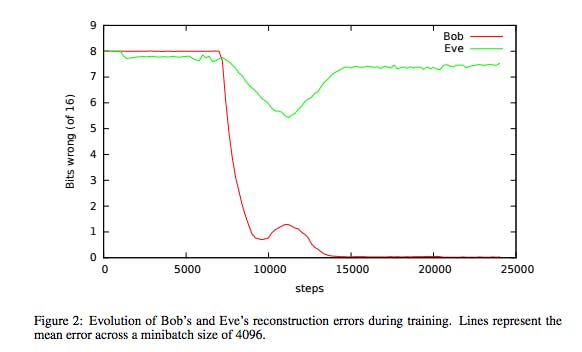

At first, neither of the AI recipients was able to decode the message with any degree of reliability, but Bob soon cracked the code with the help of his key. Eve, on the other hand, struggled longer, but eventually started to hash things out. That is, until Alice tweaked the encryption and Eve once again hit a brick wall.

The results of the testing show that AI can decode encrypted text with little or no guidance, but that it’s also relatively easy to fool a blind AI that is forced to guess how to solve the problem. The team notes in its report (PDF) that “While it seems improbable that neural networks would become great at cryptanalysis, they may be quite effective in making sense of metadata and in traffic analysis.”

In short, AI is great at encryption, but not so great at blind decryption, at least for now.

H/T Ars Technica