After the criticism that followed the presidential election, Facebook has publicly stated that it is looking for solutions that tackle fake news. It now appears the social media platform filed a patent for exactly this type of technology in 2015.

Patent 0350675 describes “systems and methods to identify objectionable content.” It is aimed primarily at detecting and removing porn, trolling, and hate speech, although the same kind of tool could be used to take down fake news stories. The patent document, published on Thursday by the United States Patent and Trademark Office, uses flow diagrams and early design concepts to describe how the technology might work.

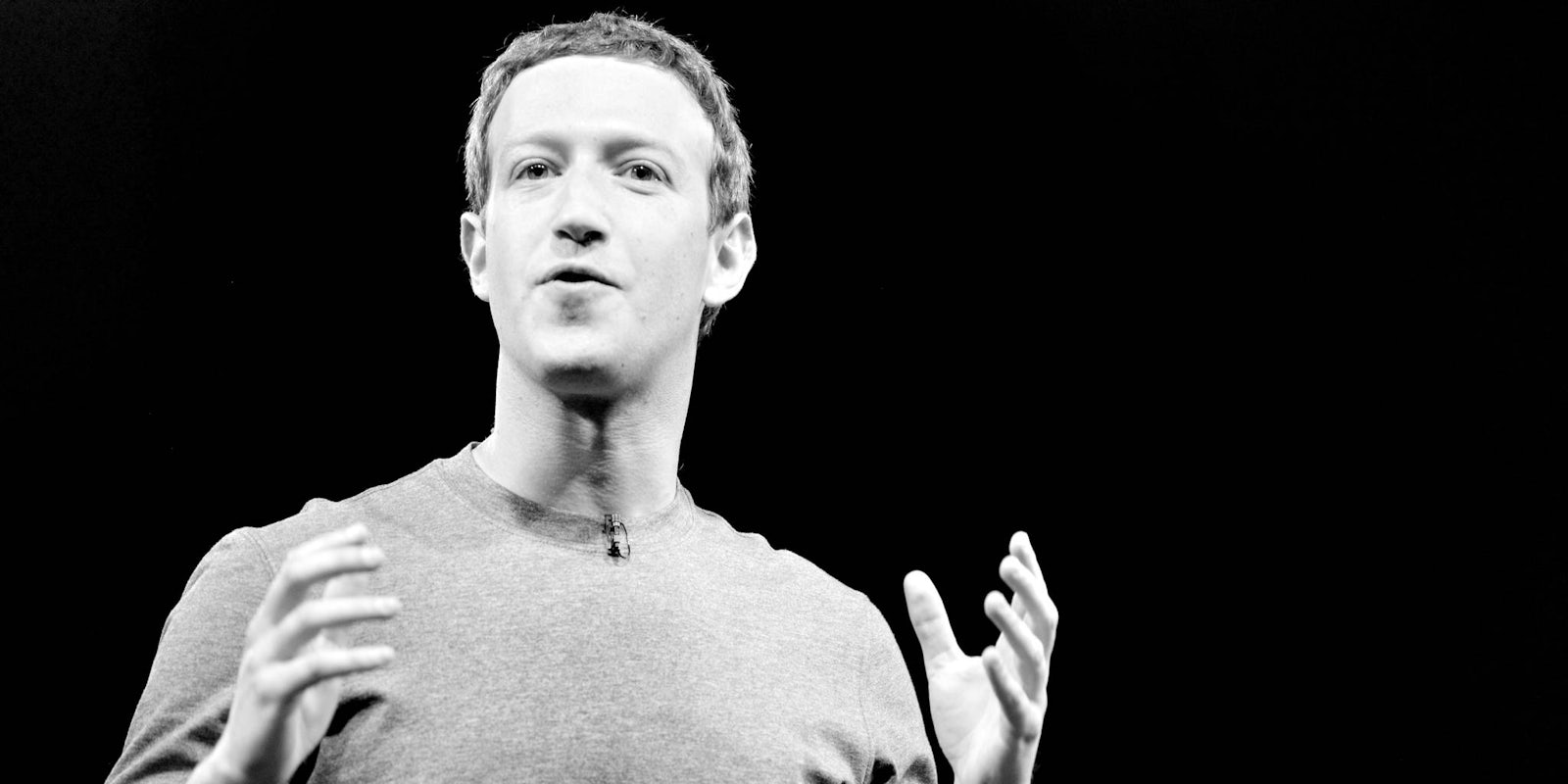

While the patent, which was filed in June 2015, awaits approval, Facebook CEO Mark Zuckerberg has continued trying to reassure users and the media that his company is actively fighting misinformation on the site. In a statement published just last month, the Facebook founder described some of the strategies the company was using to counter misinformation without putting too much faith in an algorithmic solution.

“Historically, we have relied on our community to help us understand what is fake and what is not,” Zuckerberg wrote. “Anyone on Facebook can report any link as false, and we use signals from those reports along with a number of others… to understand which stories we can confidently classify as misinformation. Similar to clickbait, spam and scams, we penalize this content in News Feed so it’s much less likely to spread.”

This patent, however, indicates that an automated solution was, or still is, under consideration at the social network, perhaps as a supplement to its current system of user reporting. Most of the technical concepts in the patent documentation outline an artificial intelligence system that would learn to identify propaganda or questionable content and then use a score-based system to decide what to do with that content.
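To make the idea of a score-based decision more concrete, here is a minimal Python sketch. It is purely illustrative and not taken from the patent: it assumes that some trained model has already given each post a score from 0 to 1, where higher means more likely to be objectionable. The `decide_action` function, the three actions, and the threshold values are all hypothetical.

```python
from enum import Enum


class Action(Enum):
    """Hypothetical moderation outcomes (not from the patent)."""
    ALLOW = "allow"
    DEMOTE = "demote"   # keep the post but make it less likely to spread
    REMOVE = "remove"


# Hypothetical thresholds, chosen only for illustration.
DEMOTE_THRESHOLD = 0.5
REMOVE_THRESHOLD = 0.9


def decide_action(score: float) -> Action:
    """Map an objectionability score in [0, 1] to a moderation action.

    Assumes an upstream classifier has already produced `score`.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    if score >= REMOVE_THRESHOLD:
        return Action.REMOVE
    if score >= DEMOTE_THRESHOLD:
        return Action.DEMOTE
    return Action.ALLOW


if __name__ == "__main__":
    for s in (0.1, 0.6, 0.95):
        print(s, decide_action(s).value)
```

Fixed thresholds are simply the easiest way to show the concept; a production system would presumably tune them. The middle "demote" tier loosely mirrors Zuckerberg's description of penalizing content in News Feed rather than deleting it outright.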

Whatever approach it takes, the company wants to retain its position as a leading news source for users. So while it's in Facebook's best interests to provide editorially sound content, there is a lot at stake if its reputation as a neutral platform slips. Just this year the company hastily fired its entire team of human editors to get back onside with users after it was accused of bias and of suppressing conservative news.

It's clear that while Facebook feels pressure to show that its teams are doing something about fake news, deploying the solutions it already has on hand carries real risks that could ultimately damage its profits and value.