A team of engineers and designers in Switzerland is poised to change the way people can create 3D models of humans for things like virtual reality and telepresence. They built an app to create dynamic and detailed human avatars simply by using a smartphone.

“What makes our current system so effective is that it is tailored specifically to modeling the appearance and the dynamics of human faces,” Alexandru-Eugen Ichim, researcher and coauthor of the paper that describes the process, told the Daily Dot in an email. “Each stage of the process is customized to the specificities of faces such as their appearance and motion dynamics.”

Ichim began working on this project as part of his Ph.D. at the Computer Graphics and Geometry Laboratory at EPFL, a technical university in Switzerland.

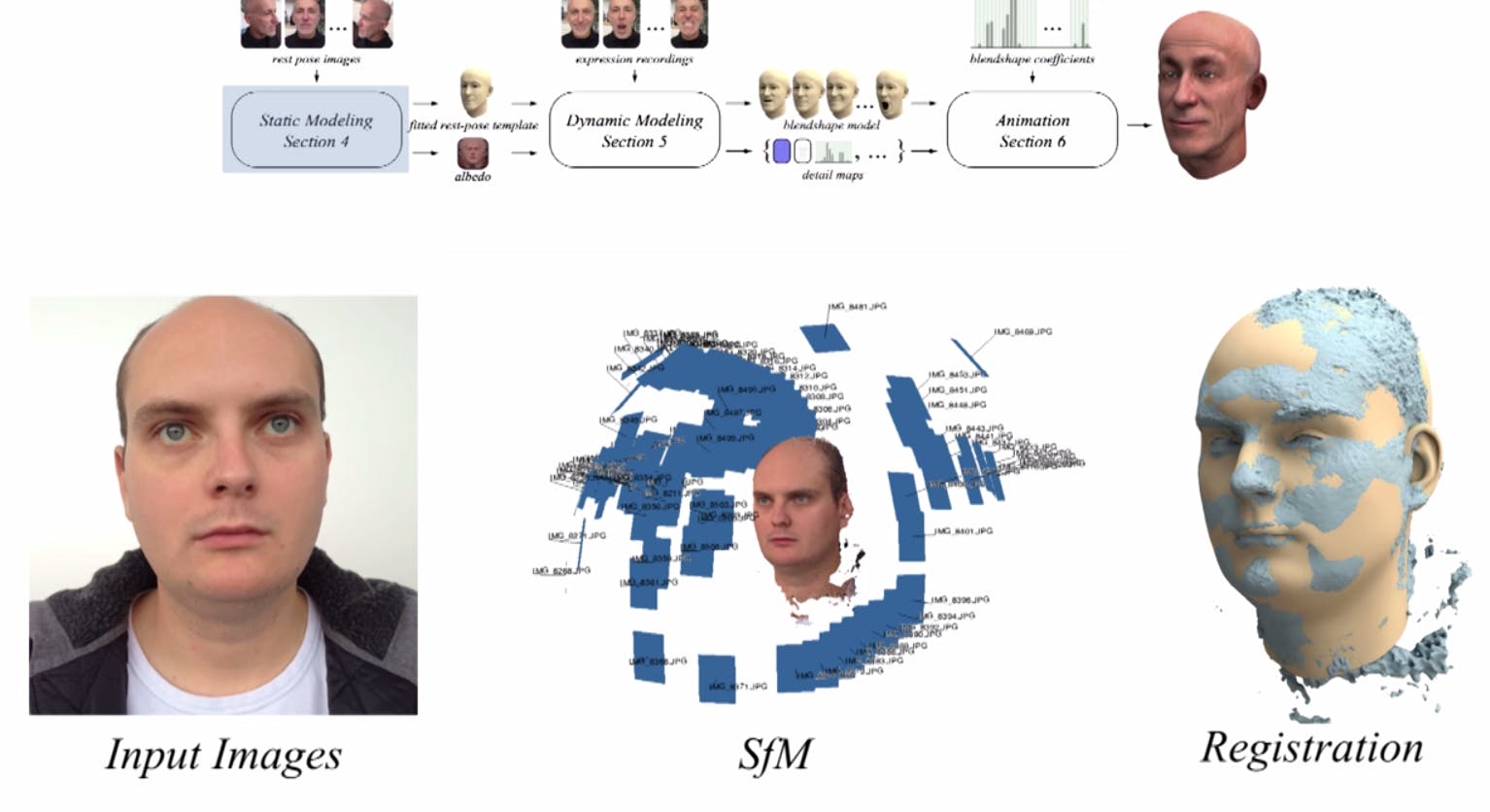

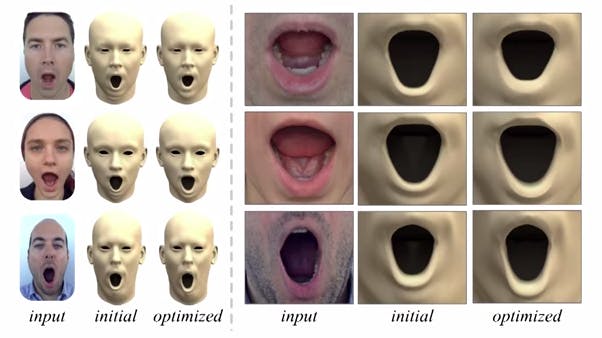

The team created a three-step “pipeline” for modeling faces, making it fairly simple to create lifelike virtual models of people’s heads, all the way down to micro expressions, like the hint of a raised eyebrow or a wrinkle that forms around pursed lips. The software takes an avatar through static modeling, dynamic modeling, and animation.

Creating individualized 3D graphics through facial rigging is incredibly difficult. Designers create a “rig,” or a skeleton that corresponds to every character, and the character’s face and expressions are mapped onto the rig. Capturing and mirroring accurate facial expressions to avatars is challenging and prohibitively expensive for many designers—which is why characters’ expressions in video games often look similar.

Ichim’s team is democratizing the creation of individualized 3D graphics, making it cheaper and more accessible. All you need is a mobile device.

First, a person takes a series of selfies from all around the head, captured in burst mode. Next, the user records a video while making a variety of facial expressions. After running the photos and video through proprietary mapping software and facial rigging algorithms, the application can create a completely responsive animated avatar. Researchers described the entire process in a YouTube video.

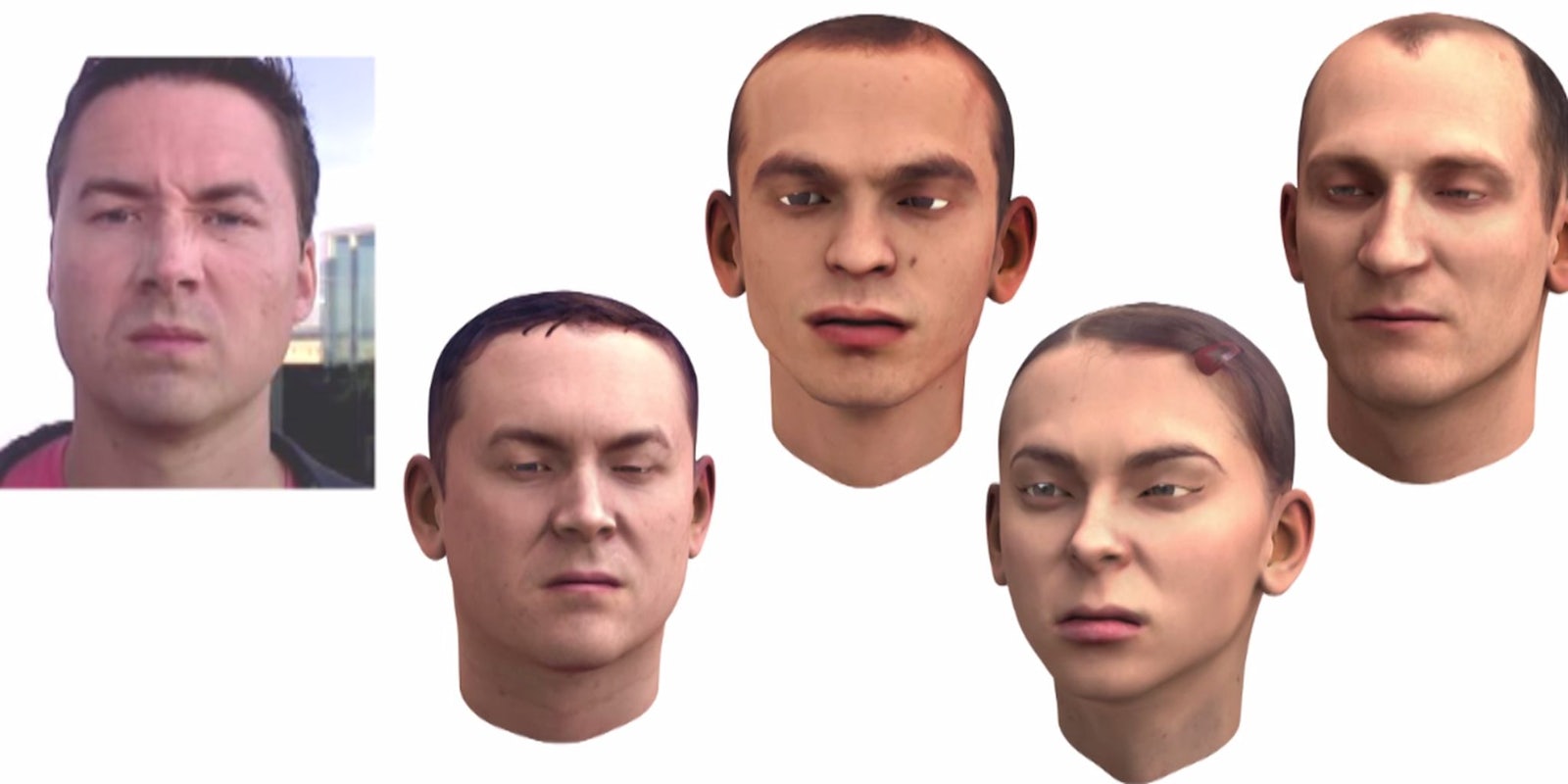

Because individuals record their facial expressions before creating the dynamic avatar, the tech can copy the expression and optimize it for the animated output. Although three people might be making the same shape with their mouths, it will render differently on each avatar based on the initial face scan.
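To see why the same expression can look different on each avatar, here is a minimal Python sketch. It assumes a simple linear "blendshape" rig, a common technique in facial animation that the article does not confirm the team uses. The function `apply_expression`, the two-vertex meshes, and all the numbers are hypothetical, chosen only for illustration.

```python
from typing import Dict, List

Vertex = List[float]  # one 3D point: [x, y, z]
Mesh = List[Vertex]   # a face mesh as a list of vertices


def apply_expression(neutral: Mesh,
                     deltas: Dict[str, Mesh],
                     weights: Dict[str, float]) -> Mesh:
    """Return neutral + sum(weight * delta) over the named expressions."""
    result = [list(v) for v in neutral]  # copy so the neutral scan is untouched
    for name, weight in weights.items():
        for i, offset in enumerate(deltas[name]):
            for axis in range(3):
                result[i][axis] += weight * offset[axis]
    return result


# Two hypothetical people, each with a tiny 2-vertex "mouth" from the static scan.
alice_neutral = [[0.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
bob_neutral = [[0.0, 0.0, 0.0], [3.0, 0.0, 0.0]]

# Hypothetical per-person offsets for a "smile", standing in for what the
# recorded expression video would provide.
alice_deltas = {"smile": [[-0.5, 0.5, 0.0], [0.5, 0.5, 0.0]]}
bob_deltas = {"smile": [[-0.25, 1.0, 0.0], [0.25, 1.0, 0.0]]}

# The same expression signal drives both avatars.
same_weights = {"smile": 0.5}

alice_smile = apply_expression(alice_neutral, alice_deltas, same_weights)
bob_smile = apply_expression(bob_neutral, bob_deltas, same_weights)
```

Both avatars receive an identical "half smile" signal, but because each starts from its own neutral scan and its own recorded offsets, the resulting mouth shapes differ.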

“We believe that correctly modeling the human face, and especially nailing the realism of its motions is one of the most important technologies for VR and computer graphics right now,” Ichim said. “Furthermore, our application allows untrained users to capture their own faces using digital cameras or smartphones, which makes our technology accessible for a larger audience.”

Imagine putting on a VR headset while an avatar appears in a virtual world—but instead of picking from a set of pre-rendered avatars, your own face is what people see when they come in contact with your avatar. You’ll be able to better engage with other people by articulating your thoughts and feelings through facial expressions you’ve pre-programmed into your avatar.

Though this 3D-mapping technique could eventually be used for capturing other bits of the surrounding environment, Ichim said that for now they are focused exclusively on faces. The creation of true-to-life dynamic animation is the most difficult problem to solve—a chair, couch, or mountain doesn’t make mappable micro expressions.

Eventually, Ichim wants everyone to be able to create their own avatars for VR applications.

“Virtual reality is one of the main target ecosystems of our work,” he said. “As cameras have become cheap and 2D-processing tools accessible for the mass markets, we foresee times where creating good quality 3D animation will not be limited to only game or movie studios with large budgets.”

The team is collaborating with Faceshift, another EPFL startup, which works on rigging facial animations to 3D characters. For now, researchers are still gathering feedback on the project and plan to release an alpha version of the app later this year.

Screengrab via LGG EPFL/YouTube