A string of clips circulating on social media praising First Son Barron Trump for his America’s Got Talent audition has been drawing boomers in. There’s only one problem: none of them are real.

Why do people think Barron Trump sang on America’s Got Talent?

In recent days, videos have been popping up across YouTube and Facebook claiming to show Barron Trump performing on popular reality shows.

“Unbelievable! Barron Trump Stuns at AGT 2025 with His Inspiring Journey Greenland with Donald Trump” reads the title of one 22-minute-long video that’s racked up over 411,000 views and nearly 1,900 comments in just four days.

Another similarly claims Trump’s singing “SHOCKS Everyone” on American Idol, but adds an important bit of clarification at the end of the title: “AI cover.”

Whether they bother to note it or not, every single one of the videos claiming to show Trump’s “inspiring,” “shocking,” or “emotional” audition for the show is created with artificial intelligence. Some include clips from the actual show featuring the judges and crowd. Some even pull in B-roll footage of the Trump family to create the narrative they’re trying to sell.

But the “Barron Trump” standing up on that stage is a digital creation, as are the songs he allegedly sings. As of publication, he has never appeared on America’s Got Talent, American Idol, or any other talent competition, nor has there been anything to suggest he has any creative inclinations or talents.

Are people falling for the Barron Trump deepfakes?

AI-generated videos painting the 18-year-old NYU student as a musical prodigy have been circulating in various forms for at least the last several months. Snopes previously debunked videos posted in late 2024 that “imagined” Trump singing Christian music in honor of his father.

Snopes also drew attention to the comments on those videos. Most express support for the Trump family, but many come from suspicious-looking accounts that may well be fake themselves.

Watching any single video should make it obvious that it’s fake. In some, Trump’s mouth doesn’t match the audio. In all of them, his image falls into uncanny valley territory—something that doesn’t quite look human, even to those who can’t articulate exactly why that is.

“This is a weird AI generated video,” u/nomercyvideo wrote on a since-deleted Reddit post, “who falls for this stuff?!”

Without rigorous auditing of the accounts engaging with and circulating these videos, it’s extremely difficult to gauge how many real people are falling for these AI deepfakes. But if nothing else, seeing @johnsmith1234567890 and 2,000 other YouTube commenters responding to a video as if it’s the real deal provides a layer of credibility that non-tech-savvy people may take at face value.

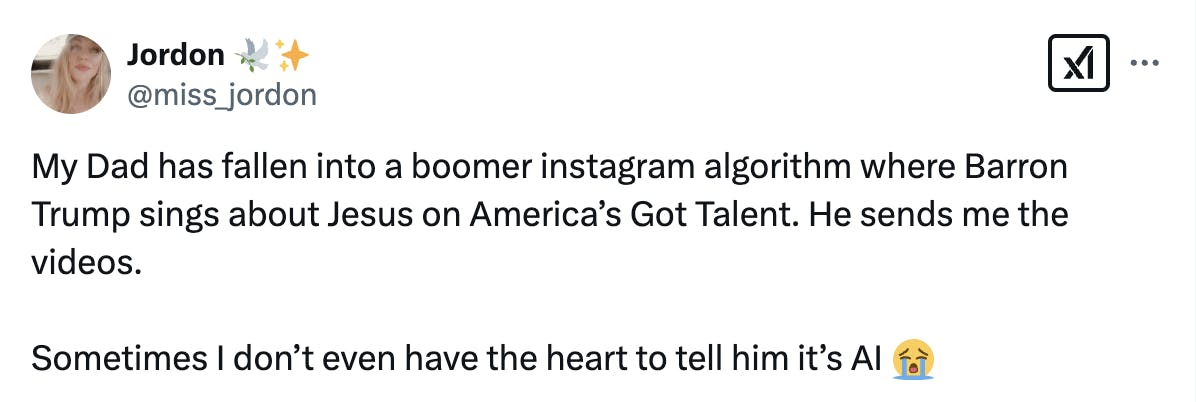

At any rate, we do know that the number of actual humans buying into these videos is greater than zero.

Portuguese politician shared an AI video of Barron Trump on America’s Got Talent believing it was real.

You just can’t make this up… https://t.co/z6xcZPBfPg

Rhain (@Rhain1898), February 17, 2025

Why does any of this matter?

At the moment, deepfakes are hit or miss when it comes to genuinely fooling people who are paying attention. That will likely change in the future. But even these bizarre, halfway-there videos are cause for concern.

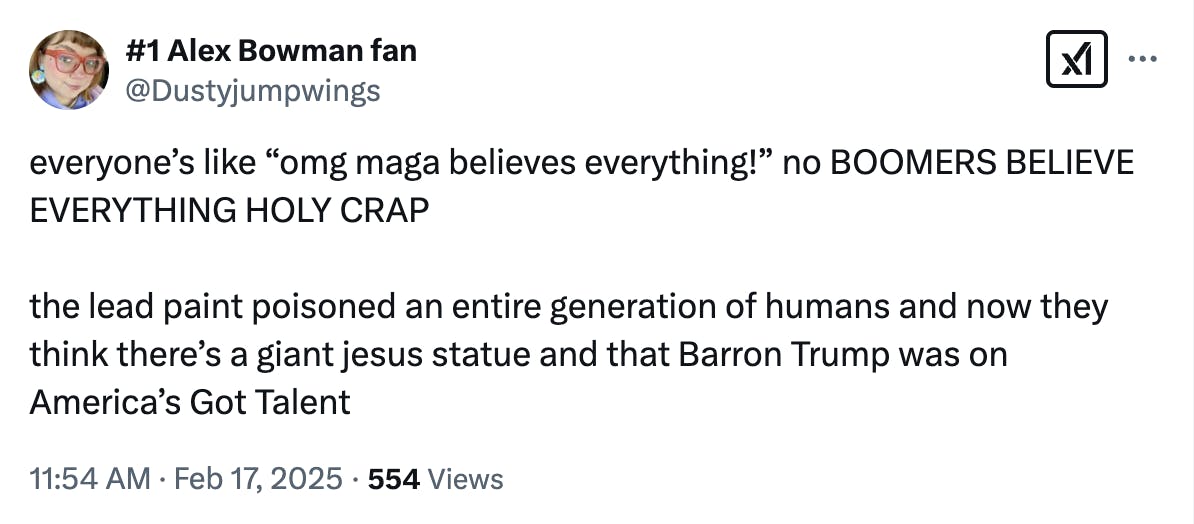

The last few years have seen a proliferation of “fake news” across social media. In the months surrounding the 2020 election, misinformation drew six times as many clicks on Facebook as factual news. Even before taking deepfakes into consideration, we’d already entered an era where false information was readily available and rapidly spread by people who seemed increasingly unable to tell the difference between what’s real and what’s fake online. Or perhaps they simply weren’t interested in sussing out the facts.

Deepfakes flooding social media sites are only going to exacerbate the problem. Not necessarily because they’re already convincing—although some certainly are—but because they are further desensitizing everyone to the idea of unreality. What’s the harm in sharing a video of Barron Trump singing, even if it’s not him?

Of course, there’s a ripple effect. Engaging with AI-generated content can fill a person’s algorithm-driven social media feed with more of the same. Ad revenue, or a growing following of actual human users, can incentivize deepfake creators to keep churning out more. That pushes genuine content down in search results and builds the influence these channels wield, and there is no guarantee that influence will be used only for “harmless” content forever.

“We’re all living through a great enshittening, in which the services that matter to us, that we rely on, are turning into giant piles of shit,” writer Cory Doctorow said in an article explaining his now-famous theory of enshittification. “It’s frustrating. It’s demoralizing. It’s even terrifying.”

Every tech company that forces AI integration seems to be adding to this problem. Many Google searches now immediately return an “AI Overview” with information that is frequently partially or completely incorrect, and Google handles approximately 8.5 billion searches every single day.

The intent behind AI-generated content isn’t always malicious. But it does seem to be making the internet a dumber place, where it’s more difficult than ever to sort fact from fiction. And whether people can’t tell that videos of Barron Trump singing to Simon Cowell are fake or just don’t care as long as they’re entertained for 15 seconds, it doesn’t bode well for the future of online information.
