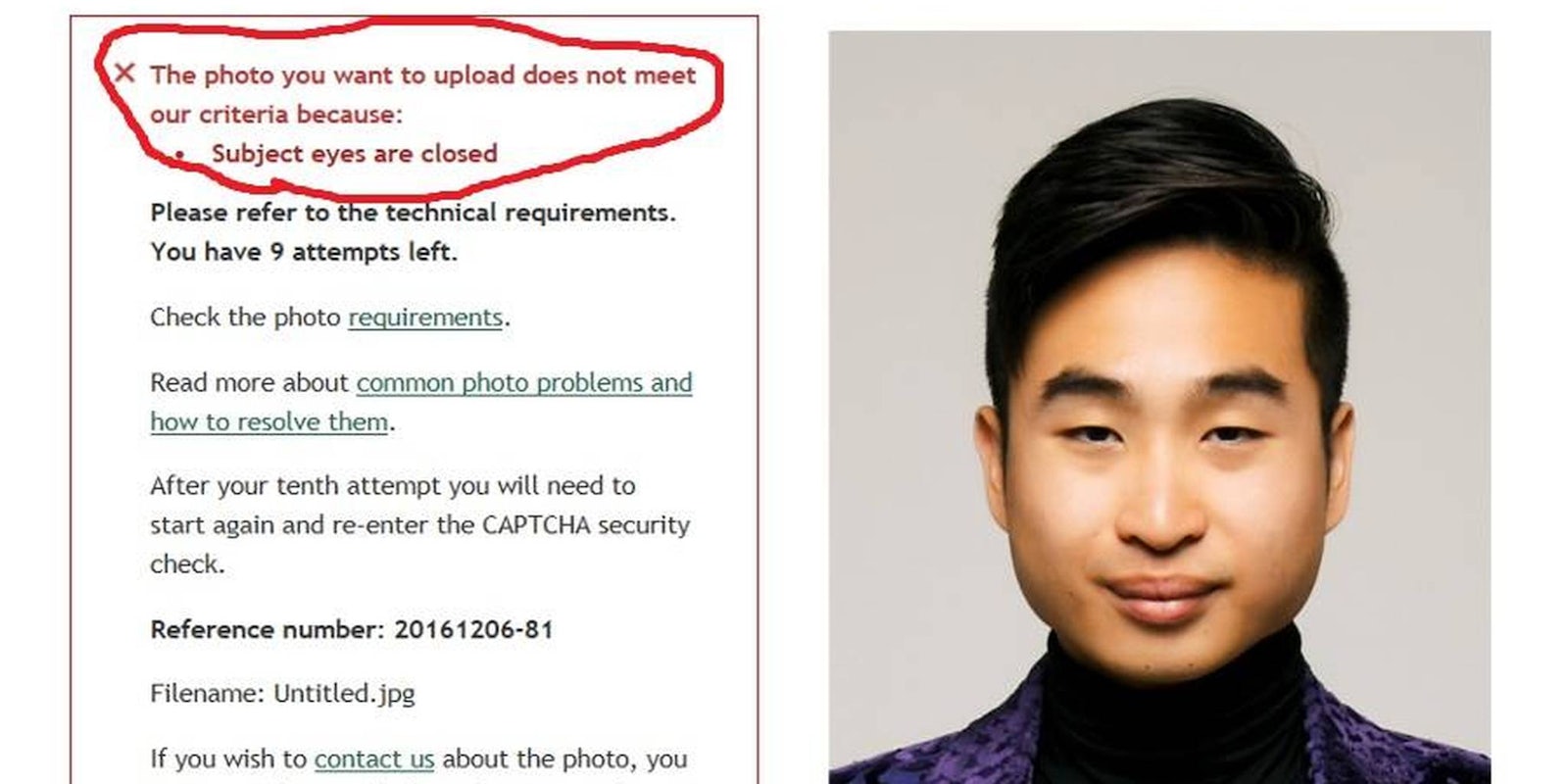

Richard Lee, a 22-year-old New Zealander of Asian descent, recently had his passport photo rejected when the automated facial recognition software registered his eyes as being closed. Lee posted about the incident on Facebook.

Lee told Reuters that it was no big deal: “I’ve always had very small eyes and facial recognition technology is relatively new and unsophisticated.” An Internal Affairs spokesman also said that about 20 percent of passport photos are rejected.

However, as much as people love to claim that technology is inherently unbiased, there are many examples of racial bias in facial recognition software. For a while, Google Photos was auto-tagging images of black people as gorillas. HP’s face-tracking webcams could detect white people but not black people. And many versions of facial recognition software have determined that Asian people’s eyes are closed.

As Rose Eveleth wrote for Motherboard, the bias comes not from the technology, but from the programmers. “Algorithms are trained using a set of faces. If the computer has never seen anybody with thin eyes or darker skin, it doesn’t know to see them. It hasn’t been told how. More specifically: The people designing it haven’t told it how.” However, because engineers say they aren’t intentionally programming racial biases, many refuse to admit there is even a problem.

Lee is right that facial recognition technology is unsophisticated. That’s often because the pool of faces used to train it isn’t as diverse as it needs to be. The technology will always make mistakes, but as it stands now, those mistakes disproportionately affect people who aren’t white.