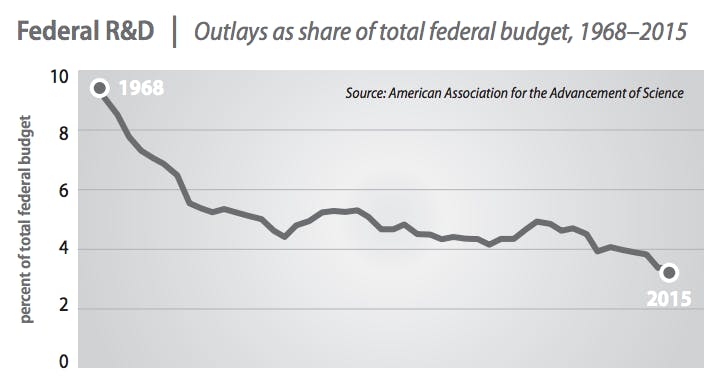

A decline in basic research funding from the U.S. government is impairing the nation’s ability to protect itself from cyberattacks, according to a new report.

The study, titled “The Future Postponed,” was published Monday by a committee at the Massachusetts Institute of Technology (MIT) charged with evaluating the country’s innovation deficit.

America’s cyber insecurity stems, at least in part, from “core weaknesses” in the majority of computer systems, which the researchers describe as antiquated and fundamentally flawed.

“These architectures have their roots in the late 1970’s and early 1980’s when computers were roughly 10,000 times slower than today’s processors and had much smaller memories,” the study says.

Among the problems the researchers noted: several commonly used programming languages were developed before most computers were networked, and as a result they contain “structural flaws” that leave them susceptible to hackers.

Another significant weakness stems from the world’s most common method of authentication: the password.

“Cyber criminals,” the report says, “have developed means of exploiting human laziness and credulity to steal such credentials—guessing simple passwords, bogus emails that get people to provide their passwords, and similar tricks.”

Malicious hackers often gain access to sensitive data and systems by targeting a low-level employee—one who may not have received any useful security training—and escalating their privileges until they gain the desired level of access.

The solution, according to the MIT team, is twofold: completely redesign the world’s computers to eliminate inherent flaws and implement a stronger method of authentication.

The researchers recommended multifactor authentication, which requires a computer user to provide at least two independent proofs of identity. Two-factor authentication is often described as “something you know [a password] and something you have [a smartphone with an authenticator app].”

The report estimates that maintaining inherently flawed computers will cost around $400 billion per year.

“The opportunity exists to markedly reduce our vulnerability and the cost of cyberattacks,” the MIT researchers say in the report. “But current investments in these priority areas especially in non-defense systems are either non-existent or too small to enable development and testing of a prototype system with demonstrably better security and with performance comparable to commercial systems.”

The researchers advise government investment in research aimed at designing a new computer that is proven through “rigorous testing…to be many orders of magnitude more secure than today’s systems.”

According to the report, there has been little research into speeding up the development of new, more secure computer systems or encouraging average consumers to improve their personal security.

“At present, the cost of providing more secure computer systems would fall primarily on the major chip vendors (Intel, AMD, Apple, ARM) and the major operating system vendors (Apple, Google, Microsoft), without necessarily any corresponding increases in revenue,” the report says.

MIT’s report also covered other underfunded areas of innovation, including space exploration, fusion energy, photonics, quantum information technologies, and Alzheimer’s treatment.

The study further stresses the importance of research with long-term benefits, which is often criticized for not yielding profits quickly enough. The report notes that some observers have asked of the Higgs boson (the recently discovered particle associated with the field that gives elementary particles their mass), “What good is it?”

“It is useful to remember,” the researchers counter, “that similar comments might have been made when the double helix structure of DNA was first understood (many decades before the first biotech drug), when the first transistor emerged from research in solid state physics (many decades before the IT revolution), when radio waves were first discovered (long before radios or broadcast networks were even conceived of).”

Photo by Steve R. Watson/Flickr (CC BY-SA 2.0)