As the 2020 election nears, the weaponization of information has become a growing concern among members of both political parties. Machine learning technology has made it easy for anyone to manipulate videos of public figures for malicious use.

As fake videos depicting celebrities from President Barack Obama to Kim Kardashian saying things they never said circulate online, pundits and news personalities have reacted with mild panic.

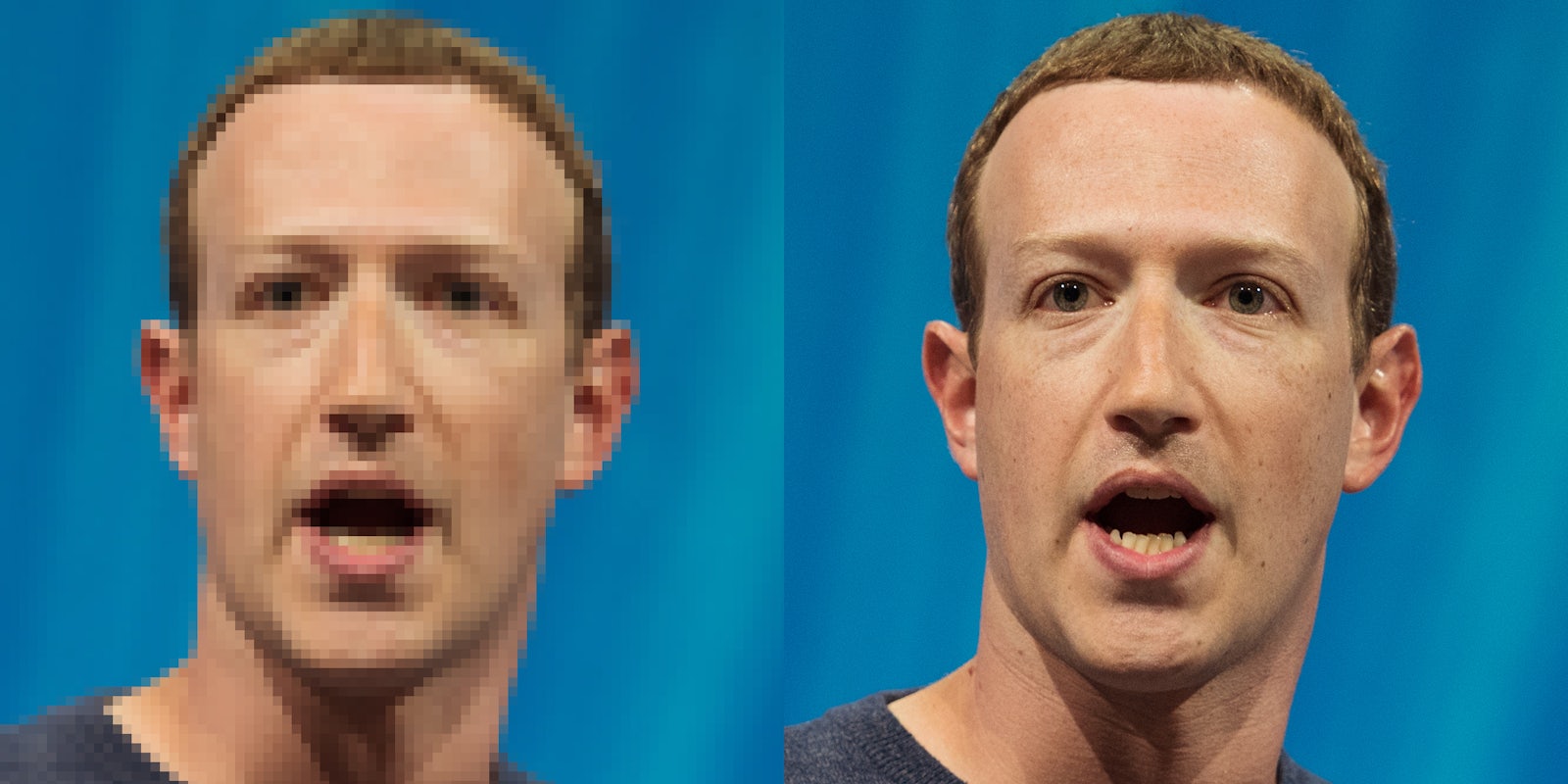

In June, CBS News responded to a video that showed an interview with Facebook CEO Mark Zuckerberg on CBSN, the network’s streaming news service. The interview never occurred. In the fake clip, Zuckerberg bragged about owning people’s data. It was a manipulated version of a video in which the Facebook CEO discussed a strategy to address election interference ahead of the then-upcoming midterm elections and the looming 2020 election.

In an article for CBSNews.com, editorial director Lex Haris points out that “CBS has requested that Facebook take down this fake, unauthorized use of the CBSN trademark,” referencing a statement from CBS News’ legal team.

But the founders of Deeptrace Labs thought they’d spotted a market opportunity to protect consumers from misinformation. About a year ago, they launched the Amsterdam-based firm with the aim of helping distinguish deepfake videos from real ones.

Deepfake videos are made with generative adversarial networks, or GANs, a machine learning technique. A GAN trains two neural networks against each other on a sample set of images or videos: one generates fakes, and the other tries to tell them apart from the real samples. GANs learn rapidly, so deepfake videos grow more convincing to consumers as time goes by.

“As deepfakes continue to improve and the visual signs of manipulation begin to vanish, this analysis will become increasingly integral for detecting deepfakes,” says Henry Ajder, a spokesperson for Deeptrace Labs.

This is why companies want technology that does not just catch deepfake videos after they’ve been created but keeps them from getting out in the first place: technology that verifies video at the source, a camera extension of sorts.

One of the major players is the San Diego-based startup Truepic. One of its signature products is called “Controlled Capture.”

This software operates as an extension to a camera, giving each piece of footage a “unique signature” on the blockchain and protecting the video’s metadata. The metadata includes identifying details like camera type and timecodes. Truepic’s solution would lock those into place.

A blockchain, by its nature, protects recorded data from alteration, so a signature written to it cannot be quietly changed.

Even if a bad actor somehow figures out a way to circumvent these protections, the altered file produces a new digital fingerprint, which a video producer will likely notice. That gives the producer a chance to discard manipulated video before it reaches the public, and it should help news producers sift through video quickly under the harsh deadlines of the daily news environment.
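The fingerprinting idea can be illustrated with a short Python sketch. This is not Truepic’s implementation, which the article does not describe in detail. It assumes a SHA-256 hash computed over the footage together with its metadata, and the names `fingerprint` and `is_unaltered`, the sample camera name, and the sample timecode are all hypothetical. The recorded value stands in for whatever signature would be written to a blockchain.

```python
import hashlib
import json


def fingerprint(video_bytes: bytes, metadata: dict) -> str:
    """Return a SHA-256 hex digest covering both the footage and its metadata.

    Hypothetical helper for illustration only.
    """
    # Serialize metadata deterministically so key order cannot change the hash.
    canonical = json.dumps(metadata, sort_keys=True, separators=(",", ":")).encode("utf-8")
    digest = hashlib.sha256()
    # Length prefix keeps the boundary between metadata and footage unambiguous.
    digest.update(len(canonical).to_bytes(8, "big"))
    digest.update(canonical)
    digest.update(video_bytes)
    return digest.hexdigest()


def is_unaltered(video_bytes: bytes, metadata: dict, recorded: str) -> bool:
    """Check footage and metadata against the fingerprint recorded at capture."""
    return fingerprint(video_bytes, metadata) == recorded


# Placeholder bytes standing in for real footage (assumption for the demo).
original = b"\x00\x01placeholder-video-frames"
meta = {"camera": "ExampleCam X1", "timecode": "2019-06-01T12:00:00Z"}

# At capture time, the fingerprint would be recorded somewhere tamper-evident.
recorded = fingerprint(original, meta)

# Later, any change to the footage or to the metadata yields a different hash.
tampered_video = original + b"\x02"
tampered_meta = dict(meta, timecode="2019-06-02T12:00:00Z")

print(is_unaltered(original, meta, recorded))
print(is_unaltered(tampered_video, meta, recorded))
print(is_unaltered(original, tampered_meta, recorded))
```

Hashing the metadata alongside the footage means that changing a timecode is as detectable as changing a frame, which matches the article’s description of metadata being locked into place.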

This past June in Malaysia, a video of a political aide confessing to having sex with an economic affairs minister made the rounds on social media and area newscasts. The video was proved to be a deepfake, but by the time it was cleared the damage had already been done. The aide, Haziq Abdul Aziz, was arrested and suspended from his job.

Truepic says safeguards like these would keep such videos from getting out in the first place. “Deepfakes will always exist and we will continue to combat them … our goal is to establish truth,” said Mounir Ibrahim, Truepic Vice President of Strategic Initiatives.

Amber Video has a similar product, “Amber Authenticate,” which uses blockchain technology to verify the legitimacy of a video.

But Amber differentiates itself through the customers it works with, choosing to align closely with law enforcement.

“We are focused on critical video that could be used as evidence in court,” says CEO Shamir Allibhai. He says the company is working closely with the companies that make closed-circuit TV networks and police body cams. According to the Washington Post, approximately 18,00 U.S. law enforcement agencies have a body-cam program.

But while this verification technology shows promise, there are major concerns, especially in the case of law enforcement.

“The verification technology is promising but my worry is that as we become more sensitized to the existence of deepfakes and misinformation that may make people easier to manipulate out of your default which is believing what you see,” says Riana Pfefferkorn, Associate Director of Surveillance and Cybersecurity at The Center for Internet and Society at Stanford University.

“There are a lot of unanswered questions but this is a big step,” said Pfefferkorn, adding that “it’s also a matter of cost. My concern is that some police departments will be able to afford products like Amber Authenticate and others may not, which will present challenges for jurors in a court of law. Police departments should be concerned that malicious actors could manipulate video to help meet a certain agenda. Police officers could be framed for actions they did not do.

“If people can’t trust what they see, they will revert to populist behavior,” Pfefferkorn says.
