Earlier this month, researchers at Facebook released a really interesting study. Published in the journal Proceedings of the National Academy of Sciences, the study found that Facebook users were vulnerable to something it termed “emotional contagion,” which means that users who were exposed to lots of sad content on their News Feeds had a tendency to post more sad content themselves. Something similar was observed for users who saw more happy posts.

Then this past weekend, it was revealed that Facebook didn’t just provide the data for the study; it had actively manipulated users’ News Feeds to generate it. Facebook deliberately controlled whether (unknowing) participants were shown sad or happy status updates.

The problem with this little experiment in seeing whether it was possible to make thousands of its users more or less depressed was that the company, which already has a long history of being cavalier about its users’ privacy, didn’t exactly ask for anyone’s permission before conducting the study.

Quite naturally, a lot of people were pretty upset about it.

On Sunday, study co-author Adam Kramer responded to the controversy with a Facebook post defending his team’s actions.

“The reason we did this research is because we care about the emotional impact of Facebook and the people that use our product,” he wrote. “We felt that it was important to investigate the common worry that seeing friends post positive content leads to people feeling negative or left out. At the same time, we were concerned that exposure to friends’ negativity might lead people to avoid visiting Facebook.”

Kramer insisted that the scope of the manipulations was relatively small: certain content was deprioritized for only about 0.04 percent of Facebook users, and only for a single week in 2012.

“Nobody’s posts were ‘hidden,’ they just didn’t show up on some loads of Feed. Those posts were always visible on friends’ timelines, and could have shown up on subsequent News Feed loads,” he explained. “And we found the exact opposite to what was then the conventional wisdom: Seeing a certain kind of emotion (positive) encourages it rather than suppresses it.”

Kramer continued:

And at the end of the day, the actual impact on people in the experiment was the minimal amount to statistically detect it—the result was that people produced an average of one fewer emotional word, per thousand words, over the following week.

Facebook has insisted from the outset that the study was legal because the data use policy that all Facebook users must agree to before signing up for the social network allows users’ information to be used “for internal operations, including troubleshooting, data analysis, testing, **research** and service improvement.” [Emphasis added.]

Even so, whether a vaguely worded clause in the little-read data use policy really counts as opting in to a study like this one is debatable.

“If you are exposing people to something that causes changes in psychological status, that’s experimentation,” James Grimmelmann, a professor of technology and the law at the University of Maryland, told Slate. “This is the kind of thing that would require informed consent.”

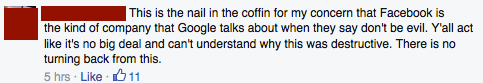

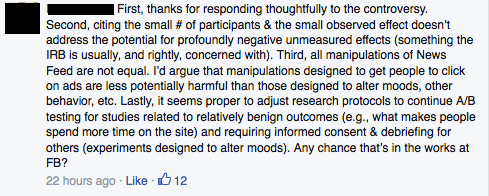

Kramer’s post unsurprisingly triggered a flood of comments from users. Most seemed to appreciate the merits of his defense but remained at least a little uncomfortable with the entire endeavor.

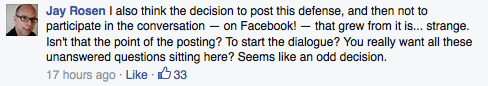

NYU journalism professor and prominent media critic Jay Rosen even chimed in on the overall nature of the discussion.

As Business Insider noted, Facebook did have some defenders. A number of Silicon Valley venture capitalists dismissed the controversy as a tempest in a teapot, among them Chris Dixon and Marc Andreessen of leading VC firm Andreessen Horowitz:

If you a/b test to make more money that’s fine, but if you a/b test to advance science that’s bad? I don’t get it.

— Chris Dixon (@cdixon) June 29, 2014

@BenedictEvans @cdixon Someone is forcing you to use Facebook? Good god, man, call the police! :-)

— Marc Andreessen (@pmarca) June 29, 2014

The firm, it should be noted, was a relatively early investor in Facebook, and Andreessen himself sits on Facebook’s board of directors. For a company constantly on the hunt for ways to boost engagement, Facebook’s realization that showing users a greater percentage of happy posts encourages them to contribute more happy content themselves (rather than become jealous of everyone else’s wonderful, tightly curated lives and engage less) could easily be leveraged to make the company more profitable.

Illustration by Jason Reed