The makeup of Wikipedia editors has become an increasingly controversial topic in recent years, leading to coordinated campaigns to increase the number of female editors or editors who are medical doctors. However, a new research paper by Google Germany engineer Thomas Steiner finds that a surprising demographic accounts for a huge percentage of all edits to the site: robots.

Setting out to discover more about precisely who edits Wikipedia, Steiner created an open-source program that monitors the stream of changes made to the online, crowdsourced encyclopedia and can determine whether each was made by a bot or a human.

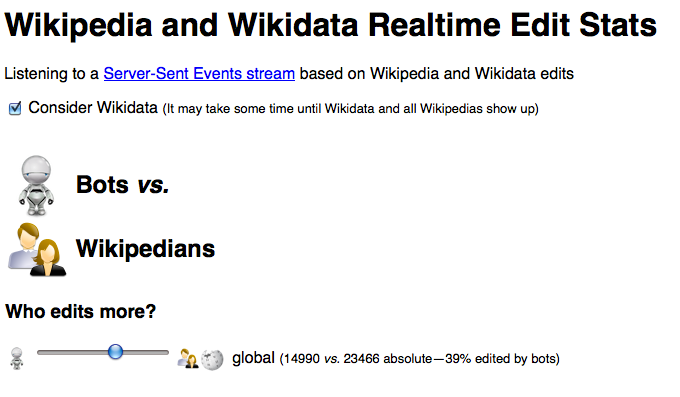

The program found that, over a three-day period in late 2013, more than 3.8 million edits were made to the 287 different language-based Wikipedia sites. As for the percentages of human vs. robot, well, you can see that for yourself by checking out the program’s site.

During a period on Thursday morning, just under 40 percent of all the edits were made by bots.

A check by Kurzweil AI a day earlier saw that number reach 42 percent.

In Thursday’s analysis, the program found a striking disparity in the share of bot edits across different language Wikipedia sites. The English-language Wikipedia site has a low share of edits made by bots (only three percent), whereas the German site was 86 percent bot-edited.

Steiner was able to see all of the changes coming down the Wikipedia pipeline because, every time an edit is made to the site, a notice is automatically sent through an online chat room. This chat room allows anyone interested in seeing new edits to the site to do so as they happen. Steiner’s program watched that chat room and then automatically determined the source of the edits. Figuring out if an edit was made by a bot isn’t particularly difficult because Wikipedia’s bot policy requires all bots to have their own dedicated account with a username clearly identifying them as non-human actors.
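As a rough illustration of that idea, here is a minimal Python sketch, not Steiner’s actual code. It assumes a simplified, hypothetical message format (`<wiki> <page title> * <username>`) rather than the real chat feed’s format, and it guesses at bot accounts with a naming heuristic (a username containing a word that ends in “bot”). The function names `looks_like_bot`, `tally`, and `bot_share` are invented for this example.

```python
import re
from collections import Counter

# Hypothetical, simplified message format: "<wiki> <page title> * <username>".
# The real change feed is formatted differently; this is for illustration only.
LINE_RE = re.compile(r"^(?P<wiki>\S+)\s+(?P<title>.+?)\s+\*\s+(?P<user>.+)$")

# Assumption: a bot account has a word ending in "bot" in its username,
# e.g. "Rambot" or "ExampleBot". This is a heuristic, not an official rule.
BOT_NAME_RE = re.compile(r"bot\b", re.IGNORECASE)


def looks_like_bot(username):
    """Return True if the username matches the naming heuristic."""
    return bool(BOT_NAME_RE.search(username))


def tally(lines):
    """Count edits per (wiki, 'bot' or 'human'), skipping unparseable lines."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match is None:
            continue
        kind = "bot" if looks_like_bot(match.group("user")) else "human"
        counts[(match.group("wiki"), kind)] += 1
    return counts


def bot_share(counts, wiki):
    """Fraction of a wiki's counted edits attributed to bots (0.0 if none seen)."""
    bots = counts[(wiki, "bot")]
    total = bots + counts[(wiki, "human")]
    return bots / total if total else 0.0


# Made-up sample messages in the hypothetical format above.
sample = [
    "en Springfield, Illinois * Rambot",
    "en Berlin * Alice",
    "de Berlin * ExampleBot",
    "de Hamburg * ExampleBot",
    "not a valid message",
]
counts = tally(sample)
print(bot_share(counts, "en"), bot_share(counts, "de"))
```

A name-based check like this will miss bots with unusual names and may misfire on some human usernames, so a real monitor would more likely rely on whatever explicit bot marker the feed provides.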

Automated programs have been making edits and adding articles to Wikipedia for well over a decade. Early examples simply added entries to the site from sources like Easton’s Bible Dictionary and the 1911 Encyclopedia Britannica.

One of the most notable bots from Wikipedia’s early history was Rambot, which created thousands of articles per day about towns across the United States with information drawn from the Census Bureau. While these entries were very basic, and very much read like they were written by a computer program, they largely functioned as useful placeholders that were eventually fleshed out by real human beings.

Today, Wikipedia bots perform invaluable functions, such as cataloguing entries and quickly deleting vandalism. All bots posting to the site are required to go through a formal approval process before they are allowed to commence operation.

“We had a joke that one day all the bots should go on strike just to make everyone appreciate how much work they do,” Chris Grant, a member of the Wikipedia committee supervising the site’s bots, told the BBC. “The site would demand much more work from all of us and the editor burnout rate would be much higher.”

Photo by espensorvik/Flickr