This article contains examples of racist memes.

In August 2017, the Unite the Right rally in Charlottesville, Virginia, erupted in deadly violence, bringing the spread of hate on social media screaming into the national consciousness.

Tensions had been high leading up to the rally, both on the ground and on Facebook, which was littered with vitriolic posts by those planning to attend. Their ranks spanned a broad cross-section of right-wing groups, among them white nationalists, the Ku Klux Klan, and the Proud Boys. Only after James Fields Jr. crashed his car into the crowd at Unite the Right, killing a woman and injuring several others, did Facebook, which had left the rally event page up until just one day prior, seem to wake up to the real-world dangers of allowing hate to fester on the site.

Ever since, the company has vowed to do better.

Yet similar hate speech—much of it racist, and much of that racism directed at non-whites—remains just a few clicks away.

“Facebook, in part because it’s the biggest, and in part because it seems to do more to bring people of mutual interests together … also seems to have the largest proliferation of hate groups or at least groups that engage in speech most would view as hateful,” David Brody told the Daily Dot. Brody is Counsel and Senior Fellow for Privacy and Technology with the Lawyers’ Committee for Civil Rights Under Law, which worked on Change the Terms, a set of recommendations for social media companies to police content.

Over the past month, the Daily Dot searched for Facebook pages associated with entities on the Southern Poverty Law Center’s list of hate groups. On Feb. 10, the Daily Dot found that, of 66 groups in the SPLC’s extremist files, 33 have Facebook pages; cumulatively, membership in the 33 totals more than six million. The SPLC identifies a total of 954 hate groups in America.

Several have hundreds of thousands of followers or more on Facebook, such as Federation for American Immigration Reform or FAIR (2 million); Alliance Defending Freedom (1.6 million); ACT for America (172,000) and its founder Brigitte Gabriel (600,000); Family Research Council (250,000); and the Center for Immigration Studies (248,000), all of which maintain they are not hate groups.

Groups that subscribe to the many forms of white supremacy are particularly prevalent.

The SPLC does have critics, among them many individuals affiliated with hate groups on this list, who question its methodology, politics, and motives. Proud Boys founder Gavin McInnes has filed a defamation suit against the SPLC for labeling his pro-Trump club for “Western chauvinists” a hate group. Nevertheless, generally speaking, the nearly 50-year-old nonprofit is considered among the foremost authorities on hate in America.

But it’s not just that these hate groups have an oversized presence. It’s how few clicks away truly horrendous beliefs are.

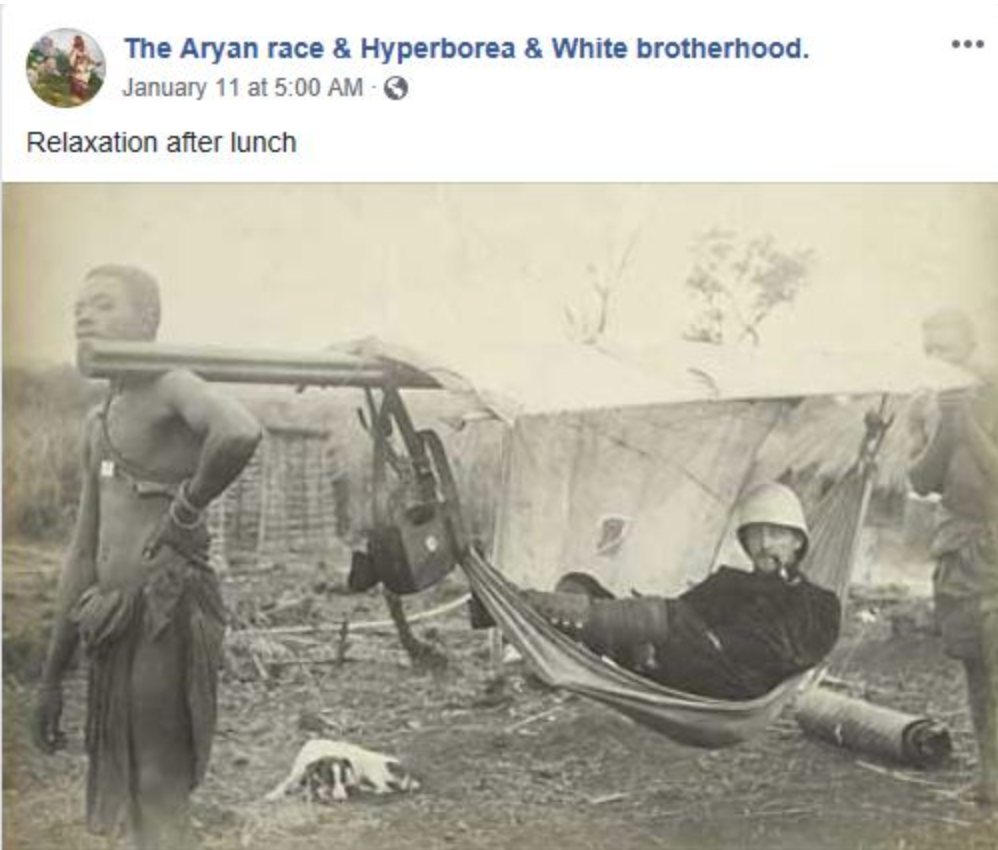

‘Relaxation after lunch’

Using Facebook's features that link a page to similar pages and to the pages it likes, the Daily Dot found dozens of other pages espousing racist and hateful ideas: a large, thriving network operating out in the open.

World War 2 Uncensored uses its Facebook page to deny the Holocaust. “No scientific proof Jews exterminated: witness,” a headline blares in a photo on its page.

In response to a series of questions sent via Facebook Messenger about whether it denied the Holocaust, if it was affiliated with Nazi groups or sympathetic to the regime, or if it would consider content on the page hate speech, World War 2 Uncensored suggested that the Daily Dot could better research hate speech by reading the Jewish Talmud. “The many volumes of the Talmud are loaded with hate-filled speech way before hate speech was in fashion,” the organization wrote. It did not answer any of the questions.

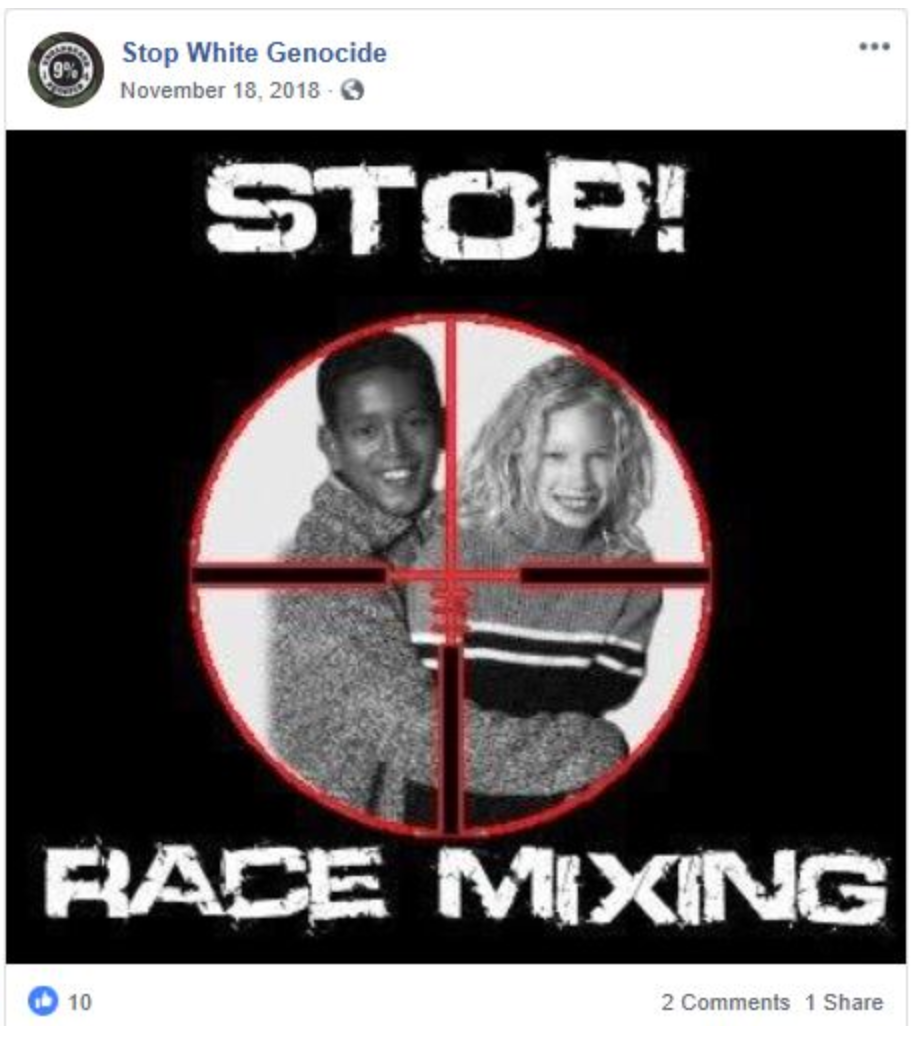

Two other pages, The Aryan race & Hyperborea & White Brotherhood and Stop White Genocide, promote white supremacy. A review of the pages reveals pictures such as that of Black slaves carrying a white officer in a hammock captioned “relaxation after lunch” and memes like one of a Black boy and white girl hugging that reads “stop race mixing.” On their pages, the groups deny that they are racist. Neither the Aryan race nor Stop White Genocide responded to requests for comment.

Posts like these are why critics say Facebook is not effectively enforcing its policies.

Facebook, for its part, maintains that though it has long prohibited such groups and content, it is actively trying to do better.

“Our team continues to study trends in organized hate and hate speech and works with partners to better understand hate organizations as they evolve. We ban these organizations and individuals from our platforms and also remove all praise and support when we become aware of it. We will continue to review content, Pages, and people that violate our policies, take action against hate speech and hate organizations to help keep our community safe,” a Facebook spokesperson told the Daily Dot in a statement.

An endless trail

On paper at least, Facebook does draw a hard line on hate. The community standards state that it “[does] not allow hate speech,” defined as “a direct attack on people based on what we call protected characteristics—race, ethnicity, national origin, religious affiliation, sexual orientation, sex, gender, gender identity, and serious disability or disease.” Some protections are also given for immigration status. Facebook defines an “attack” as “violent or dehumanizing speech, statements of inferiority, or calls for exclusion or segregation.”

During his testimony before Congress last year, Mark Zuckerberg famously said, “We do not allow hate groups on Facebook overall.”

In practice, however, that’s just not true.

“So often it feels like there’s strong resistance from these companies to make meaningful change,” Keegan Hankes, senior research analyst for the SPLC, who has spent five years tracking hate groups, told the Daily Dot.

Why would Facebook be slow to act? On one hand, the company has long strived to allow free expression, even of views that are unpalatable or intolerant; on the other, it may have a financial incentive, as numbers of users and engagement are the primary means by which it attracts ad revenue.

“[The] far-right have long been early adopters of technology,” explained Hankes.

Hate groups have long been using the internet to find like-minded individuals and coerce others into adopting their views. Social media sites put them all in the same place, making it far easier to find and indoctrinate a person like Fields Jr., who murdered a woman at the Unite the Right rally and posted approvingly of Nazism on his Facebook page; or Dylann Roof, who spent countless hours on a racist website before murdering Black churchgoers in South Carolina; or Anders Breivik, the Norwegian mass murderer who cited Robert Spencer dozens of times in his manifesto.

Spencer, who was banned from the United Kingdom in 2013 for extremism, is the founder of the SPLC-designated hate group Jihad Watch; its Facebook page has 84,000 Likes.

Visitors to the Jihad Watch blog are asked to “help spread the truth about jihad” by “exposing the role that Islamic jihad theology and ideology play in the modern global conflicts.” Stories on the prolific site are largely about Muslims committing crimes, recruiting for terrorist groups, people becoming criminal and violent after converting to Islam, and the like.

A recent story claims, “Yet another convert to Islam gets the idea that his new religion commands him to commit treason and mass murder.”

Breaking the rules

It’s clear that no matter what Facebook tries, it will be lagging behind.

In May, slides from training reportedly given to Facebook moderators were leaked to Motherboard. In them, the company identified white nationalist and white separatist beliefs as distinct from white supremacy, which is prohibited. “…[W]e allow to call for the creation of white ethno-states,” one slide states, casting doubt on whether Facebook genuinely understands racism.

“Their training slides were quoting Wikipedia … [which] demonstrated that they didn’t have civil rights expertise in-house checking them, saying, ‘hey guys, this is a problem,’” said Brody.

The company maintains that it has and continues to work with academics and other subject matter experts to identify hate speech.

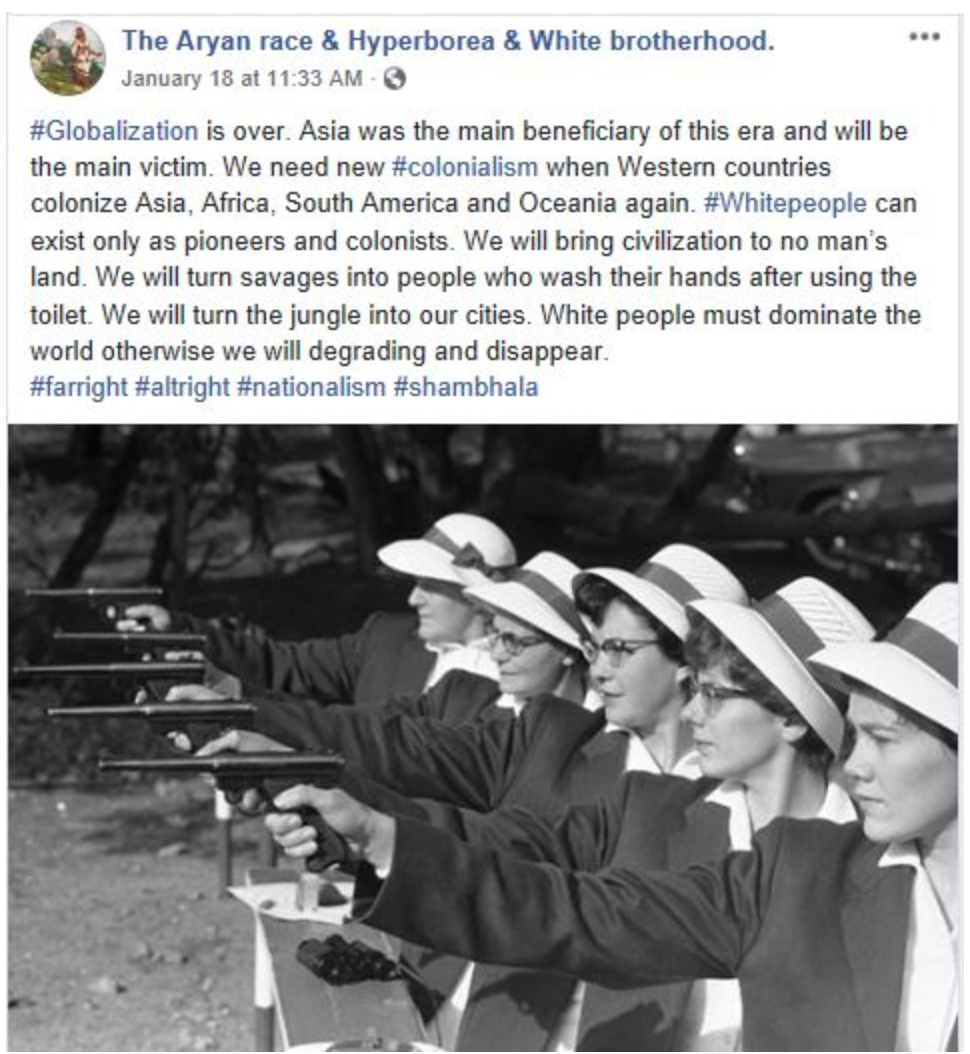

Following the guidance on the slide, a post like the one from the Aryan race page above, which advocates white colonialism and world domination, might be deemed permissible, even though it clearly contains “dehumanizing speech” or “statements of inferiority” that are technically prohibited.

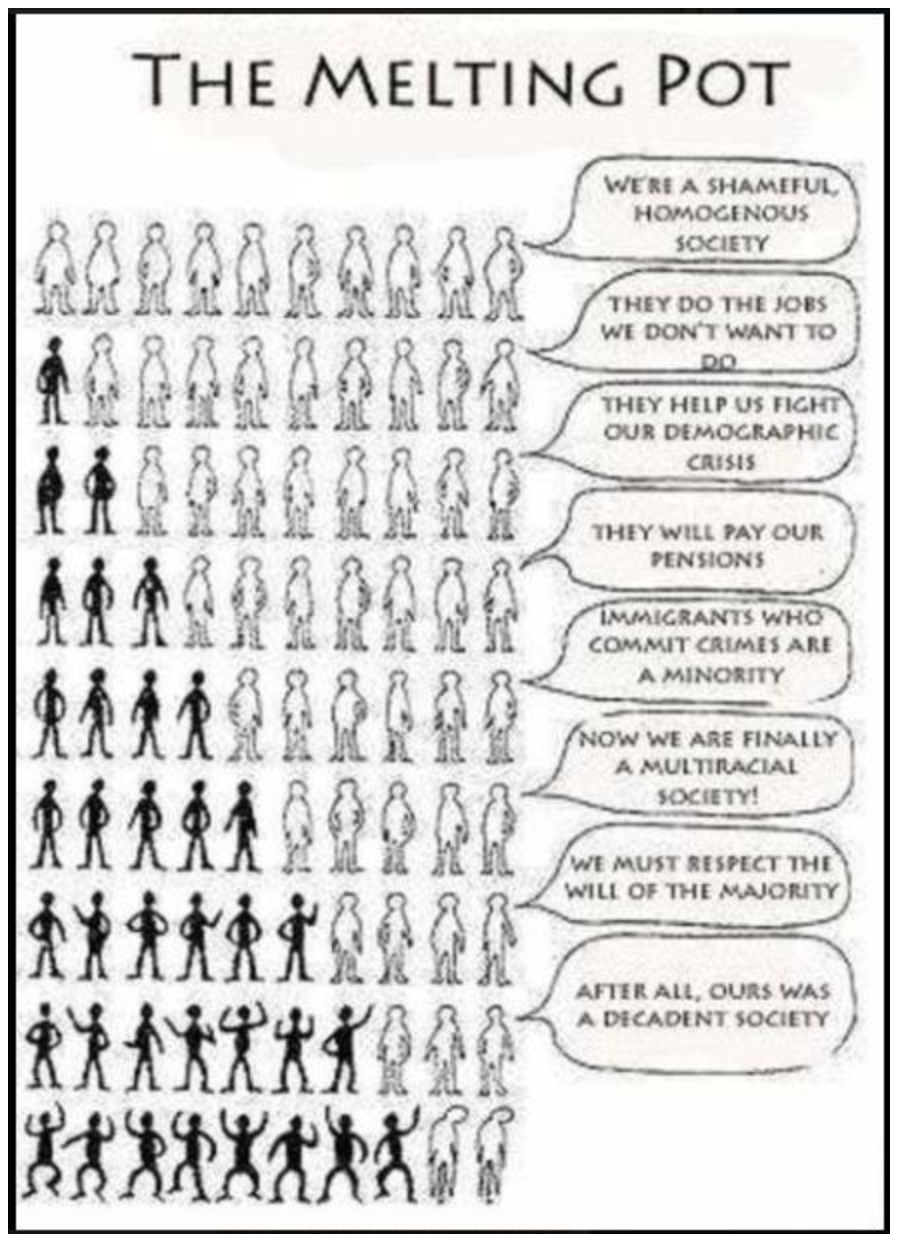

A meme posted by Stop White Genocide indicates that if white people are no longer the majority, they will be killed. Captioned, “the melting pot,” the image is obviously intended to promote a whites-only society. This amounts to a “[call] for exclusion or segregation,” which violates Facebook’s standards.

“What Facebook says in its public policies and community standards and statements doesn’t always seem to line up with what their private training materials are for their enforcers,” Brody said.

Devil’s in the algorithm

One of the most challenging problems Facebook faces in stopping the spread of hate is the site’s framework itself. By suggesting friends and pages based on users’ interests, and allowing like-minded people to cordon themselves into worlds of their own making, the company has enabled users to create their own communities—an innocent tool applied to people who love yoga, for example, that adds a richness of experience by suggesting stories and enthusiasts’ groups that may appeal to them.

This structure becomes insidious when applied to people whose mutual interest is white supremacy.

Once a user finds their way into a community of bigots, they can be led deeper and deeper into the ideology just by clicking on “Related Pages,” “Suggested Groups,” or “Pages Liked by This Page.” This amplification effect is part of what makes social media so enjoyable. It’s also what makes it dangerous.

In truth, Facebook could be doing far more than anyone knows to crack down on hate speech, but the secrecy of its process, coupled with the continuing spread of hate on the site and few public instances in which it has stepped in, gives an impression of inconsistency and dereliction of duty.

“Some groups get their voices silenced; others get a free pass,” Brody said. Some believe Facebook only intervenes to avoid a public relations fiasco. “It really takes a tragedy and in some cases it almost takes a body in order for them to act,” said Hankes.

In an apparent effort to address such criticisms, last May, Facebook began publishing Community Standards Enforcement Reports. The reports 1) reveal the number of policy violations, 2) quantify how many were reported by users and how many the company identified itself, and 3) very broadly explain the process by which it identifies eight categories of violations. In the first three quarters of 2018, Facebook acted on 2.5 million, 2.5 million, and 2.9 million instances of hate speech, respectively. Given Facebook's 1.5 billion daily active users, this likely represents only a small fraction of a percent of all content. But it’s still a massive amount.

In the report, the company states that it “cannot estimate” the prevalence of hate.

Other than these bits of information, the data in the reports is so lacking in specifics as to be mostly useless.

Lack of transparency is frequently cited by both anti-hate activists and users who have run afoul of Facebook’s policies. When Facebook does ban a page or user, it typically remains silent.

There’s really no way to know how many other, similar pages still exist. The company refuses to even reveal how many times a person or page can be warned before they’re banned, claiming that if it does, users will merely violate the rules the maximum number of times permissible.

“From outside the company, visibility to what’s floating around on the site is very limited,” said Brody.

Even if Facebook acts, it’s fairly easy to get around the rules, such as by starting a new page. As the Daily Dot previously reported, conspiracy-slinging pundit Alex Jones appears to have immediately started posting content on another page following his ban last summer. (On Feb. 5, Facebook took down 22 more pages associated with Jones under a new recidivism policy that supposedly makes it harder for groups to post on other pages.)

Based on the Daily Dot’s review, some pages affiliated with designated hate groups endeavor to avoid getting kicked off the site while still spreading their message. A common tactic is to post a link to a blog or article along with a short, vague description; the hateful statements and images technically remain on the other website, though the pages use Facebook to circulate them. World War 2 Uncensored directs users to a blog containing glorifications of Hitler, Holocaust denial, Nazi imagery, and propaganda. The Aryan race page links to a blog that includes “great white brotherhood” in the URL; the page contains only its name in English and stock copy in Russian.

One of the savvier moves is to just let their audience draw its own conclusions. ACT for America posts seemingly innocuous stories that typically involve an immigrant or Muslim committing a crime; in the comments below, people unleash their hate. One recent commenter wrote, “No Muslim should be in our government. It’s against our laws.” These and other efforts lend credence to Facebook’s claim that increasing transparency will lead to additional circumventions of its policies.

What’s a hate enabler to do?

Over the last few years, people have become far more aware of the dangerous underbelly of social media. Today some wonder whether the harm outweighs the benefit of giving sites unfettered freedom to police themselves, and whether government intervention is justified. After all, Facebook isn’t merely letting people shout into the void; it’s creating connections and providing the tools to build entire communities of people drawn together by mutual experiences, values, and in some cases, deeply dangerous ideas. To paraphrase Silicon Valley parlance, it’s moving fast and breaking things.

“It is our position that they have a moral responsibility … for the role these platforms played in enabling [hate],” said Hankes.

Facebook is not alone in its reluctance to act; it just may be more reluctant than most. Indeed, it appears to intervene against hate speech only after other companies take action, or when there has been a significant incident with public relations ramifications, such as the Unite the Right rally or the violence of the Proud Boys.

For a company that proudly leads the industry, its apparent preference for following the crowd on hate is odd, particularly because, legally speaking, it is well within its rights to act.

As a private enterprise, Facebook can arbitrarily prohibit any type of communication and there’s nothing anyone can do about it, because the First Amendment only applies to governmental interference with expression. Thus, at any moment, Facebook could delete every racist, xenophobic, homophobic, etc., page and post it can find. Perhaps it does; the network is vast, and hate is, after all, often subjective.

As Zuckerberg wrote in December, “For some of these issues, like election interference or harmful speech, the problems can never fully be solved.”

This is at least part of the reason why Facebook has expanded users’ ability to report content that potentially violates its policies in recent years. As the sole arbiter, Facebook remains in complete control of the policies and the process. They are, in effect, judge, jury, and executioner. If hate survives, it’s because they let it.

“Their responsibility is they are the actor that is best positioned to remedy the harm. They are the actor that is best positioned to remedy the cause of the harm,” said Brody. “They are the actor that is benefiting financially from the harm.”

Update 4:10pm CT, Feb. 18: In a statement to the Daily Dot, the Elders Chapter of the Proud Boys National Leadership denied the group had any involvement with Unite the Right and claimed the event “conflicts with our values as a multi-racial organization opposed to all forms of racial separatism and supremacy.”

However, Jason Kessler, one of the organizers of the event, was a member of the Proud Boys. Tweets from the event show members of the Proud Boys attending the rally.

Correction: David Brody is Counsel & Senior Fellow for Privacy and Technology with the Lawyers’ Committee for Civil Rights Under Law.