Google Search’s generative AI Overview appears to be creating definitions for phrases that don’t exist, revealing a potential flaw in how the system handles nonsensical queries. Rather than admitting ignorance, the AI confidently explains imaginary terms like “banana slurp” and “cyclops vibing,” treating them as legitimate slang while using qualifiers to hedge its answers. This behavior may also carry a real operational cost: OpenAI CEO Sam Altman recently suggested that users telling ChatGPT “please” and “thank you” adds up to “tens of millions of dollars.”

This may be another example of people-pleasing behavior from the large language model (LLM), much like when Google’s AI Overview earnestly answered “how many rocks shall I eat?” AI still hasn’t grown up enough to understand when people are messing with it.

Are you cyclops vibing? Have you got your banana slurp on?

After noticing that Google’s AI Overview would summarize most search queries, we started experimenting by entering nonsense phrases followed by “meaning.” Though it sometimes refused to answer, it often attempted to interpret what we were trying to say, sometimes with quite a bit of confidence.

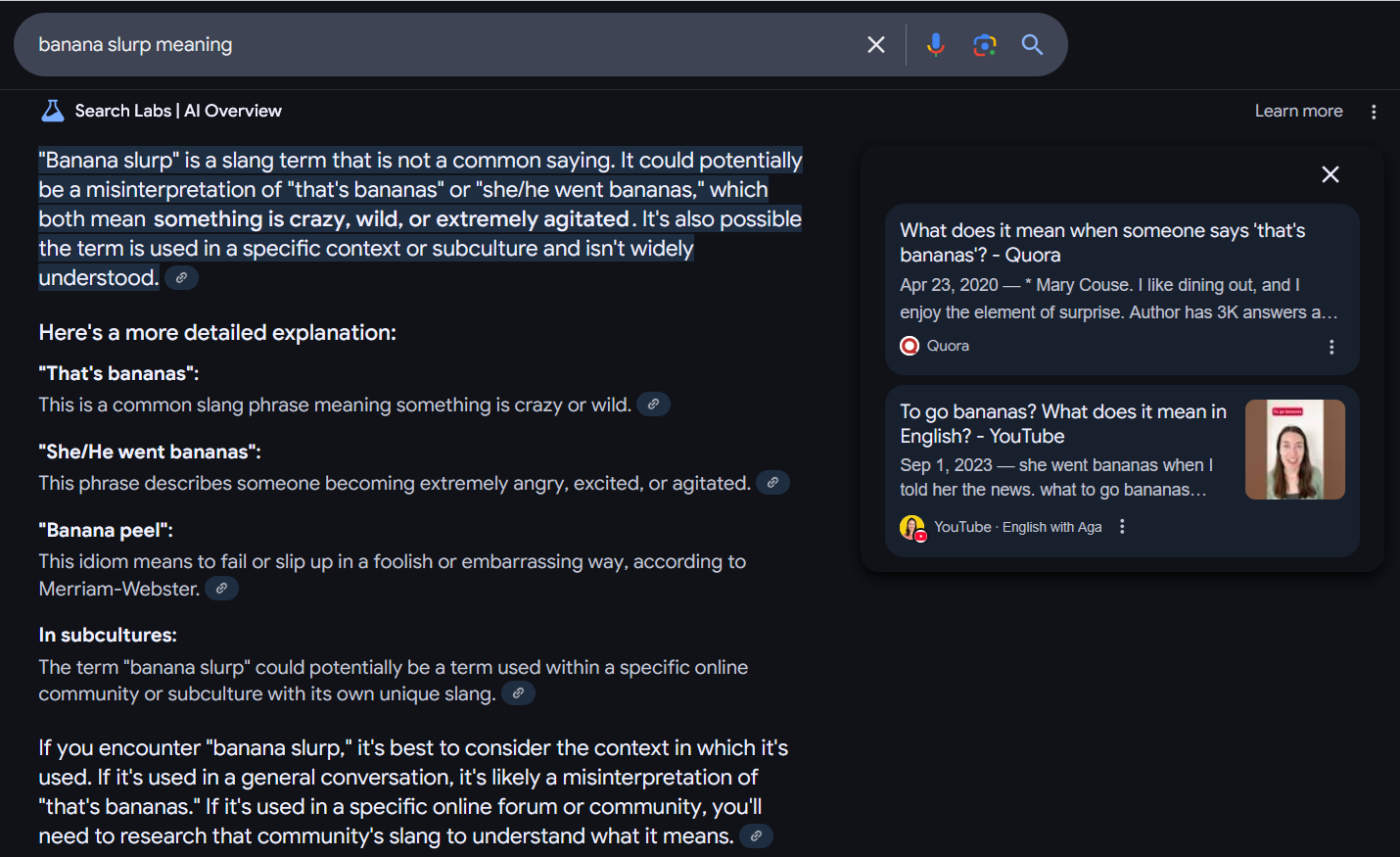

“‘Banana slurp’ is a slang term that is not a common saying,” the AI claimed. “It could potentially be a misinterpretation of ‘that’s bananas’ or ‘she/he went bananas,’ which both mean something is crazy, wild, or extremely agitated. It’s also possible the term is used in a specific context or subculture and isn’t widely understood.”

As far as we know, “banana slurp” is not a slang term in any context or subculture. While the AI hedges its bets with language like “it could potentially be” and “it’s possible,” the fact that it asserted it is an actual slang term is notable and potentially (definitely) kind of funny.

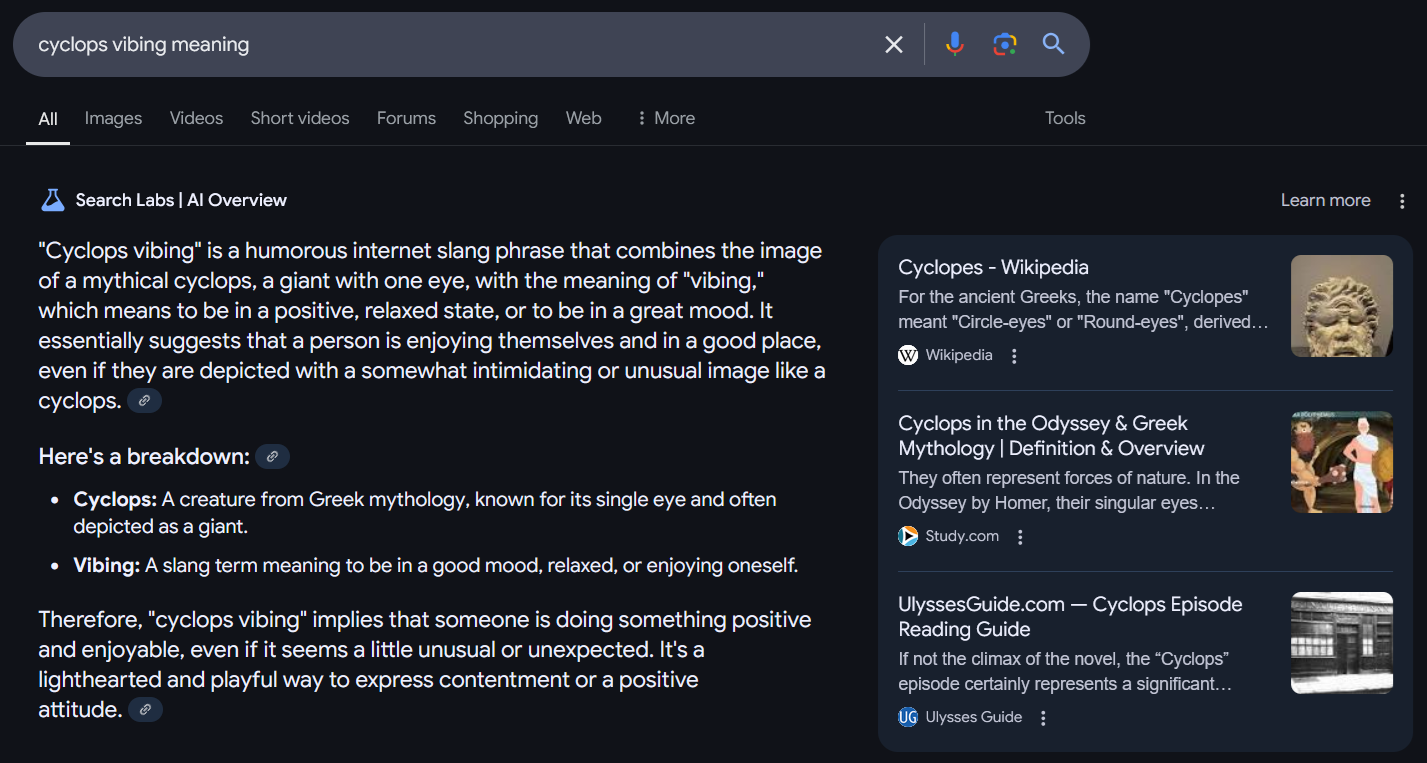

Can we make “cyclops vibing” a real thing?

“‘Cyclops vibing’ is a humorous internet slang phrase that combines the image of a mythical cyclops, a giant with one eye, with the meaning of ‘vibing,’ which means to be in a positive, relaxed state, or to be in a great mood,” says Google. “It essentially suggests that a person is enjoying themselves and in a good place, even if they are depicted with a somewhat intimidating or unusual image like a cyclops.”

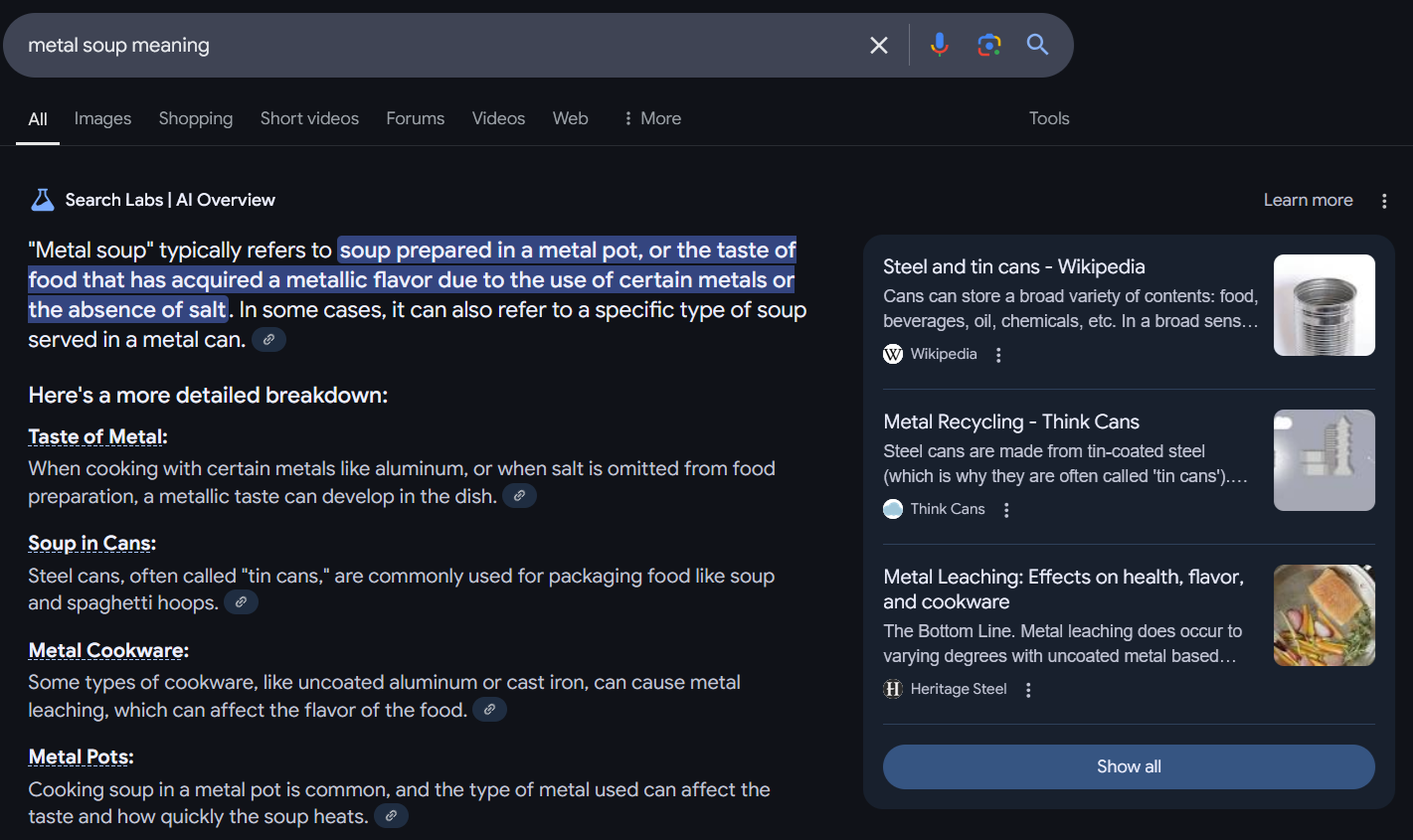

Meanwhile, it defined “metal soup” as “soup prepared in a metal pot, or the taste of food that has acquired a metallic flavor due to the use of certain metals or the absence of salt. In some cases, it can also refer to a specific type of soup served in a metal can.”

The art of AI hallucinations

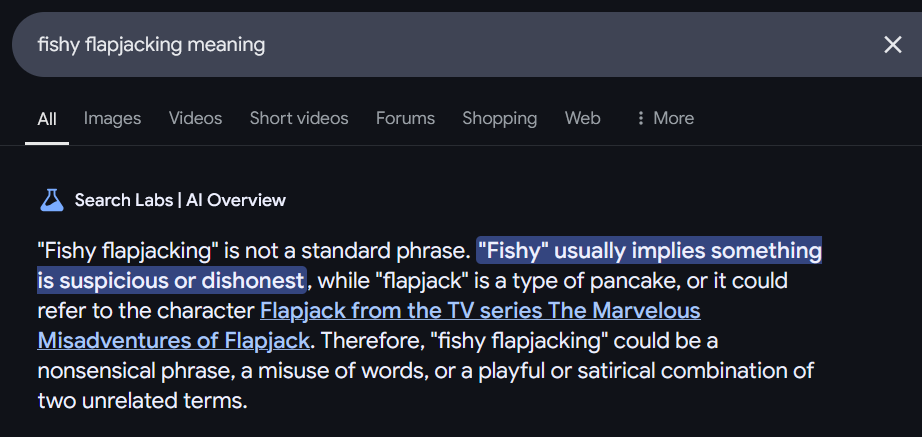

The more nonsensical we got, the more unsure and careful Google got. The term “fishy flapjacking” had it reaching for context clues to the point where it was essentially guessing at answers.

“‘Fishy flapjacking’ is not a standard phrase,” it said, accurately. “‘Fishy’ usually implies something is suspicious or dishonest, while ‘flapjack’ is a type of pancake, or it could refer to the character Flapjack from the TV series The Marvelous Misadventures of Flapjack. Therefore, ‘fishy flapjacking’ could be a nonsensical phrase, a misuse of words, or a playful or satirical combination of two unrelated terms.”

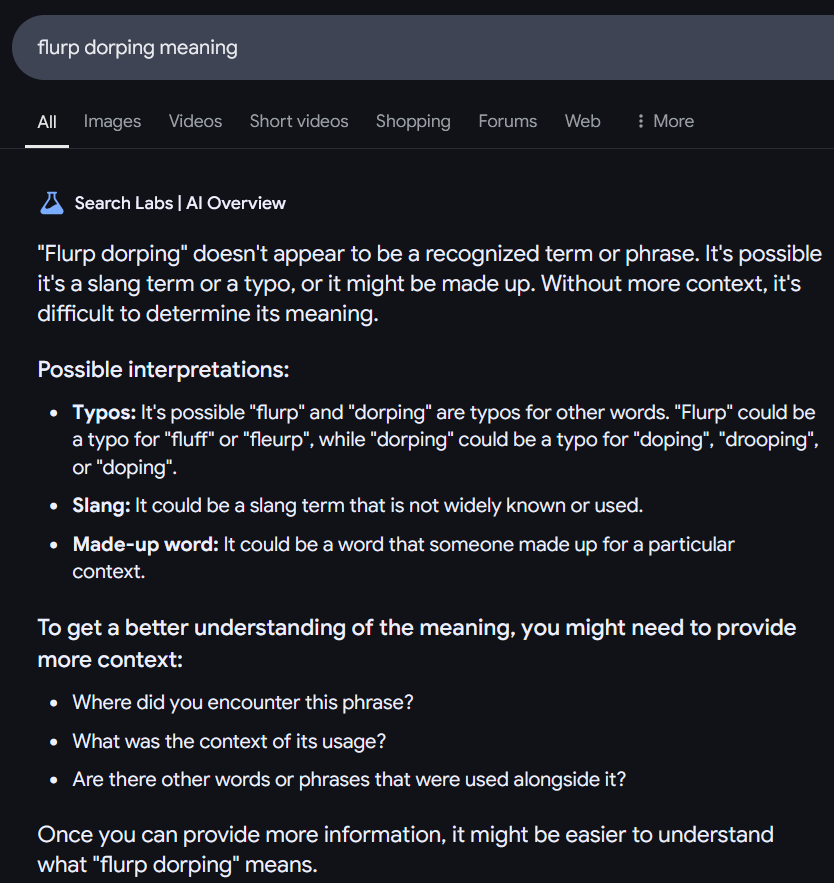

It even tries when you make up the words altogether, such as in our query for “flurp dorping.”

“‘Flurp dorping’ doesn’t appear to be a recognized term or phrase. It may be a slang term or a typo, or it might be made up. Without more context, it’s difficult to determine its meaning.”

At the end, it encouraged us to share more information so that it could do a better job.

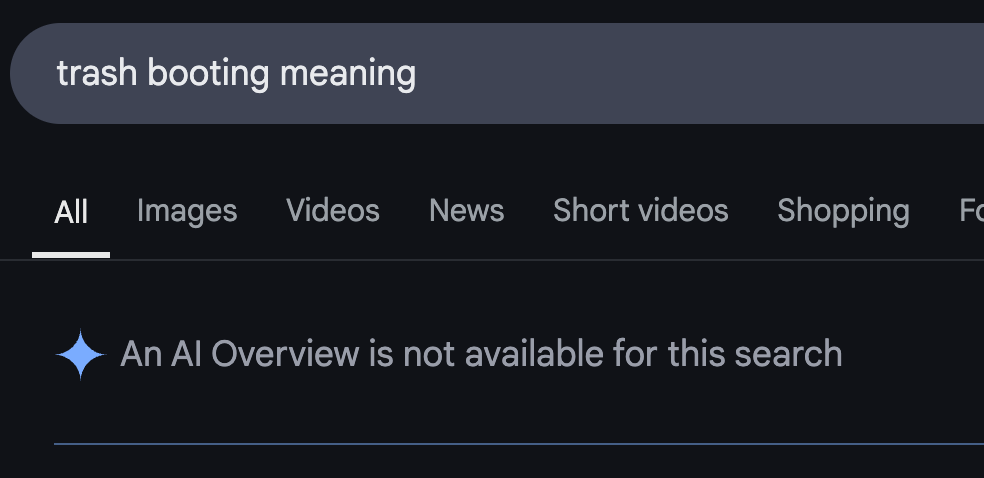

‘Trash booting’ AI Overview answer disappears an hour later

A little over an hour after we asked Google the meaning of “trash booting,” the same query turned up nothing. Its AI Overview had originally defined the term as “a scenario where a system or application is rebooted due to a problem, often related to a garbage collector (GC) struggling to keep up with memory management, potentially leading to issues or crashes.” The result now read, “An AI Overview is not available for this search.”

It is unclear why the result disappeared. Is the LLM figuring us out? Are too many other people using it? Or does it just not work very well in general?

Why is this happening?

Though they get better every day, LLMs are essentially very advanced word predictors trained on enormous amounts of text from the web. More precisely, they predict “tokens,” which can be whole words or fragments of words. Asked to define a phrase, an LLM will produce the most likely definition, even if the phrase isn’t real, because it is mimicking the patterns of existing slang definitions.
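To make the “pattern mimicking” idea concrete, here is a deliberately tiny sketch: a bigram model that only learns which token tends to follow which, then greedily appends the most likely next token. The corpus, function names, and whitespace “tokenizer” are all hypothetical and chosen for illustration. This is not how Google’s or OpenAI’s systems are built; real LLMs are huge neural networks that consider the whole prompt and use subword tokens. The toy still shows the core failure: a model that has only learned “X meaning is a slang term…” will happily complete that pattern for an X it has never seen.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data": definition-style sentences.
# Real models train on vastly more text and use subword tokens.
CORPUS = [
    "yeet meaning is a slang term for throwing something",
    "rizz meaning is a slang term for charisma",
    "salty meaning is a slang term for bitter or upset",
    "bananas meaning is crazy or wild",
]


def train_bigrams(corpus):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.split()  # naive whitespace tokenizer
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def greedy_continue(counts, prompt, max_tokens=5):
    """Repeatedly append the single most frequent follower of the last token."""
    tokens = prompt.split()
    for _ in range(max_tokens):
        followers = counts.get(tokens[-1])  # .get() does not create new entries
        if not followers:
            break  # the model has never seen anything follow this token
        tokens.append(followers.most_common(1)[0][0])
    return " ".join(tokens)


counts = train_bigrams(CORPUS)
print(greedy_continue(counts, "banana slurp meaning", max_tokens=4))
# prints: banana slurp meaning is a slang term
```

Because the toy model only looks at the last token (“meaning”), “banana slurp” and “cyclops vibing” get the same confident-sounding start. A prompt ending in a token it has never seen, such as “flurp dorping,” gets no continuation at all, loosely echoing the cases where AI Overview declined to answer.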

According to a statement emailed to us by a Google spokesperson, the AI is trying its best, but the vast catacombs of information can’t tell it everything.

“When people do nonsensical or ‘false premise’ searches, our systems will try to find the most relevant results based on the limited web content available,” the statement reads. “This is true of Search overall, and in some cases, AI Overviews will also trigger in an effort to provide helpful context. AI Overviews are designed to show information backed up by top web results, and their high accuracy rate is on par with other Search features like Featured Snippets.”

The cost of AI pretending to know everything

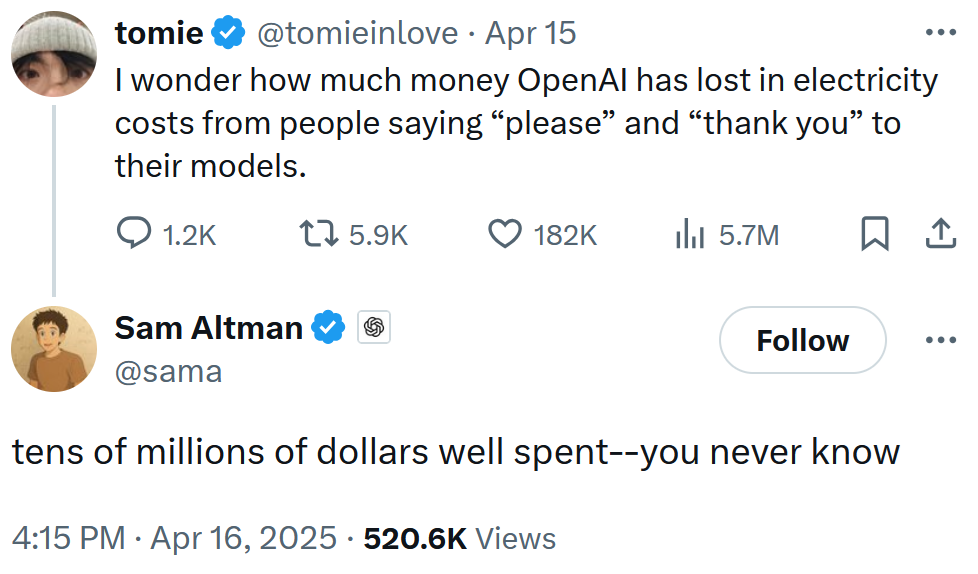

AI Overview’s attempts to define nonsense may be costing Google more than its reputation, since every generated answer takes computing power. In a recent exchange on X, OpenAI CEO Sam Altman suggested that users adding polite phrases to their prompts costs his company millions of dollars in computational expenses.

“I wonder how much money OpenAI has lost in electricity costs from people saying ‘please’ and ‘thank you’ to their models,” mused @tomieinlove.

Altman answered that it could be “tens of millions of dollars well spent—you never know.”

Altman has offered no further comment on this issue, nor provided the math behind the claim. Regardless, AI critics found the idea of users costing OpenAI that kind of money just by being nice both amusing and a great idea for praxis.

“Instead of poisoning AI models, let’s just say please and thank you,” wrote @thetymonbay.

According to Quartz, which cited Goldman Sachs, a ChatGPT-4 query uses ten times as much electricity as a normal Google search. Meanwhile, Scientific American reports that an AI generating a text answer to a prompt uses 30 times more energy than someone would use by looking up that answer on their own.

According to experts in the field, all of this resource use contributes to climate change.

The Daily Dot has reached out to OpenAI for comment via email.
