TikTok has discovered a feature added to the iPhone in December 2024 that tells you the brand and model of any photographed product. In a recent video, one user showed how the integrated ChatGPT program identified this information, along with additional details, for a pair of sneakers. It can also identify plants, dog breeds, and just about anything else, as long as you can get a clear picture.

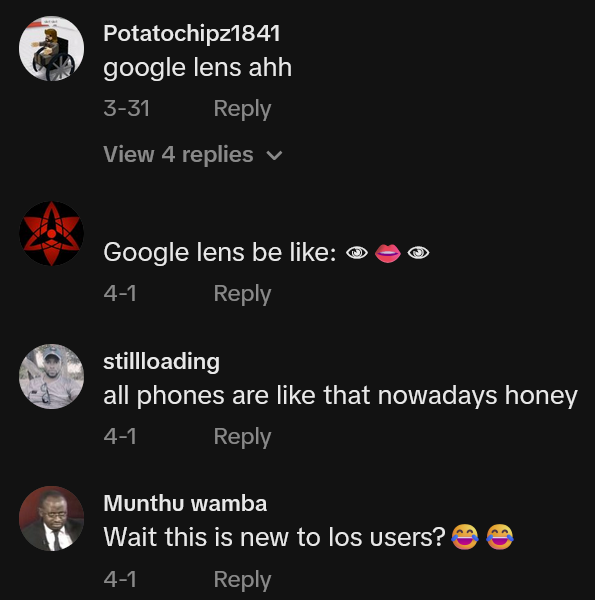

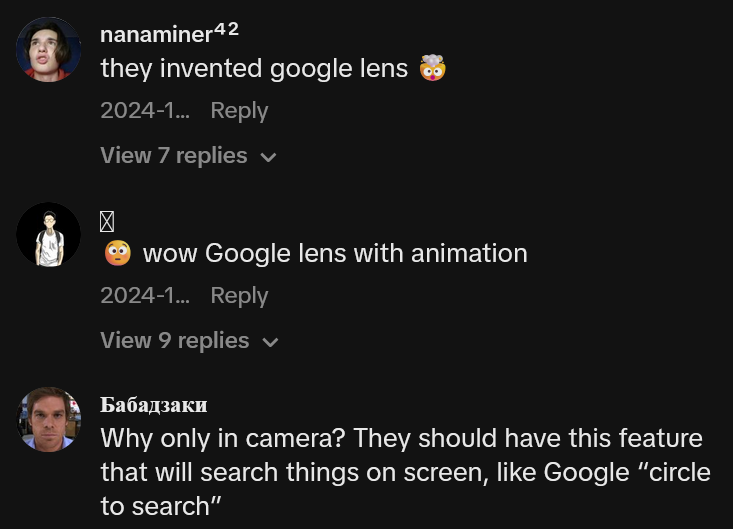

Some, however, were not impressed by the “hack,” accusing Apple of ripping off Google Lens. At the same time, people may need to be wary of ChatGPT results, since users have repeatedly exposed it and other AI programs for providing wildly incorrect information.

How iPhone users are identifying clothes from just a photo

This little trick is possible on iPhone models 15 Pro and higher. As demonstrated by TikTok user @seppseats, it involves the use of the camera and the newly integrated AI program. Simply follow these steps:

- Hold down the Camera Control button on the right side of your phone.

- Point the camera at the item you’re curious about and take a photo.

- Hit the “Ask” button that appears near the bottom left of the screen.

- Query something like “What shoes are these?”

In the video, ChatGPT correctly identifies the red-orange sneakers as the Nike Air Zoom Alphafly NEXT%, which debuted in 2020. It also gives a brief summary of what the shoes are known for.

“How sick is that?” the TikToker asks.

How is Apple’s visual intelligence different from Google Lens?

Giuseppe Federici, the TikToker behind that video, isn’t the only one to publicly demonstrate this iPhone feature. Since its release in an update on Dec. 11, 2024, users have been showing it off to their followers in similar tutorials.

Apple announced the feature and its pairing with programs like ChatGPT in a press release on Oct. 28, 2024.

“Users will be able to pull up details about a restaurant in front of them and interact with information—for example, translating text from one language to another,” the company said. “Camera Control will also serve as a gateway to third-party tools with specific domain expertise, like when users want to search Google for where they can buy an item, or benefit from ChatGPT’s problem-solving skills.”

However, TikTok commenters quickly began to point out that this appears no different from Google Lens, which launched on Oct. 4, 2017. Its product page advertises an identical ability to identify plants and animals, and the first thing it mentions is its ability to identify parts of an outfit from a photo and direct you to websites where you can buy them.

This is not exactly the same as using ChatGPT to provide a written summary about the product, but it would only take an additional click to find that information using Google Lens. Any product page will likely include the brand, model, and description of something like a pair of Nike sneakers.

In one demonstration video, TikTok user @sfyn_hh sarcastically wrote, “wow Google lens with animation.”

ChatGPT sometimes hallucinates

While the iPhone’s use of ChatGPT may set it apart from Google Lens, that may not be a boon for the feature. Large language models (LLMs) like ChatGPT made the news repeatedly over the last year for giving severely and sometimes dangerously wrong answers to simple questions.

Remember when Google’s AI told users to make cheese stick to pizza with glue? Or when it said everybody should eat at least one small rock per day?

The problem with LLMs is that they generate answers with information scraped from the internet, but a lot of stuff on the internet is wrong or meant as a joke. ChatGPT, unable to comprehend satire, may be getting its data from Reddit trolls or articles from The Onion.

An alarming report presented at a tech conference in May 2024 found that ChatGPT got its answers to programming questions wrong 52 percent of the time. Questions about sneakers may be lower stakes, but users may still want to take answers with a grain of salt for the time being. And don’t be fooled by its disarming politeness.

“The follow-up semi-structured interviews revealed that the polite language, articulated and text-book style answers, and comprehensiveness are some of the main reasons that made ChatGPT answers look more convincing, so the participants lowered their guard and overlooked some misinformation in ChatGPT answers,” researchers explained.

The Daily Dot has reached out to Apple and Google for comment via email.
