The team at Google Brain is using two neural networks to turn a blurry grid of pixels into an accurate, detailed approximation of an actual photo.

On television, this kind of image enhancement is known as “zoom in, enhance,” a familiar trope in which the hero unrealistically takes a blurry image, zooms in on it, and sharpens the details to catch the “bad guy.”

In engineering, the technique is called super-resolution imaging. It works by training a neural network, a computing system loosely inspired by the human brain. The network looks for certain details in the original image and fills in the gaps with “hallucinations”: data added based on what it has learned about typical facial features.

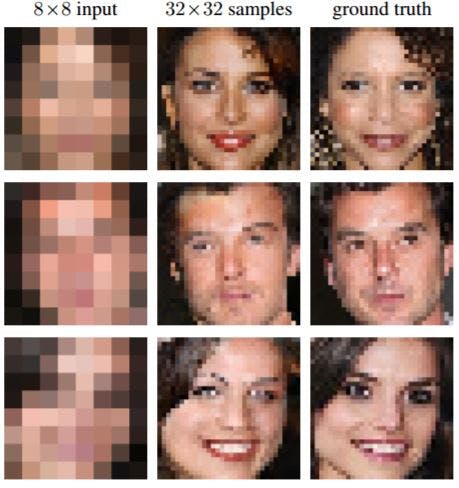

Google used its image enhancer on a number of blurry 8×8-pixel photos to create more detailed and accurate 32×32-pixel versions.
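The size jump matters more than it looks: an 8×8 image has 64 pixels, while a 32×32 image has 1,024, so 960 of the output pixels (15 in every 16) have no direct counterpart in the input. The minimal sketch below shows naive nearest-neighbour upscaling, which simply copies each input pixel into a 4×4 block and therefore adds no new detail; the neural network approach instead predicts plausible values for those missing pixels. This is not Google Brain's method, and the function name `upscale_nearest` and the sample pixel values are hypothetical, chosen only for illustration.

```python
# Illustration only: naive nearest-neighbour upscaling, NOT Google Brain's model.
# `upscale_nearest` and the sample pixel values below are hypothetical.

def upscale_nearest(image, factor):
    """Return `image` enlarged by copying each pixel into a factor x factor block."""
    return [
        [row[x // factor] for x in range(len(row) * factor)]
        for row in image
        for _ in range(factor)  # repeat each source row `factor` times
    ]


# Hypothetical 8x8 grayscale image (values 0-255).
low = [[(x * 16 + y * 8) % 256 for x in range(8)] for y in range(8)]
high = upscale_nearest(low, 4)

known = len(low) * len(low[0])    # 64 pixels actually observed
total = len(high) * len(high[0])  # 1024 pixels in the 32x32 output

# prints "960 of 1024 output pixels are not directly observed"
print(f"{total - known} of {total} output pixels are not directly observed")
```

Because every output pixel is a copy of an input pixel, the upscaled image contains no values that were not already in the 8×8 original, which is why it still looks blocky.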

The results are pretty incredible.

In the image above, the unrecognizable low-resolution input is on the left, the computer-enhanced version is in the middle, and the real image is on the right.

While some of the computer’s creations may look terrifying, the accuracy and amount of detail recovered from an indecipherable 8×8 image is astonishing.

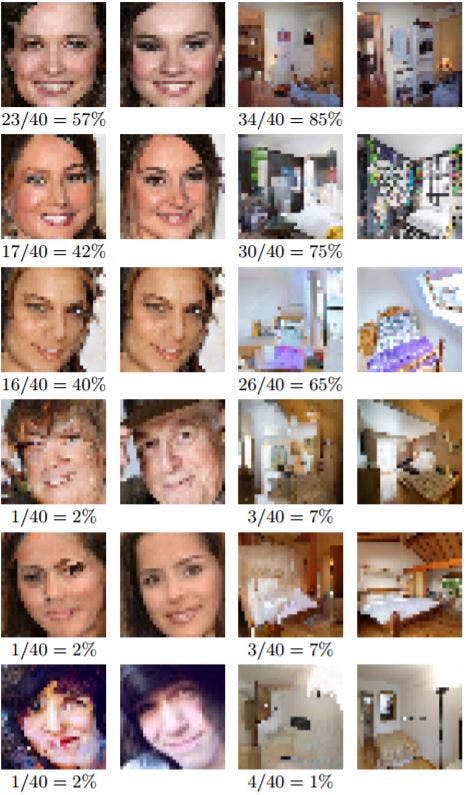

The computer-generated images were convincing enough to fool human observers some of the time. People were asked to choose which picture they thought was the real photo, or “ground truth.” They chose Google Brain’s super-resolution image in about 10 percent of the celebrity-face examples and 28 percent of the bedroom-scene examples.

That level of accuracy could give law enforcement a better idea of how a suspect might look, but the technique is still a long way from being reliable enough to support any conclusive decisions.

H/T Ars Technica