A recent report shows that X employs very few moderators whose primary languages are spoken by millions of users.

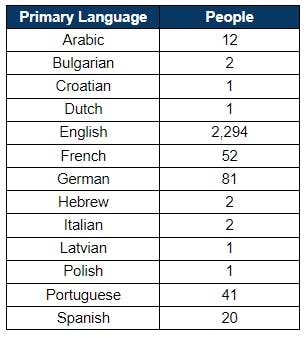

According to the platform’s transparency report filed to the European Commission at the end of October, less than 10% of its 2,294 moderators for the European Union (EU) primarily speak languages other than English. Even some of the most common languages in Europe have few moderators: German (81), French (52), Spanish (20), and Italian (2).

The report showed that X had an average of 115 million active users on the platform in the EU from April to October.

The report was filed to comply with the EU’s Digital Services Act (DSA), new legislation that requires companies to report data related to content moderation, such as the number of user complaints, how many human moderators they have, and what languages those moderators speak. Per the DSA, which went into effect in late August, “very large” platforms with at least 45 million monthly active users are required to publish these reports every six months.

The European Commission has said that the law was necessary, in part, because “online services are also being misused by manipulative algorithmic systems to amplify the spread of disinformation, and for other harmful purposes. These challenges and the way platforms address them have a significant impact on fundamental rights online.”

The report comes as X grapples with an onslaught of complaints about hate speech and harassment on the platform, and amid a scandal over owner Elon Musk sharing antisemitic conspiracy theories.

X has long been criticized for what many view as a lackadaisical approach to content moderation and for failing to enforce its own policies. Those complaints have intensified significantly since Musk purchased the platform.

In November, Media Matters for America found that X was placing advertisements for Apple, IBM, Oracle, and other corporations next to white nationalist content. In response, IBM suspended all ads and said the situation was “entirely unacceptable.” Apple, Disney, and other companies also paused advertising on X.

In recent weeks, X CEO Linda Yaccarino has attempted to assuage concerns about the company’s commitment to combating hate speech.

“X’s point of view has always been very clear that discrimination by everyone should STOP across the board—I think that’s something we can and should all agree on. When it comes to this platform—X has also been extremely clear about our efforts to combat antisemitism and discrimination. There’s no place for it anywhere in the world—it’s ugly and wrong. Full stop,” Yaccarino tweeted.

Replies to the post suggest many users were not convinced. Many mockingly asked whether Musk was aware of the policy.

“Have you met your boss?” one of the top comments said.

X did not respond to the Daily Dot’s request for comment.

Not enough moderators

Comparing X’s report with those filed by other platforms reveals that it has far fewer moderators than some of its competitors. These moderators are tasked with reviewing a wide variety of content, including hate speech, doxing, revenge porn, violence, scams, self-harm, child exploitation, and intellectual property rights violations.

According to their transparency reports, Facebook and Instagram parent company Meta has 15,000 moderators, YouTube has 17,000, and TikTok has 6,000 within the EU. X has fewer than 2,300.

The European Commission has expressed concern about X’s relatively low number of moderators. After the report came out, a senior Commission official told Reuters they hoped X would feel pressured to hire more moderators.

The report shows that the number of moderators who speak certain languages is highly disproportionate to the number of users who speak them. X has a single Dutch-speaking moderator for 9 million active users in the Netherlands. Just two moderators list Italian as a primary language, while more than 9 million people use X in Italy.

The report shows X has just one moderator each for four languages: Croatian, Dutch, Latvian, and Polish. X has 14 million users in Poland, the fourth-highest number among EU nations.

The transparency report also lists English as a primary language for all 2,294 moderators. It’s not clear whether this is an error or whether every moderator who speaks another language is also fluent in English.

The company’s report states, “X has analysed which languages are most common in reports reviewed by our content moderators and has hired content moderation specialists who have professional proficiency in the commonly spoken languages.”

X didn’t answer the Daily Dot’s request to clarify whether the moderators who list two primary languages are bilingual.

Many believe that X’s relatively small moderation team, the vast majority of whom primarily speak English, makes the company less effective at enforcing its policies.

“Some of the notes that I’ve heard are that about 90% of trust and safety content moderation staff are English-speaking,” Lauren Krapf, the Anti-Defamation League’s director of policy, told the Daily Dot. “But you know, the concern [is] that a huge number of individuals who are using these platforms are non-English speakers as far as we know, and yet the trust and safety resources don’t align with those percentages.”

The war between Israel and Hamas has caused a sharp increase in online antisemitism, Islamophobia, and disinformation about the conflict.

The ADL found that antisemitic content increased nearly tenfold on X after Hamas’ attack on Israel, while Facebook “showed a more modest 28% increase.”

“We’ve definitely seen a surge in misinformation proliferating online, especially as it relates to the war between Israel and Hamas and the ongoing conflict, and the humanitarian crisis in Gaza,” said Krapf.

Some platforms have allocated resources to curb the proliferation of hate and disinformation inspired by the war.

Meta said in a statement on Oct. 13 that it established a “special operations center staffed with experts, including fluent Hebrew and Arabic speakers.”

X claims it has also ramped up enforcement. On Oct. 11, Yaccarino tweeted, “In response to the recent terrorist attack on Israel by Hamas, we’ve redistributed resources and refocused internal teams who are working around the clock to address this rapidly evolving situation.”

According to its transparency report, X has 12 moderators who speak Arabic and just two who speak Hebrew, the primary languages of Palestinians and Israelis, respectively.

A first step towards transparency

Experts who spoke to the Daily Dot applauded the DSA and said they expect it to become the global standard, while acknowledging that there is a long way to go to improve content moderation and transparency.

Some have complained that the first reports released to comply with the legislation are vague and inconsistent, making it difficult to compare content moderation across platforms. Platforms use different definitions of complaints and other terminology; they also use different standards to determine what constitutes hateful content.

“What we’re seeing is that transparency reporting is hard; even big companies with a lot of resources, they haven’t necessarily been able to pull all the data,” said Agne Kaarlep, head of policy and advisory services at Tremau.

“I think this just showcases that it’s not necessarily easy to provide some of this data, and it’s also not easy to ensure that the data is good and accurate and has integrity behind it,” Kaarlep added.

Kaarlep, who previously worked as a policy officer at the European Commission, said the legislative text can be interpreted in many ways, there is no uniform standard for how service providers record data, and it is often hard to track and separate signals in a real-world setting. For instance, simply defining “European users” or tracing the spread of a piece of misinformation can be extremely difficult.

“We live with the fact that some of the data would be hard to measure, it’s not going to be a clear cut. You can’t even go by language because there are lots of languages in the EU that are not official languages; there are diaspora populations. Location can also be complicated. How do you count people speaking to their relatives across the pond?” said Kaarlep.

Kaarlep acknowledged that these are early days for the new reporting requirements and said the Commission will likely provide more guidance and refine how platforms must format their reports in the coming months, which should make it easier to compare metrics.

Some are optimistic that the DSA will ultimately improve online safety.

“It’s a very ambitious piece of legislation. It’s not only about transparency, it’s about motivation, it’s about risk mitigation, it’s about giving option for users to notify if they encounter illegal content, so it’s incredibly broad,” said Christoph Schmon, international policy director at the Electronic Frontier Foundation.

Schmon said the DSA will help researchers and civil society groups better understand how algorithmic decision-making and content moderation work.

“Per the rule, platforms need to act once they get a notification of a trusted flagger, which can be an authority like Europol, and then the platforms would be incentivized to take off the content quickly,” said Schmon.

On the other hand, some worry the law may infringe on freedom of expression or disproportionately affect marginalized groups.

“There is risk of shadow negotiations and a politicized enforcement,” Schmon said. “When it comes to the Gaza conflict, we already saw that the European Commission demanded some actions on platforms that were not described in the DSA, like taking off content within 24 hours.”

In spite of such concerns, many believe that the law is an important step in the right direction. Experts hope that the reports platforms are required to file under the DSA will be useful as both regulators and platforms themselves figure out how to better moderate content.

Shubhi Mathur, a program manager at the Stanford Internet Observatory, which studies abuse on the internet and suggests policy and technical solutions, pointed out that there is no existing data on the right ratio of moderators to users, or on what constitutes a reasonable workload for moderators.

“I think the first iteration of the DSA will lay the foundation of how the European Commission seized on speed and reporting or issues for their guidelines. This is just a ground setting, just so the European Commission knows this is what the current state is,” Mathur said.
