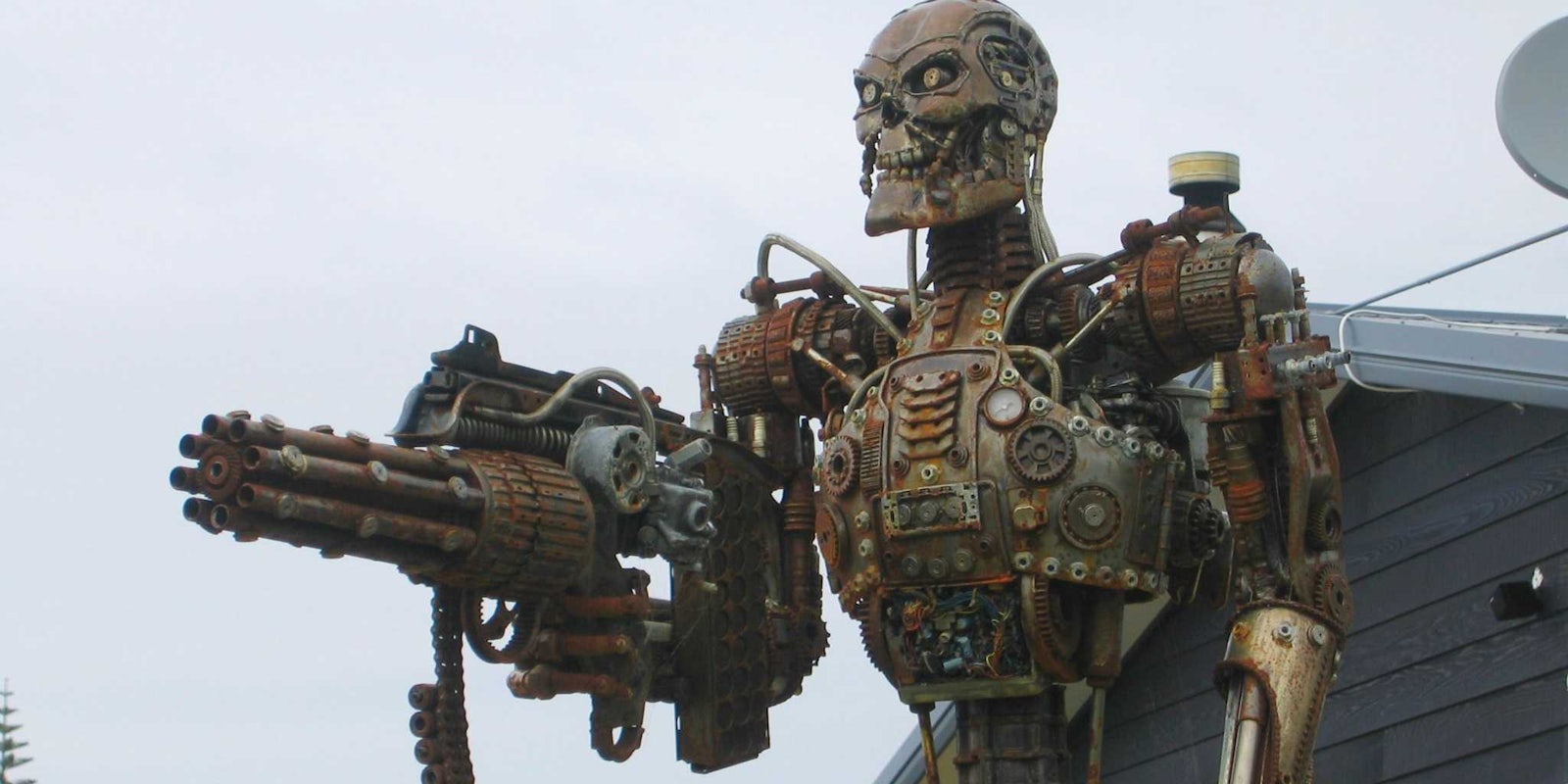

A leading artificial-intelligence group is calling for a ban on the development of offensive autonomous weapons. In a sign of how widely the threat worries experts, the group's open letter has attracted hundreds of signatures from the brightest minds in artificial intelligence and robotics.

Among the signatories of the letter from the Future of Life Institute are renowned physicist Stephen Hawking, Tesla founder Elon Musk, and Apple co-founder Steve Wozniak. The letter is set to be presented at the International Joint Conference on Artificial Intelligence (IJCAI) in Buenos Aires on Tuesday.

The letter, which also attracted signatures from Noam Chomsky and a host of other high-profile thinkers and researchers, urges world powers to be cautious in using artificial intelligence to create weapons.

To raise awareness of the letter, Hawking will be answering questions on Reddit in a week-long AMA (“Ask Me Anything”) focused on “making the future of technology more human.”

The letter draws a bright line between weapons that require human guidance and autonomous weapons that can select and engage targets completely on their own.

Cruise missiles and remotely piloted drones fall outside the proposed ban, the signatories said, because humans still make the targeting decisions. However, “armed quadcopters that can search for and eliminate people meeting certain pre-defined criteria” would be a step too far, because they would remove humans from the decision-making chain.

According to the letter, automatic targeting systems are expected to be feasible within the next few years. A race to develop the technology, the letter warns, would turn autonomous weapons into the “Kalashnikovs of tomorrow”: cheap, easy to acquire, and easy to mass-produce.

The letter’s signatories urged immediate action to ban these systems in order to avoid a “military AI arms race.”

Autonomous weapons development, the letter argues, would result in technology that is “ideal for tasks such as assassinations, destabilizing nations, subduing populations and selectively killing a particular ethnic group.”

Researchers and scientists involved in the development of artificial intelligence also made their case by comparing autonomous weapons to chemical and biological ones.

“Just as most chemists and biologists have no interest in building chemical or biological weapons,” they said, “most AI researchers have no interest in building AI weapons—and do not want others to tarnish their field by doing so, potentially creating a major public backlash against AI that curtails its future societal benefits.”

Hawking and Musk have been particularly vocal about the dangers of AI in the past. Hawking has warned that the development of AI could lead to the end of humanity, and Musk donated $10 million to research on AI safety. Musk has also compared AI advancements to “summoning the demon.”

H/T Ars Technica | Photo via Steve/Flickr (CC BY 2.0)