Facebook is introducing new tools to help people who may be at risk of suicide. Features first available in the U.S. are now rolling out globally to help people recognize and report potentially harmful posts.

The new features include a drop-down menu for flagging posts as suicidal. Reported posts are fast-tracked to Facebook's team of reviewers, who evaluate all types of reported content. If a post is judged to be suicidal, both the person who reported it and the person who posted it are shown a range of support options.

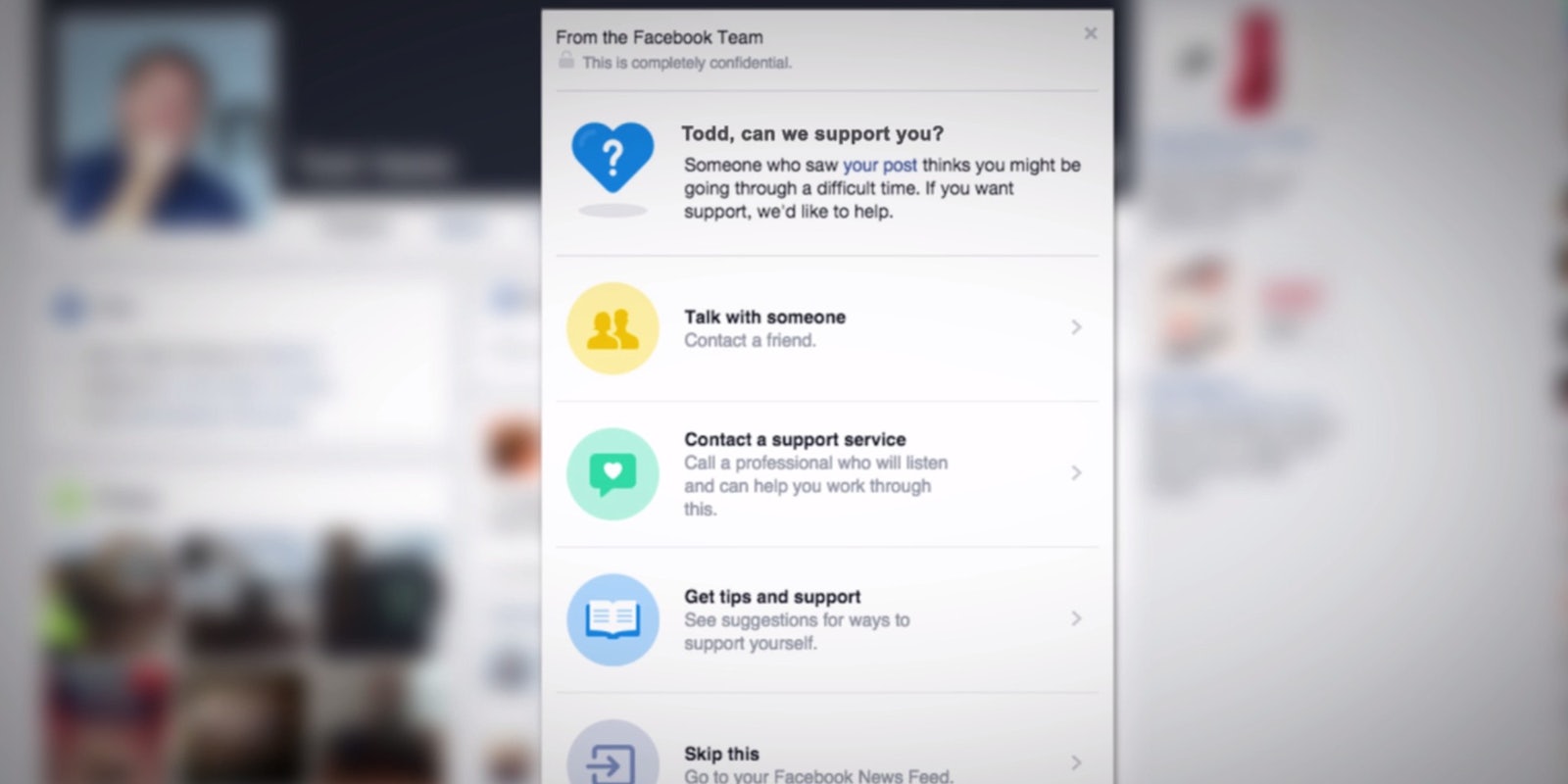

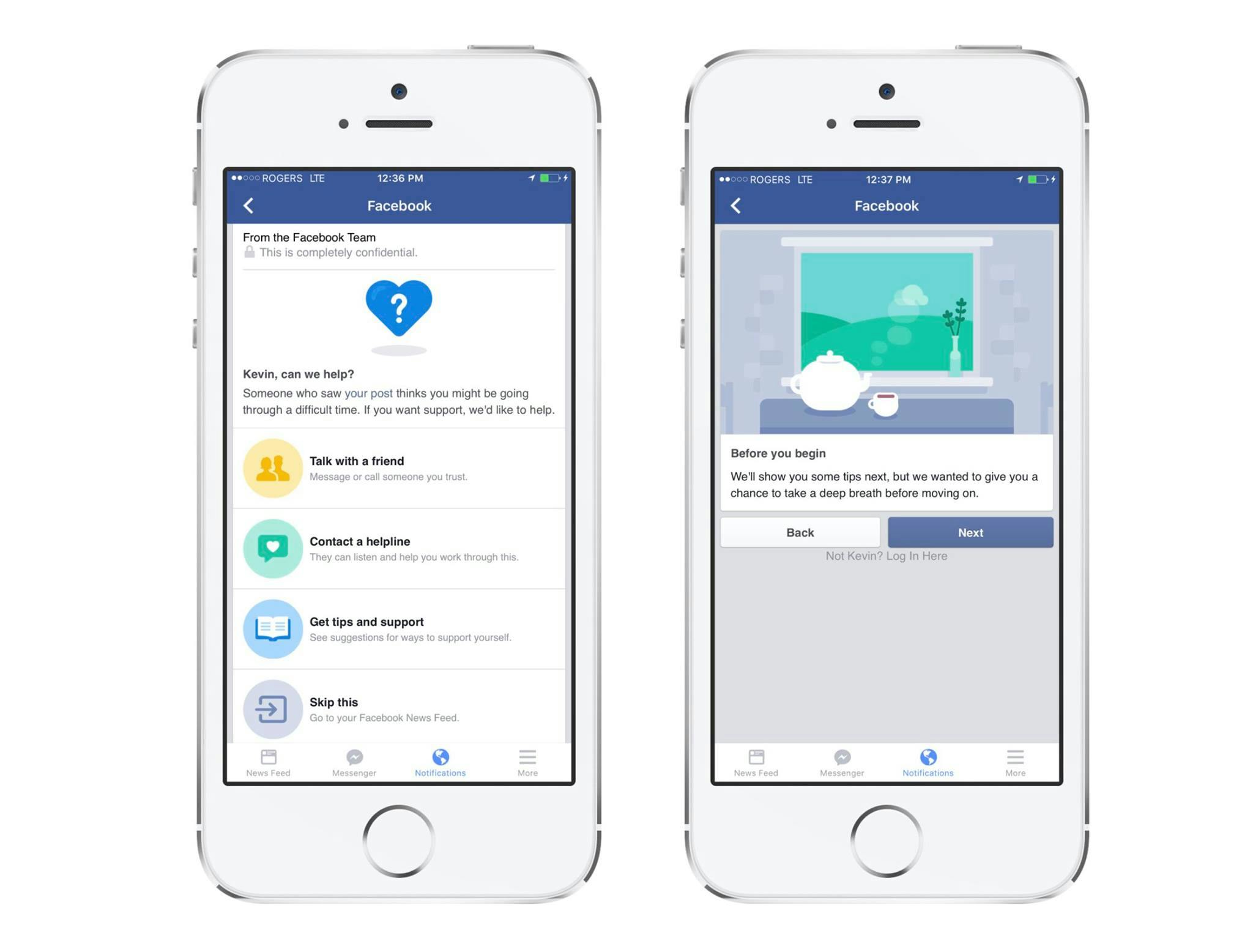

If someone reports a suicidal friend, they will receive suggestions for how to talk with that friend, along with resources and contact information for suicide prevention. The next time the person who posted the flagged content opens Facebook, they will see a notification page telling them that someone flagged their post as potentially harmful. The page also offers supportive tips, such as contacting a friend or a helpline.

The updates come as suicide rates rise across the country. According to a CDC report, the U.S. suicide rate is at its highest level in 30 years. The steepest increases are among girls ages 10 to 14 and middle-aged women.

The tools were created through partnerships with mental health organizations including Forefront, Lifeline, and Save.org.

To report someone's post, tap the right corner of the post to open a drop-down menu. Select "Report post," then "I think it shouldn't be on Facebook," then "It's threatening, violent, or suicidal." You'll then see tips for helping the person, including the option to send the post to Facebook for review. Save.org walks through these steps, and explains why the tools matter, in a video.

Facebook first introduced suicide prevention tools in 2011, and in 2015 it began testing features that connect friends with each other and with mental health resources. The new features, now rolling out to all of the social network's 1.65 billion users, could help a friend in need. But they also show how far Facebook goes to understand, study, and change our behavior.

Privacy concerns arise whenever Facebook uses its platform to influence behavior or to tackle social issues. In 2014, the company ran a study of "emotional contagion" on the platform, manipulating people's feeds to show more positive or more negative posts. Researchers, privacy advocates, and users sharply criticized the company for not telling people they were part of an experiment.

In an effort to be more transparent about how and why it uses people on the platform as research subjects, Facebook released new information about how it conducts research. This included details about a five-member group of Facebook employees who weigh the ethics, risks, and benefits of a study before it begins. The group is reportedly modeled on institutional review boards, the independent committees required to oversee research in academia.

Facebook's suicide prevention resources are just one way people can get help through digital services. Google, too, displays mental health resources in its search results for related topics. The Crisis Text Line lets people in crisis text with trained volunteers over SMS. The service is meant to appeal to people who want to talk with someone in a familiar way, which makes it especially compelling for young digital natives who use their phones for everything.

Facebook knows everything about you: the type of content you post, what your words mean, where you go, whom you talk with, what apps you use, and your relationship history. Now it will also learn whether your posts seem harmful or suicidal. But tools that let friends report potentially harmful posts could also let Facebook assist someone in crisis, or help a friend reach out directly, equipped with resources for support.